I recently switched from TensorFlow to PyTorch. This tutorial contains the following snippet:

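```python
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)   # 3 -> 6 channels, 5x5 kernel
        self.pool = nn.MaxPool2d(2, 2)    # 2x2 window, stride 2
        self.conv2 = nn.Conv2d(6, 16, 5)  # 6 -> 16 channels, 5x5 kernel

    def forward(self, x):
        # The same self.pool object is called after both conv layers
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        return x
```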
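To see what the two pooling calls do to the tensor, here is a minimal sketch that runs the same layers step by step. It assumes a batch of one 3-channel 32x32 image (the input size is an assumption, not part of the snippet) and repeats `Net` so it runs on its own:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        return x


net = Net()
x = torch.randn(1, 3, 32, 32)  # assumed input: one 3-channel 32x32 image

with torch.no_grad():
    a = F.relu(net.conv1(x))  # 5x5 kernel, no padding: 32 - 5 + 1 = 28 -> (1, 6, 28, 28)
    b = net.pool(a)           # 2x2 window, stride 2 halves H and W -> (1, 6, 14, 14)
    c = F.relu(net.conv2(b))  # 14 - 5 + 1 = 10 -> (1, 16, 10, 10)
    d = net.pool(c)           # same self.pool object again -> (1, 16, 5, 5)
    out = net(x)              # the full forward pass performs these same steps

print(tuple(d.shape))  # (1, 16, 5, 5)
```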

I have two questions:

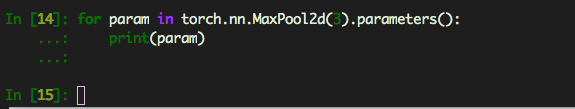

- Will the max pooling used after the two different layers (both calls go through the same `self.pool`) share the same weights?

- If not, how would PyTorch know they are different?
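One way to check this empirically is to ask the module which submodules and parameters it registered. A minimal sketch, again repeating `Net` so it runs on its own (the names `counts`, `pool_a` and `pool_b` are just for illustration):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        return x


net = Net()

# Assigning an nn.Module to an attribute in __init__ registers it as a named
# child (via nn.Module.__setattr__), so each attribute name is its own entry.
counts = {
    name: sum(p.numel() for p in child.parameters())
    for name, child in net.named_children()
}
print(counts)  # {'conv1': 456, 'pool': 0, 'conv2': 2416}

# Only the two conv layers own learnable tensors; MaxPool2d has none.
param_names = [name for name, _ in net.named_parameters()]
print(param_names)  # ['conv1.weight', 'conv1.bias', 'conv2.weight', 'conv2.bias']

# Because pooling has no weights, one MaxPool2d called twice behaves the same
# as two separate MaxPool2d instances with the same settings.
x = torch.randn(1, 6, 28, 28)
pool_a, pool_b = nn.MaxPool2d(2, 2), nn.MaxPool2d(2, 2)
same_result = torch.equal(pool_a(x), pool_b(x))
print(same_result)  # True
```

By contrast, calling the same `nn.Conv2d` object twice in `forward` would reuse its weight and bias tensors, which is how weight sharing is normally expressed in PyTorch.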

Thank you in advance.