Dear all,

I have a pruned, quantized MobileNet v2 model, and I am now trying to simulate its inference from scratch.

Here is a brief sample of my code (not the actual code, but very similar; `m` is the layer):

    import torch

    # _kout (the output channel) is assumed to be set by an enclosing loop (not shown)
    x = torch.dequantize(xin).numpy()[args.img_in_batch]  # input feature map as floats
    w = torch.dequantize(m.weight()).numpy()              # weights as floats

    # scan through feature map xin
    for _bgn_y in range(_xin_h):
        for _bgn_x in range(_xin_w):
            all_channels = 0.0
            # scan through kernel
            for _kin in range(_w_input_ch):
                _ftmp = 0.0
                for _x in range(_w_kernel):
                    for _y in range(_w_kernel):
                        fx = x[_kin][_bgn_y + _y][_bgn_x + _x]
                        fw = w[_kout][_kin][_y][_x]
                        _ftmp += fw * fx
                all_channels += _ftmp
            # add bias, divide by the output scale, shift by the output zero point
            out[_kout][_bgn_y][_bgn_x] = (all_channels + m.bias()[_kout]) / m.scale + m.zero_point
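The last line of the sample produces a real number, while a quantized output is an integer code, so a rounding and saturation step has to follow. Below is a minimal sketch of that step in plain Python. The helper name `requantize` and the 0..255 range (an unsigned 8-bit output) are assumptions for illustration, not something taken from the model above.

```python
def requantize(real_value, scale, zero_point, qmin=0, qmax=255):
    """Map a real-valued accumulator to an integer output code.

    Assumes an unsigned 8-bit output range (0..255); adjust qmin/qmax otherwise.
    """
    # Python's built-in round() rounds exact ties to the nearest even integer.
    q = round(real_value / scale) + zero_point
    # Saturate to the representable range of the output type.
    return max(qmin, min(qmax, q))


if __name__ == "__main__":
    # 1.25 / 0.5 is exactly 2.5, a tie, so round() picks the even neighbour 2.
    print(requantize(1.25, 0.5, 0))
```

Keeping this step in one function makes it easier to swap the rounding rule and rerun the comparison against the reference output.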

I assumed the results would be exactly the same as PyTorch’s Quantized Model.

However, they are only 99.999975% the same:

Out of the 40 million feature map parameters, only 1 or 2 points are off by 1.

After some investigation, I found that the points that are off all had .5 values before rounding,

and they are all randomly distributed.
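To list the affected points, it can help to flag every pre-rounding value that sits on or very near a .5 boundary. This is a minimal sketch in plain Python. The function name `near_ties` and the tolerance `tol` are hypothetical choices for illustration.

```python
import math


def near_ties(values, tol=1e-6):
    """Return the indices of values whose fractional part is within tol of 0.5."""
    hits = []
    for i, v in enumerate(values):
        frac = v - math.floor(v)  # fractional part in [0, 1), also for negatives
        if abs(frac - 0.5) <= tol:
            hits.append(i)
    return hits


if __name__ == "__main__":
    sample = [71.5, 72.49, 3.5000001, 10.0, -2.5]
    print(near_ties(sample))
```

A value that is only approximately on a tie (such as 3.5000001 above) is worth flagging too, because a tiny difference in how the sum was accumulated can move it to either side of .5.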

Ex:

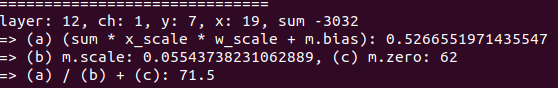

My Calculation:

PyTorch’s output:

72

It seems like it’s a rounding issue, so I have tried different rounding methods, but all in vain.

(I’m currently using banker’s rounding, i.e. round half to even, the one Python 3 uses.)
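For reference, here is a small sketch of three common tie-breaking rules side by side, so the candidates can be checked on the exact values that mismatch. The function names are made up for this example. Which rule the PyTorch backend applies, and whether it rounds the same floating-point value as the loop above, is not established here. If the backend accumulates differently (for example in integers), a value that looks like an exact .5 in one computation may land slightly to either side in the other, and then no choice of rounding rule alone would reconcile the two.

```python
import math


def half_even(x):
    # Banker's rounding: exact ties go to the nearest even integer.
    return round(x)


def half_away_from_zero(x):
    # Ties go to the integer with the larger magnitude.
    return int(math.copysign(math.floor(abs(x) + 0.5), x))


def half_up(x):
    # Ties go toward positive infinity.
    return math.floor(x + 0.5)


if __name__ == "__main__":
    for v in (71.5, 72.5, -2.5):
        print(v, half_even(v), half_away_from_zero(v), half_up(v))
```

The three rules agree on every value that is not an exact tie, so they can only explain mismatches at points whose pre-rounding value is exactly .5 in both computations.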

Any help would be appreciated!

Thanks

Best wishes,

James