Hello

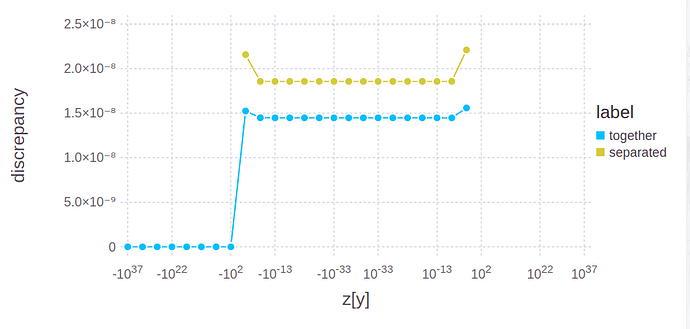

I am a user coming from the Caffe framework. In Caffe, softmax and loss are combined into a single SoftmaxWithLoss layer for numerical stability. Although PyTorch provides a similar function, F.cross_entropy(), I notice that it is actually implemented as two separate functions (log_softmax followed by nll_loss) rather than as a single fused one. Could this lead to numerical stability problems?
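To make the concern concrete, here is a minimal stdlib-only sketch. It is not PyTorch's actual code, and the function names are hypothetical. It contrasts computing softmax first and then taking the log with computing log-softmax directly via the log-sum-exp trick (subtracting the max logit before exponentiating). The sketch assumes a single example given as a list of logits plus an integer class index. The point it illustrates is that splitting the work into "log-softmax, then negative log-likelihood" is not by itself unstable. The unstable variant is "softmax, then log".

```python
import math

def naive_cross_entropy(logits, target):
    # Softmax first, then log: math.exp() overflows for large logits,
    # and log() of an underflowed probability of 0.0 is undefined.
    exps = [math.exp(z) for z in logits]
    total = sum(exps)
    return -math.log(exps[target] / total)

def log_softmax(logits):
    # Log-sum-exp trick: subtract the max logit so the largest term is
    # exp(0) = 1; the remaining terms can only underflow harmlessly to 0.0.
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]

def stable_cross_entropy(logits, target):
    # Negative log-likelihood of the target class, read directly from the
    # log-probabilities (analogous in spirit to log_softmax + nll_loss).
    return -log_softmax(logits)[target]
```

For example, `naive_cross_entropy([1000.0, 0.0], 1)` raises `OverflowError`, while `stable_cross_entropy([1000.0, 0.0], 1)` returns `1000.0`. For moderate logits such as `[2.0, 1.0, 0.1]`, the two functions agree to floating-point precision.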