Hi, I am currently working on extending an existing model with recurrent connections to ensure temporal stability between the frames of a sequence.

When training my existing model I get normal results, as expected.

The network I’m working with consists of several modules, one of which is a U-Net-like module. I have added my recurrent connections to the encoder side of this U-Net.

I have done this by simply supplying each encoder level's output from the previous frame as an extra input to that level.

This is the forward pass of the ConvChain at each level of the encoder of my U-Net:

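    def forward(self, x):
        # On the first frame there is no previous output yet, so initialise it.
        if self.hidden_tensor is None:
            self.hidden_tensor = self.init_hidden(x)

        for idx, m in enumerate(self.children()):
            if idx == 0:
                # The first layer gets the input concatenated with the
                # previous frame's output along the channel dimension
                # (th is torch, imported as `import torch as th`).
                x = m(th.cat([x, self.hidden_tensor], 1))
            else:
                x = m(x)

        # Keep this frame's output for the next frame, detached in place.
        self.hidden_tensor = x
        self.hidden_tensor.detach_()

        return x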
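For reference, here is a minimal self-contained sketch of this kind of recurrent block. One detail that may matter: in the forward pass above, `self.hidden_tensor` and `x` are the same tensor object, so the in-place `detach_()` also detaches the `x` that is returned, and no gradient reaches the layers of this block. The sketch stores `x.detach()` instead, which returns a new tensor and leaves `x` attached to the graph. The class name `RecurrentConvChain`, the layer layout, the zero-initialised `init_hidden` and the `reset_hidden` helper are all assumptions for illustration, not the actual model.

```python
import torch as th
import torch.nn as nn


class RecurrentConvChain(nn.Module):
    """Hypothetical stand-in for one encoder level (not the original ConvChain)."""

    def __init__(self, in_ch, out_ch):
        super().__init__()
        # The first conv sees the input plus the hidden state (out_ch channels).
        self.conv0 = nn.Conv2d(in_ch + out_ch, out_ch, 3, padding=1)
        self.act0 = nn.ReLU()
        self.conv1 = nn.Conv2d(out_ch, out_ch, 3, padding=1)
        self.act1 = nn.ReLU()
        self.out_ch = out_ch
        self.hidden_tensor = None

    def reset_hidden(self):
        # Assumed helper: call at the start of every new sequence so that
        # state from an unrelated sequence is not carried over.
        self.hidden_tensor = None

    def init_hidden(self, x):
        # Assumption: zeros with the same spatial size as the input.
        return x.new_zeros(x.shape[0], self.out_ch, x.shape[2], x.shape[3])

    def forward(self, x):
        if self.hidden_tensor is None:
            self.hidden_tensor = self.init_hidden(x)

        for idx, m in enumerate(self.children()):
            if idx == 0:
                x = m(th.cat([x, self.hidden_tensor], 1))
            else:
                x = m(x)

        # detach() returns a new tensor cut off from the graph;
        # x itself keeps its grad_fn, so this block still trains.
        self.hidden_tensor = x.detach()
        return x
```

With this variant, the returned `x` still has a `grad_fn`, while the stored hidden state carries no gradient history into the next frame. Whether this is related to the loss behaviour is not certain; it is only a sketch under the assumptions stated above.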

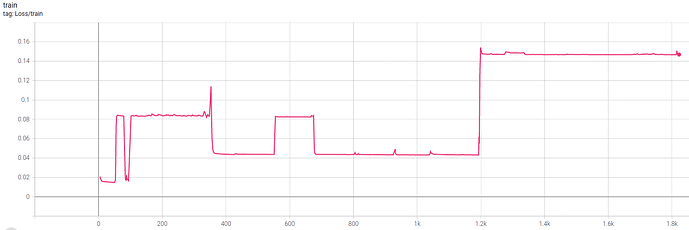

The problem is that my loss goes crazy as seen in the plot below:

My question is: is simply feeding the recurrent connection in as an extra input the wrong way to go about this? If so, how should I approach it instead?

Kind regards,

Emil