Hi,

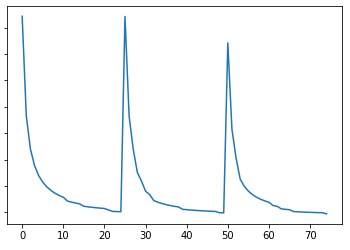

I’ve been working on an RNN model to predict the next word, but something weird is happening with the loss. It decreases within an epoch, but when a new epoch starts, it goes back to where it began. Here is my code:

    for epoch in range(n_epochs):
        current_loss = 0
        current_loss_test = 0
        random.shuffle(batches)
        for i, batch in enumerate(batches):
            loss = torch.zeros(1, dtype=torch.float, requires_grad=True)
            hidden_1 = rnn_1.initHidden()
            optimizer_1.zero_grad()
            for tensor in batch[0]:  # For each word
                output_1, hidden_1 = rnn_1(tensor, hidden_1)
            loss = two_dim_loss(output_1, output_2, batch[1], hidden_1, hidden_2)
            loss.backward()
            optimizer_1.step()
            hidden_1s.append(hidden_1)
            current_loss += loss.item()
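To make the loss bookkeeping easier to inspect, here is a minimal sketch of a helper that records the raw per-batch loss and the per-epoch mean separately, so a jump at an epoch boundary can be checked against both series. `LossTracker` and its method names are hypothetical and not part of the code above. It assumes `loss.item()` is passed in after each `optimizer_1.step()` and that `end_epoch()` is called once at the end of each pass over `batches`.

```python
from typing import List


class LossTracker:
    """Hypothetical helper: keeps per-batch losses and per-epoch means side by side."""

    def __init__(self) -> None:
        self.batch_losses: List[float] = []  # one entry per batch, never reset
        self.epoch_means: List[float] = []   # one entry per finished epoch
        self._epoch_sum = 0.0                # running sum for the current epoch
        self._epoch_count = 0                # batches seen in the current epoch

    def log_batch(self, loss_value: float) -> None:
        """Record the loss of a single batch (e.g. loss.item())."""
        self.batch_losses.append(loss_value)
        self._epoch_sum += loss_value
        self._epoch_count += 1

    def end_epoch(self) -> float:
        """Close the current epoch, store its mean loss, and reset the running sum."""
        if self._epoch_count == 0:
            raise ValueError("no batches were logged in this epoch")
        mean = self._epoch_sum / self._epoch_count
        self.epoch_means.append(mean)
        self._epoch_sum = 0.0
        self._epoch_count = 0
        return mean
```

In the loop above, this would amount to calling `tracker.log_batch(loss.item())` in place of `current_loss += loss.item()`, and `tracker.end_epoch()` after the inner `for i, batch in enumerate(batches)` loop finishes. Plotting `batch_losses` and `epoch_means` together should show whether the reset appears in the raw losses or only in the accumulated value.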

The graph shows the effect that I’m talking about: