Hi @tom, @ptrblck, any plans to do a notebook for StyleGAN2?

I actually have half of it lying around somewhere, but it seems even harder to get to anything than before…

I’ve been able to follow along with the notebook very well. Thanks so much! I’m trying to see if I can instantiate a different version of the generator with different parameters (I want it to generate 512x512 images instead of 1024x1024). I’m trying to only load the pretrained model layers that pertain to the smaller generator, but it doesn’t exactly work that way, I’m finding out.

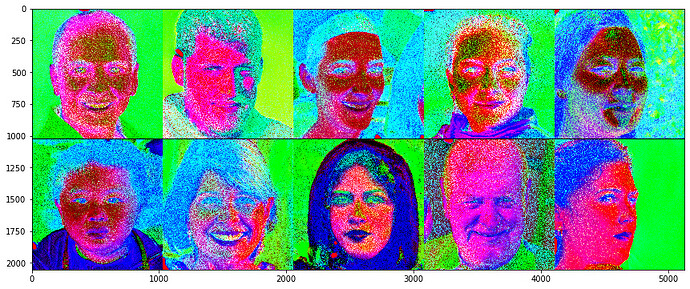

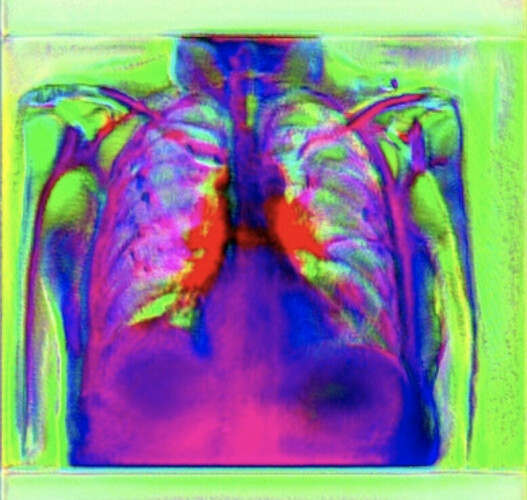

The full model parameters seem to lack the correctly sized ‘torgb’ weight that my smaller generator needs. In the case of the large generator, it seems as though the ‘torgb’ weight and bias are meant to be applied at the last layer to de-normalize the 1024x1024 images so they don’t look like this (the images look good if I don’t remove ‘torgb’ from the state dict).
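For anyone trying the same partial load, here is a minimal sketch of copying only the parameters whose names and shapes match, and reporting what was left out. The two toy modules and the helper `load_matching` are hypothetical stand-ins and not the generator from the notebook; the only assumption carried over from the post is that the final ‘torgb’ layer has a different input width in the smaller model.

```python
from collections import OrderedDict

import torch
import torch.nn as nn


def load_matching(model, pretrained_state):
    """Copy entries that match by name AND shape; report the rest.

    Hypothetical helper for illustration, not part of the notebook.
    """
    own = model.state_dict()
    matched = {
        name: tensor
        for name, tensor in pretrained_state.items()
        if name in own and tensor.shape == own[name].shape
    }
    # Pretrained entries that could not be used (absent or wrong shape).
    skipped = [name for name in pretrained_state if name not in matched]
    # Parameters of the target model that keep their random initialisation.
    missing = [name for name in own if name not in matched]
    model.load_state_dict(matched, strict=False)
    return skipped, missing


# Toy stand-ins: the "big" model has one extra block, so its torgb layer
# sees 4 input channels, while the "small" model's torgb sees 8.
big = nn.Sequential(OrderedDict([
    ("block1", nn.Conv2d(8, 8, 3, padding=1)),
    ("block2", nn.Conv2d(8, 4, 3, padding=1)),
    ("torgb", nn.Conv2d(4, 3, 1)),
]))
small = nn.Sequential(OrderedDict([
    ("block1", nn.Conv2d(8, 8, 3, padding=1)),
    ("torgb", nn.Conv2d(8, 3, 1)),
]))

skipped, missing = load_matching(small, big.state_dict())
print("skipped from checkpoint:", skipped)
print("left at random init:", missing)
```

In this toy case `torgb.weight` ends up in both lists, which mirrors the situation described above: the checkpoint has no ‘torgb’ weight of the right size, so that layer stays randomly initialised. Note also that a matching shape does not guarantee a matching role; here `torgb.bias` is copied even though it was trained for a different layer.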

This was really helpful. Thank you so much. Can we get the pre-trained discriminator weights in PyTorch as well?

You might be able to reuse the methods from the linked notebook and try to map the pretrained parameters to the discriminator or use this notebook from @tom.

We are using this notebook to convert a pretrained StyleGAN model (trained with the official NVIDIA implementation; we didn’t change any parameters, just used the default parameters and code). However, we get strange outputs like this.

Any idea why this is happening? In earlier posts we saw that someone had the same problem, but playing with the torgb() weights didn’t help…

I am facing the same issue when I tried to convert a smaller model. I have a 128x128 model and my results also come out with the wrong colors. Has anyone managed to fix this issue?