Hello,

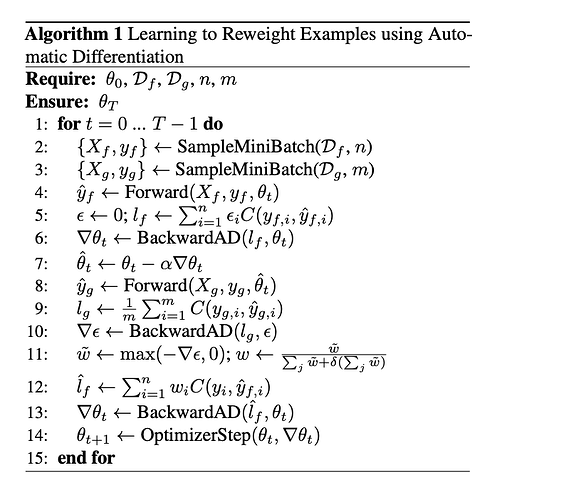

I’m currently implementing a project for my machine learning course, and i have faced an issue in gradient computation based on additional trainable parameters. I’m trying actually to implement the algorithm

with the help also of a github project that i’m taking as reference.

I’m receving “RuntimeError: One of the differentiated Tensors appears to not have been used in the graph. Set allow_unused=True if this is the desired behavior” during step 10 “Computation of gradients w.r.t epsilon”

The piece of my code that implements from step 1 to step 10 is as follows

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.SGD(net.parameters(), lr=gamma, momentum=rho)

for epoch in range(T):

running_loss = 0.0

for idx, (train_x, train_label) in enumerate(trainloader):

net.train()

# initialize a dummy network for the meta learning of the weights

meta_net = LeNet().to(device)

meta_net_optimizer = torch.optim.SGD(meta_net.parameters(), lr=gamma, momentum=rho)

# Step 4 - 5 forward pass to compute the initial weighted loss

# Forward propagation

outputs = meta_net(train_x)

# Error evaluation

train_label = train_label.squeeze()

loss = criterion(outputs,train_label.long())

eps = to_var(torch.zeros(loss.size()))

l_f_meta = torch.sum(loss * eps)

meta_net.zero_grad()

# Line 6 perform a parameter update

grads = torch.autograd.grad(l_f_meta, meta_net.parameters(), create_graph=True)

meta_net_optimizer.step()

# Line 8 - 10 2nd forward pass and getting the gradients with respect to epsilon

y_g_hat = meta_net(test_data_t)

l_g_meta = criterion(y_g_hat,test_labels_t.long())

grad_eps = torch.autograd.grad(l_g_meta, eps, only_inputs=True) # >> Step 10

Could you advise please why i’m receiving that? Supposedly, epsilon is a tensor. Issue is in

grad_eps = torch.autograd.grad(l_g_meta, eps, only_inputs=True). My target is to