The code-

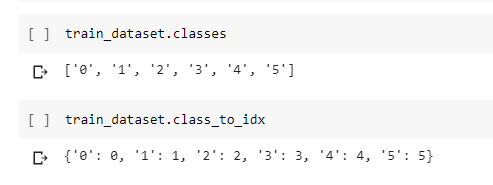

def train_model(n_epoch, data):

Encoder.train()

Decoder.train()

best_acc1 = 0

iter = 0

for epoch in tqdm(range(n_epoch)):

for i, (images, labels) in tqdm(enumerate(data['train'])):

if torch.cuda.is_available():

images = images.cuda().float()

labels = labels.cuda()

else:

images = Variable(images)

labels = Variable(labels)

# print('works')

# Clear gradients w.r.t. parameters

optimizer.zero_grad()

# Forward pass to get output/logits

features = Encoder(images)

features = features.unsqueeze_(1)

outputs = Decoder(features)

# Calculate Loss: softmax --> cross entropy loss

loss = criterion(outputs, labels)

# Getting gradients w.r.t. parameters

loss.backward()

# Updating parameters

optimizer.step()

# print('Optimizer')

iter += 1

if iter % 500 == 0:

# print("iter")

# Calculate Accuracy

accuracy_v = data_accuracy(data, 'valid')

is_best = accuracy_v > best_acc1

best_acc1 = max(accuracy_v, best_acc1)

save_checkpoint({

'epoch': n_epoch,

'iter': iter,

'state_dict_encoder': Encoder.state_dict(),

'state_dict_decoder': Decoder.state_dict(),

'best_acc1': best_acc1,

'optimizer' : optimizer.state_dict(),

}, is_best)

# print("test")

# accuracy_t = data_accuracy(data, 'train')

# Print Loss

print('Iteration: {}. Loss: {}. Accuracy {}'.format(iter, loss.item(), accuracy_v))

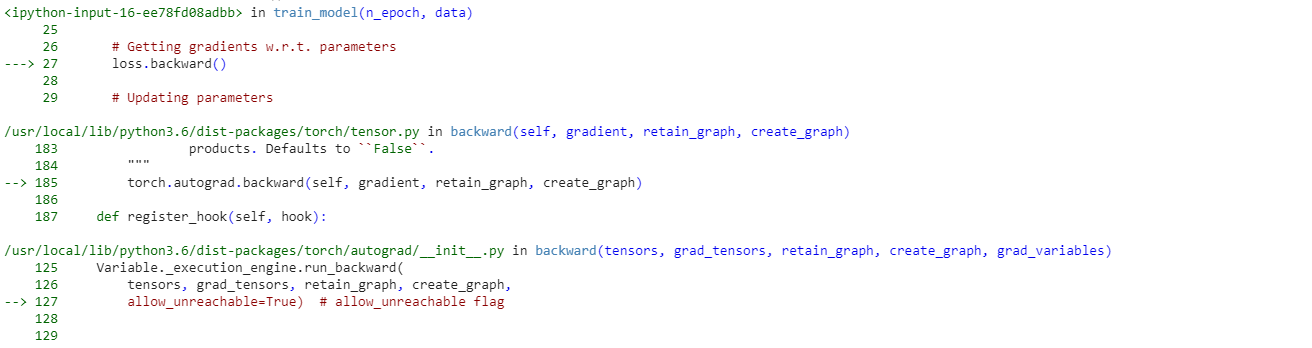

Error message -

RuntimeError: CUDA error: device-side assert triggered

Exception raised from launch_vectorized_kernel at /pytorch/aten/src/ATen/native/cuda/CUDALoops.cuh:146 (most recent call first):

frame #0: c10::Error::Error(c10::SourceLocation, std::string) + 0x42 (0x7fd6a31681e2 in /usr/local/lib/python3.6/dist-packages/torch/lib/libc10.so)

frame #1: void at::native::gpu_kernel_impl<__nv_hdl_wrapper_t<false, false, __nv_dl_tag<void (*)(at::TensorIterator&, c10::Scalar), &at::native::add_kernel_cuda, 4u>, float (float, float), float> >(at::TensorIterator&, __nv_hdl_wrapper_t<false, false, __nv_dl_tag<void (*)(at::TensorIterator&, c10::Scalar), &at::native::add_kernel_cuda, 4u>, float (float, float), float> const&) + 0xe03 (0x7fd6a4f37933 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cuda.so)

frame #2: void at::native::gpu_kernel<__nv_hdl_wrapper_t<false, false, __nv_dl_tag<void (*)(at::TensorIterator&, c10::Scalar), &at::native::add_kernel_cuda, 4u>, float (float, float), float> >(at::TensorIterator&, __nv_hdl_wrapper_t<false, false, __nv_dl_tag<void (*)(at::TensorIterator&, c10::Scalar), &at::native::add_kernel_cuda, 4u>, float (float, float), float> const&) + 0x11b (0x7fd6a4f3934b in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cuda.so)

frame #3: void at::native::gpu_kernel_with_scalars<__nv_hdl_wrapper_t<false, false, __nv_dl_tag<void (*)(at::TensorIterator&, c10::Scalar), &at::native::add_kernel_cuda, 4u>, float (float, float), float> >(at::TensorIterator&, __nv_hdl_wrapper_t<false, false, __nv_dl_tag<void (*)(at::TensorIterator&, c10::Scalar), &at::native::add_kernel_cuda, 4u>, float (float, float), float> const&) + 0xeb (0x7fd6a4f395bb in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cuda.so)

frame #4: <unknown function> + 0x192a486 (0x7fd6a4efb486 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cuda.so)

frame #5: at::native::add_kernel_cuda(at::TensorIterator&, c10::Scalar) + 0x1a (0x7fd6a4efc1fa in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cuda.so)

frame #6: <unknown function> + 0xbce25e (0x7fd6dad8b25e in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #7: at::native::add_out(at::Tensor&, at::Tensor const&, at::Tensor const&, c10::Scalar) + 0x71 (0x7fd6dad81b61 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #8: <unknown function> + 0xf3b932 (0x7fd6a450c932 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cuda.so)

frame #9: <unknown function> + 0x2e9fad8 (0x7fd6dd05cad8 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #10: <unknown function> + 0x3377258 (0x7fd6dd534258 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #11: torch::autograd::AccumulateGrad::apply(std::vector<at::Tensor, std::allocator<at::Tensor> >&&) + 0x38a (0x7fd6dd535aaa in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #12: <unknown function> + 0x3375bb7 (0x7fd6dd532bb7 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #13: torch::autograd::Engine::evaluate_function(std::shared_ptr<torch::autograd::GraphTask>&, torch::autograd::Node*, torch::autograd::InputBuffer&, std::shared_ptr<torch::autograd::ReadyQueue> const&) + 0x1400 (0x7fd6dd52e400 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #14: torch::autograd::Engine::thread_main(std::shared_ptr<torch::autograd::GraphTask> const&) + 0x451 (0x7fd6dd52efa1 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #15: torch::autograd::Engine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x89 (0x7fd6dd527119 in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_cpu.so)

frame #16: torch::autograd::python::PythonEngine::thread_init(int, std::shared_ptr<torch::autograd::ReadyQueue> const&, bool) + 0x4a (0x7fd6eacc734a in /usr/local/lib/python3.6/dist-packages/torch/lib/libtorch_python.so)

frame #17: <unknown function> + 0xbd6df (0x7fd7076806df in /usr/lib/x86_64-linux-gnu/libstdc++.so.6)

frame #18: <unknown function> + 0x76db (0x7fd7087626db in /lib/x86_64-linux-gnu/libpthread.so.0)

frame #19: clone + 0x3f (0x7fd708a9ba3f in /lib/x86_64-linux-gnu/libc.so.6)

My code runs fine till like 366 iteration. But after that this error is shown? Any idea what this is? I can’t debug this cause the code seem to run fine untill a certain iteration.

(I am running it in google colab)