Hey.

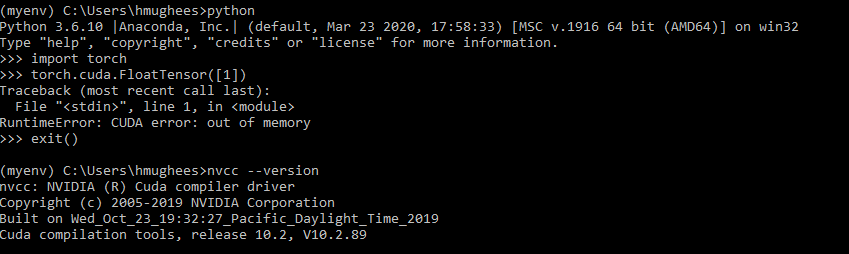

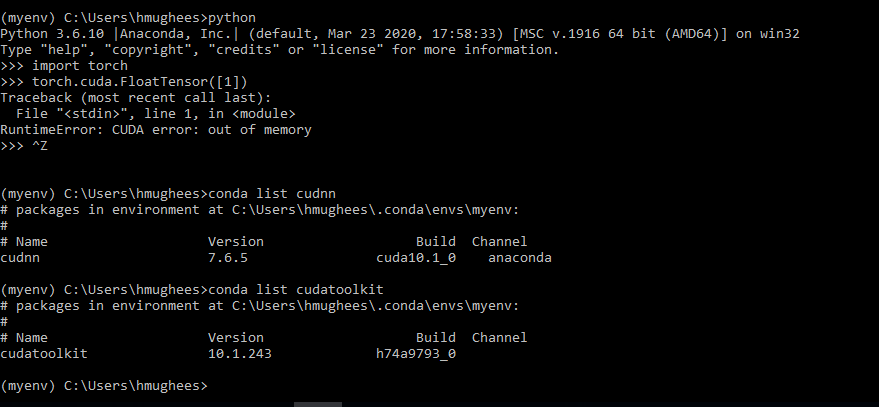

I started working on a new virtual system at my workplace and completed all the necessary installations. However, I am currently unable to create a simple CUDA variable, and I don’t know which requirement I am missing.

I am using Windows Server 2016 and have just installed the latest official PyTorch release.

The screenshots are attached above; any guidance is highly appreciated.

Thanks a lot.