I know this has been asked before (ValueError: Expected target size (32, 7), got torch.Size([32])), but that thread didn't solve my problem.

When calling `torch.nn.CrossEntropyLoss()`, I get `RuntimeError: Expected target size [2, 30000], got [2]`.

In that thread, a comment explains that "`nn.CrossEntropyLoss` expects a model output in the shape `[batch_size, nb_classes, *additional_dims]` and a target in the shape `[batch_size, *additional_dims]`". If that's the case, I'm not sure why my labels only have the batch dimension, `[2]`.
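A minimal sketch of the quoted shape rule using random tensors (the sizes are arbitrary, not taken from the model below). 2-D logits take a 1-D target, 3-D logits take a target without the class dimension, and 3-D logits with a 1-D target fail the same way as in this post. Newer PyTorch versions raise this as a `RuntimeError` and older ones as a `ValueError`, so the sketch catches both.

```python
import torch

loss_fn = torch.nn.CrossEntropyLoss()

# Case 1: input [batch_size, nb_classes], target [batch_size]
logits_2d = torch.randn(2, 26)             # 2 samples, 26 classes
target_1d = torch.tensor([3, 17])          # one class index per sample
loss_2d = loss_fn(logits_2d, target_1d)    # scalar loss

# Case 2: input [batch_size, nb_classes, d1], target [batch_size, d1]
logits_3d = torch.randn(2, 26, 7)
target_2d = torch.randint(0, 26, (2, 7))
loss_3d = loss_fn(logits_3d, target_2d)    # also a scalar loss

# Case 3: 3-D input with a 1-D target.
# Dim 1 is read as the class dimension, so a [2, 4, 5] input expects a [2, 5] target.
try:
    loss_fn(torch.randn(2, 4, 5), torch.tensor([0, 1]))
    mismatch_error = None
except (RuntimeError, ValueError) as err:  # older PyTorch raised ValueError
    mismatch_error = str(err)

print(loss_2d.shape, loss_3d.shape)
print(mismatch_error)
```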

This is the model (I have 26 output classes):

```python
import torch
from transformers import AutoTokenizer, AutoModelForMaskedLM

model = AutoModelForMaskedLM.from_pretrained("asafaya/albert-base-arabic")
hidden_size = model.config.hidden_size
model.classifier = torch.nn.Linear(in_features=hidden_size, out_features=26)
```

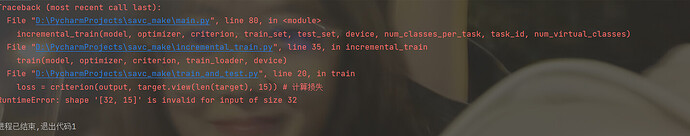
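A toy sketch of where a `[batch_size, seq_len, vocab_size]` logits shape (like the `[2, 256, 30000]` reported here) comes from. `ToyMaskedLM` is hypothetical, not the real ALBERT class: its `forward()` applies a per-token head that scores every vocabulary entry at every position. It also shows general PyTorch behaviour: assigning a new `nn.Linear` to an attribute registers it as a submodule, but the output only changes if `forward()` actually calls it. Whether the real masked-LM model's `forward()` uses a `classifier` attribute is an assumption to check against the transformers source. The first-token pooling at the end is just one illustrative way to get one score vector per sequence.

```python
import torch
from torch import nn


class ToyMaskedLM(nn.Module):
    """Hypothetical stand-in for a masked-LM model (not the real ALBERT code)."""

    def __init__(self, vocab_size: int, hidden_size: int):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, hidden_size)
        self.mlm_head = nn.Linear(hidden_size, vocab_size)

    def forward(self, input_ids: torch.Tensor) -> torch.Tensor:
        hidden = self.embed(input_ids)   # [batch, seq_len, hidden]
        return self.mlm_head(hidden)     # [batch, seq_len, vocab]: one score per token per position


model = ToyMaskedLM(vocab_size=100, hidden_size=16)
model.classifier = nn.Linear(16, 26)     # registered as a submodule, but forward() never calls it

input_ids = torch.randint(0, 100, (2, 256))
logits = model(input_ids)
print(logits.shape)                      # torch.Size([2, 256, 100])

# Sequence-level scores: pool one vector per sequence, then project to 26 classes
hidden = model.embed(input_ids)          # [2, 256, 16]
pooled = hidden[:, 0]                    # first-token representation, [2, 16]
class_logits = model.classifier(pooled)  # [2, 26], one row per label in a [2] target
print(class_logits.shape)                # torch.Size([2, 26])
```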

I get the error while training, when calling CrossEntropyLoss:

```python
import torch
from torch.optim import AdamW  # assumption: the post does not show which AdamW was imported
from transformers import get_linear_schedule_with_warmup

# assumption: device is defined elsewhere in the original script
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)

# Set the model to train mode
model.train()

# Steps per epoch: 46416 / 64 = 725.25
# num_training_steps: 36283 (roughly 725 steps/epoch * 50 epochs)
# num_warmup_steps: 10% of 36283, about 3628
num_warmup_steps = 3628
num_training_steps = 36283
num_epochs = 50

# Define the optimizer and the scheduler
optimizer = AdamW(model.parameters(), lr=0.001)
scheduler = get_linear_schedule_with_warmup(
    optimizer, num_warmup_steps=num_warmup_steps, num_training_steps=num_training_steps
)

# Define the loss function
loss_fn = torch.nn.CrossEntropyLoss()

# Fine-tune the model
for epoch in range(num_epochs):
    for step, batch in enumerate(train_dataloader):
        # Unpack the batch and move every tensor to the model's device
        input_ids, attention_masks, labels = batch
        input_ids = input_ids.to(device)
        attention_masks = attention_masks.to(device)
        labels = labels.to(device)

        # Forward pass
        logits = model(input_ids, attention_mask=attention_masks).logits

        # Compute the loss (here is where the error happens)
        loss = loss_fn(logits, labels)

        # Backward pass and optimization
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        scheduler.step()
```
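The step counts in the comments can also be computed rather than hard-coded. This sketch assumes 46416 training examples and a batch size of 64, as in those comments, and counts a final partial batch as a step (the `drop_last=False` default of `DataLoader`). For a map-style dataset, `len(train_dataloader)` should give the same per-epoch count directly.

```python
import math

num_examples = 46416   # assumption: training set size from the comments
batch_size = 64        # assumption: batch size from the comments
num_epochs = 50

# A partial last batch still produces an optimizer step, so round up
steps_per_epoch = math.ceil(num_examples / batch_size)
num_training_steps = steps_per_epoch * num_epochs
num_warmup_steps = int(0.1 * num_training_steps)  # 10% warmup

print(steps_per_epoch, num_training_steps, num_warmup_steps)  # 726 36300 3630
```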

The `logits` shape is `torch.Size([2, 256, 30000])` and the `labels` shape is `torch.Size([2])`.
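The quoted rule can be written as a small helper to see which target shape these logits imply. `expected_target_shape` is hypothetical, not a PyTorch function. With class-index targets, `CrossEntropyLoss` drops dimension 1 of the logits, so `[2, 256, 30000]` is read as 256 classes at each of 30000 positions, which matches the expected target size `[2, 30000]` in the error. The helper does not cover the alternative of passing class probabilities, where the target has the same shape as the input.

```python
import torch


def expected_target_shape(logits_shape: torch.Size) -> tuple:
    """Hypothetical helper: class-index target shape CrossEntropyLoss expects.

    Dimension 1 of the logits is the class dimension, so it is dropped.
    """
    if len(logits_shape) == 1:  # unbatched input [C] -> scalar target
        return ()
    return (logits_shape[0], *logits_shape[2:])


print(expected_target_shape(torch.Size([2, 256, 30000])))  # (2, 30000)
print(expected_target_shape(torch.Size([2, 26])))          # (2,)
```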

Thank you!