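import random

import numpy as np
import torch
import torch.nn as nn
import torch.optim as optim
import wandb
from scipy.spatial import distance  # provides distance.cosine


class Generator(nn.Module):
    """Maps a noised NOISE_DIM-dimensional feature vector to a 512-dimensional one."""

    def __init__(self):
        super(Generator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(NOISE_DIM, 4096),
            nn.LeakyReLU(0.2),
            nn.Linear(4096, 8192),
            nn.LeakyReLU(0.2),
            nn.Linear(8192, 4096),
            nn.LeakyReLU(0.2),
            nn.Linear(4096, 512),
            nn.LeakyReLU(0.2)
        )

    def forward(self, x):
        return self.model(x)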

class Discriminator(nn.Module):
    """Scores a feature vector with a single real/fake probability."""

    def __init__(self):
        super(Discriminator, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(NOISE_DIM, 4096),
            nn.LeakyReLU(0.2),
            nn.Linear(4096, 8192),
            nn.LeakyReLU(0.2),
            nn.Linear(8192, 4096),
            nn.LeakyReLU(0.2),
            nn.Linear(4096, 1),
            # BCELoss expects probabilities in [0, 1], hence Sigmoid rather than ReLU
            nn.Sigmoid()
        )

    def forward(self, x):
        return self.model(x)

class Classifier(nn.Module):
    """Predicts 64 independent label probabilities for a feature vector."""

    def __init__(self):
        super(Classifier, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(NOISE_DIM, 4096),
            nn.LeakyReLU(0.2),
            nn.Linear(4096, 8192),
            nn.LeakyReLU(0.2),
            nn.Linear(8192, 4096),
            nn.LeakyReLU(0.2),
            nn.Linear(4096, 64),
            nn.Sigmoid()
        )

    def forward(self, x):
        return self.model(x)

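# Loss functions

cross_entropy = torch.nn.BCELoss()


def cos_dis_arr(a, b):
    """Mean cosine distance between matching rows of a and b.

    The rows are detached and converted to NumPy, so the result is a NumPy
    scalar and contributes no gradient.
    """
    dis_ = []
    for i in range(len(a)):
        d = distance.cosine(a[i].detach().numpy(), b[i].detach().numpy())
        dis_.append(d)
    return np.array(dis_).mean()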
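Since `cos_dis_arr` goes through `.detach().numpy()`, the distance term cannot push any gradient back into the generator. If the goal is for that term to influence training, one option is to stay inside PyTorch. The following is only a sketch under that assumption; `cos_dis_torch` is a hypothetical name, not something from the code above.

```python
import torch
import torch.nn.functional as F


def cos_dis_torch(a, b):
    """Mean cosine distance between matching rows of two (N, D) tensors."""
    # cosine_similarity compares a[i] with b[i] along dim=1 and returns shape (N,)
    return (1.0 - F.cosine_similarity(a, b, dim=1)).mean()


real = torch.tensor([[1.0, 0.0], [0.0, 2.0]])
fake = torch.tensor([[0.0, 1.0], [0.0, 1.0]], requires_grad=True)

dist = cos_dis_torch(real, fake)
dist.backward()  # gradients reach `fake`, unlike the NumPy-based version
```

The first pair of rows is orthogonal and the second is parallel, so the mean distance here is 0.5, and `fake.grad` is populated after `backward()`.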

def discriminator_loss(real_features, generated_features, real_output, fake_output):
    # BCELoss takes (input, target): predictions first, labels second
    real_loss = cross_entropy(real_output, torch.ones_like(real_output))
    fake_loss = cross_entropy(fake_output, torch.zeros_like(fake_output))
    total_loss = real_loss + fake_loss  # + 0.01 * cos_dis_arr(real_features, generated_features)
    # print(total_loss.shape)
    return total_loss

def classfier_loss(y_true, y_pred):
    # BCELoss takes (input, target): predictions first, labels second
    return cross_entropy(y_pred.double(), y_true.double())
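One thing that is easy to trip over when porting from Keras: `torch.nn.BCELoss` is called as `loss(input, target)`, with the predicted probabilities first and the labels second, which is the reverse of the `(y_true, y_pred)` convention. A small check with made-up values, just to illustrate that the order matters:

```python
import math

import torch

bce = torch.nn.BCELoss()

pred = torch.tensor([0.5, 0.5])    # probabilities, e.g. the output of a Sigmoid
target = torch.tensor([1.0, 0.0])  # labels

loss = bce(pred, target)     # input first, target second
swapped = bce(target, pred)  # arguments reversed: runs, but is a different quantity

expected = math.log(2.0)     # -log(0.5) for each element
```

With the correct order the loss equals `log(2)`. The reversed call does not raise here, it just returns a much larger number that no longer measures how good the predictions are.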

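def generator_loss(real_features, generated_features, y_true, y_pred):
    # print(cross_entropy(tf.ones_like(fake_output), fake_output).shape)
    # Classification term; BCELoss takes (input, target)
    a = cross_entropy(y_pred.double(), y_true.double())
    # Feature-distance term (a NumPy scalar, so it adds no gradient)
    b = cos_dis_arr(real_features, generated_features)
    # Adversarial term, computed on generated_features as posted. BCELoss expects
    # its first argument to be a prediction in [0, 1], which would normally be the
    # discriminator's output for the generated batch.
    c = cross_entropy(torch.ones_like(generated_features), generated_features)
    wandb.log({"cross_entrophy": a, "distance": b, "discriminator_loss": c})
    return a + c + b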

# Initialize models and optimizers

generator = Generator()
discriminator = Discriminator()
classifier = Classifier()

generator_optimizer = optim.Adam(generator.parameters(), lr=1e-4, betas=(BETA1, BETA2))
discriminator_optimizer = optim.Adam(discriminator.parameters(), lr=1e-4, betas=(BETA1, BETA2))
classifier_optimizer = optim.Adam(classifier.parameters(), lr=1e-4, betas=(BETA1, BETA2))

def train_step(images, llb):

# 1 - Create noise tensors

noise2 = torch.normal(0,0.2,(BATCH_SIZE, noise_dim))

# 2 - Generate images and calculate loss values

generator_optimizer.zero_grad()

im__=torch.add(images,noise2)

generated_images = generator(im__)

gen_classifier = classifier(generated_images)

real_classifier = classifier(images)

real_output = discriminator(images)

fake_output = discriminator(generated_images)

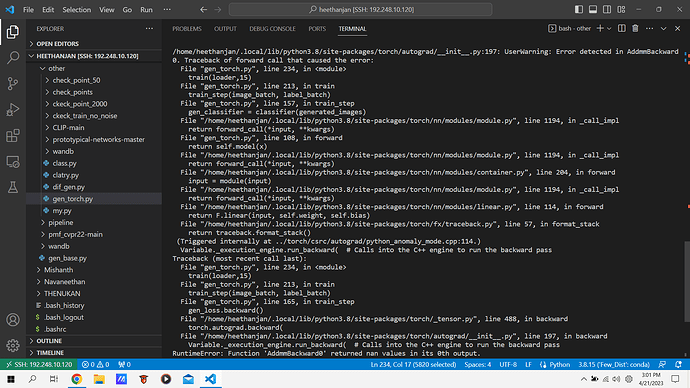

gen_loss = generator_loss(images, generated_images, llb, gen_classifier)

print("#####genLoss#######")

print(gen_loss)

gen_loss.backward()

generator_optimizer.step()

################

discriminator_optimizer.zero_grad()

im__=torch.add(images,noise2)

generated_images = generator(im__)

gen_classifier = classifier(generated_images)

real_classifier = classifier(images)

real_output = discriminator(images)

fake_output = discriminator(generated_images)

disc_loss = discriminator_loss(images, generated_images, real_output, fake_output)

disc_loss.backward()

discriminator_optimizer.step()

############

clss_loss = classfier_loss(llb, real_classifier)

gen_see = classfier_loss(llb, gen_classifier)

wandb.log({"gen_loss": gen_loss, "gen_see": gen_see, "clas_loss": clss_loss, "dic_loss_disc": disc_loss})

# 3 - Compute gradients and update weights for generator, discriminator and classifier

classifier_optimizer.zero_grad()

clss_loss.backward()

classifier_optimizer.step()
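In the discriminator part of `train_step`, the generated batch is fed to the discriminator with its graph still attached, so `disc_loss.backward()` also computes gradients for the generator. A common pattern is to detach the generated batch for that step. The sketch below uses tiny stand-in networks (the names and sizes are illustrative, not the models above) to show the effect:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Tiny stand-ins for the real networks (sizes are illustrative only)
gen = nn.Linear(8, 8)
disc = nn.Sequential(nn.Linear(8, 1), nn.Sigmoid())
bce = nn.BCELoss()

real = torch.randn(4, 8)
fake = gen(real + torch.normal(0, 0.2, real.shape))

real_out = disc(real)
fake_out = disc(fake.detach())  # detach: the discriminator loss must not reach the generator

disc_loss = bce(real_out, torch.ones_like(real_out)) + bce(fake_out, torch.zeros_like(fake_out))
disc_loss.backward()

gen_has_grad = any(p.grad is not None for p in gen.parameters())
disc_has_grad = all(p.grad is not None for p in disc.parameters())
```

After the backward pass only the discriminator's parameters carry gradients; the generator's `.grad` fields stay `None`, so its optimizer state is untouched by this step.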

import time

# Feature generator


def syn_features(model, input_feature, num_of_sin_features):
    """Generate num_of_sin_features synthetic features from noised copies of input_feature."""
    new_features = []
    t_in = torch.reshape(input_feature, (1, 512))
    model.eval()
    for i in range(num_of_sin_features):
        noise22 = torch.normal(0, 0.2, (1, 512))
        f_2 = torch.add(t_in, noise22)
        # noise = tf.random.normal([1, 512])
        # inp = tf.concat((f_2, noise), 1)
        fe = model(f_2)
        new_features.append(fe)
    return new_features
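`syn_features` runs the model once per sample and keeps the autograd graph for every output. For pure inference, a batched variant under `torch.no_grad()` may be cheaper. This is a sketch, not a drop-in replacement: `syn_features_batched` and its `std` parameter are hypothetical names, and it returns one stacked tensor instead of a list.

```python
import torch
import torch.nn as nn


def syn_features_batched(model, input_feature, num_features, std=0.2):
    """Return a (num_features, D) tensor of outputs for noised copies of input_feature."""
    model.eval()
    # Repeat the single feature vector so all noised copies go through in one pass
    base = input_feature.reshape(1, -1).repeat(num_features, 1)
    noisy = base + torch.normal(0, std, base.shape)
    with torch.no_grad():  # inference only, so skip building the autograd graph
        return model(noisy)


toy_model = nn.Linear(512, 512)  # stand-in for the trained generator
out = syn_features_batched(toy_model, torch.randn(512), 5)
```

Here `out` has shape `(5, 512)` and does not require grad, so it can be converted to NumPy directly.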

def train(loader, epochs):
    for epoch in range(epochs):
        start = time.time()

        for image_batch, label_batch in loader:
            train_step(image_batch, label_batch)

        if (epoch + 1) % 3 == 0:
            # PyTorch has no Checkpoint object; weights are usually saved with
            # torch.save(model.state_dict(), path)
            pass

        print('Time for epoch {} is {} sec'.format(epoch + 1, time.time() - start))

        print("small Test")
        f = random.choice(features)
        # noise = torch.randn([1, 512])
        noise22 = torch.normal(0, 0.2, (1, 512))
        f_ = f.reshape((1, 512))
        f_2 = f_ + noise22
        # inp = torch.cat((f_, noise), 1)
        generator.eval()
        gen = generator(f_2)
        t_in = gen.reshape((512,))
        # SciPy works on NumPy arrays, so detach the generator output first
        d = distance.cosine(f, t_in.detach().numpy())
        print(f)
        print(gen)
        print(d)


train(loader, 15)
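The every-third-epoch branch in `train` is still a placeholder (`pass`). A minimal way to fill it in, assuming plain `state_dict` checkpoints are enough, could look like the sketch below. The stand-in model, the temporary directory and the file name are all illustrative.

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(4, 2)  # stand-in for generator / discriminator / classifier

ckpt_dir = tempfile.mkdtemp()
ckpt_path = os.path.join(ckpt_dir, "model_epoch_3.pt")  # hypothetical file name

# Save only the parameters (the state_dict), not the whole module object
torch.save(model.state_dict(), ckpt_path)

# Restore into a freshly constructed module with the same architecture
restored = nn.Linear(4, 2)
restored.load_state_dict(torch.load(ckpt_path))

same = all(torch.equal(p, q) for p, q in zip(model.parameters(), restored.parameters()))
```

In the training loop the same `torch.save` call would be made once per model (and, if training is to be resumed, once per optimizer via `optimizer.state_dict()`).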