Yes, I still get the error with your code snippet.

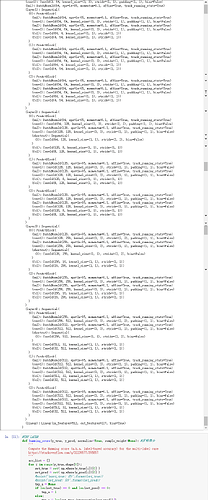

And here is the model now:

    SENet(
      (conv1): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (layer1): Sequential(
        (0): PreActBlock(
          (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (fc1): Conv2d(64, 4, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(4, 64, kernel_size=(1, 1), stride=(1, 1))
        )
        (1): PreActBlock(
          (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (fc1): Conv2d(64, 4, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(4, 64, kernel_size=(1, 1), stride=(1, 1))
        )
      )
      (layer2): Sequential(
        (0): PreActBlock(
          (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (shortcut): Sequential(
            (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
          )
          (fc1): Conv2d(128, 8, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(8, 128, kernel_size=(1, 1), stride=(1, 1))
        )
        (1): PreActBlock(
          (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (fc1): Conv2d(128, 8, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(8, 128, kernel_size=(1, 1), stride=(1, 1))
        )
      )
      (layer3): Sequential(
        (0): PreActBlock(
          (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (shortcut): Sequential(
            (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
          )
          (fc1): Conv2d(256, 16, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(16, 256, kernel_size=(1, 1), stride=(1, 1))
        )
        (1): PreActBlock(
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (fc1): Conv2d(256, 16, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(16, 256, kernel_size=(1, 1), stride=(1, 1))
        )
      )
      (layer4): Sequential(
        (0): PreActBlock(
          (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (shortcut): Sequential(
            (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
          )
          (fc1): Conv2d(512, 32, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(32, 512, kernel_size=(1, 1), stride=(1, 1))
        )
        (1): PreActBlock(
          (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
          (fc1): Conv2d(512, 32, kernel_size=(1, 1), stride=(1, 1))
          (fc2): Conv2d(32, 512, kernel_size=(1, 1), stride=(1, 1))
        )
      )
      (linear): Linear(in_features=512, out_features=10, bias=True)
    )
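
In case it helps when reading the printout: a rough sanity check of the squeeze-and-excitation layers (`fc1`/`fc2`) in each stage. This is only a sketch. The helper names `conv2d_params` and `se_params` are made up for illustration, the shapes are copied from the printout, and it assumes `fc1`/`fc2` keep the default `Conv2d` bias, since the printout does not show `bias=False` for them.

```python
# Shapes are copied from the model printout; helper names are hypothetical.

def conv2d_params(c_in, c_out, k, bias):
    """Parameter count of a Conv2d layer with a square k x k kernel."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def se_params(channels, squeezed):
    """Parameters of the fc1/fc2 pair (1x1 convs, assumed to have a bias)."""
    fc1 = conv2d_params(channels, squeezed, 1, True)
    fc2 = conv2d_params(squeezed, channels, 1, True)
    return fc1 + fc2


# (block channels, squeezed width) for layer1..layer4, as printed
stages = [(64, 4), (128, 8), (256, 16), (512, 32)]

for channels, squeezed in stages:
    ratio = channels // squeezed
    print(f"channels={channels} squeezed={squeezed} "
          f"ratio={ratio} se_params={se_params(channels, squeezed)}")
```

Every stage squeezes by the same factor of 16, so the `fc1`/`fc2` shapes in the printout are at least consistent with each other.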