I’ve read all the similar topics here, but can’t figure this out. How do I change my labels (y_train) to the correct dimensions?

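import torch
import torch.nn.functional as F
from torchvision import transforms
from torchvision.datasets import ImageFolder

data = ImageFolder(data_dir, transform=transforms.Compose([transforms.Resize((224, 224)), transforms.ToTensor()]))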

trainloader = torch.utils.data.DataLoader(data, batch_size=3600,
                                          shuffle=True, num_workers=2)

dataiter = iter(trainloader)

x_train, y_train = next(dataiter)

print(x_train.size())

print(y_train.size())

torch.Size([3600, 3, 224, 224])

torch.Size([3600])
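For reference, `torch.nn.CrossEntropyLoss` in its usual form takes logits of shape `(N, C)` and integer class targets of shape `(N,)`, so a label tensor of size `[3600]` already has the layout the loss expects. A minimal sketch, with small made-up sizes (8 samples, 10 classes) and random tensors standing in for real data:

```python
import torch

# Hypothetical small sizes for illustration: 8 samples, 10 classes.
N, C = 8, 10
logits = torch.randn(N, C)            # model output: one score per class
targets = torch.randint(0, C, (N,))   # class indices (int64), one per sample

loss = torch.nn.CrossEntropyLoss()(logits, targets)
print(logits.shape, targets.shape, loss.shape)
```

If this reading is right, the shape to look at is that of the model output rather than that of `y_train`.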

class Net(torch.nn.Module):

    def __init__(self):
        super().__init__()
        # here we set up the tensors......
        self.layer1 = torch.nn.Linear(224, 12)
        self.layer2 = torch.nn.Linear(12, 10)

    def forward(self, x):
        # here we define the (forward) computational graph,
        # in terms of the tensors, and elt-wise non-linearities
        x = F.relu(self.layer1(x))
        x = self.layer2(x)
        return x

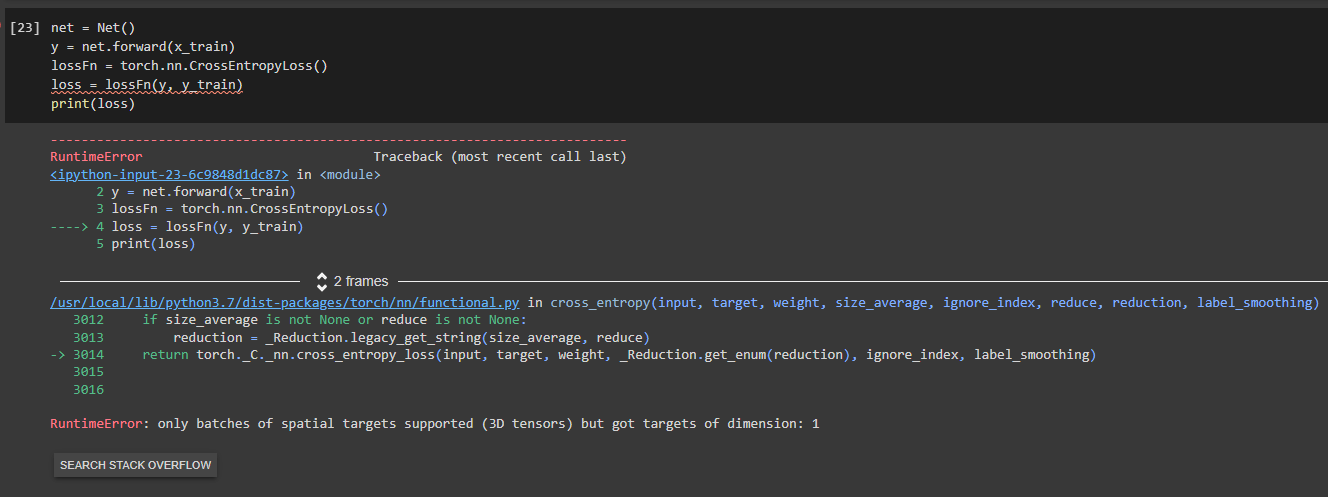

net = Net()

y = net(x_train)

lossFn = torch.nn.CrossEntropyLoss()

loss = lossFn(y, y_train)

print(loss)
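One likely cause, assuming the error is raised by the loss call: `torch.nn.Linear(224, 12)` acts only on the last dimension, so a `[3600, 3, 224, 224]` input comes out of the network as `[3600, 3, 224, 10]`, which does not pair with labels of size `[3600]`. In that case the labels are probably fine, and flattening each image before the first layer gives `[N, 10]` logits. A minimal sketch, where `FlatNet` is a hypothetical variant of `Net` and a small random batch stands in for `x_train` and `y_train`:

```python
import torch
import torch.nn.functional as F


class FlatNet(torch.nn.Module):
    """Hypothetical variant of Net that flattens each image first."""

    def __init__(self, in_features=3 * 224 * 224, num_classes=10):
        super().__init__()
        self.layer1 = torch.nn.Linear(in_features, 12)
        self.layer2 = torch.nn.Linear(12, num_classes)

    def forward(self, x):
        x = torch.flatten(x, start_dim=1)  # [N, 3, 224, 224] -> [N, 150528]
        x = F.relu(self.layer1(x))
        return self.layer2(x)              # [N, num_classes]


# Small random batch standing in for x_train / y_train (4 samples, not 3600).
x_demo = torch.randn(4, 3, 224, 224)
y_demo = torch.randint(0, 10, (4,))

net = FlatNet()
logits = net(x_demo)
loss = torch.nn.CrossEntropyLoss()(logits, y_demo)
print(logits.shape)  # torch.Size([4, 10])
print(loss.item())
```

The value `num_classes=10` is carried over from the original `layer2`; it should match the number of class folders that `ImageFolder` found (`len(data.classes)`).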