Hi all

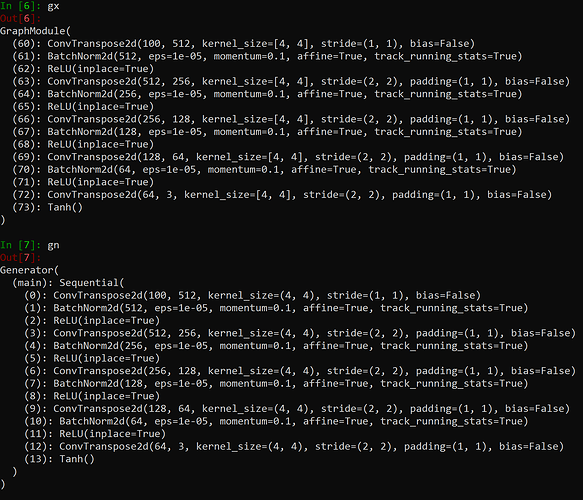

I have two different instantiations of a model, gn and gx, both consisting of following operators:

I instantiated gx with exactly the same parameters as gn (the reference model).

What I did:

- I passed the same input through both models, operator by operator, and saved the intermediate results in lists. (Whether a model is an nn.Sequential or a custom class shouldn’t matter, right? A hint that it doesn’t: another model works exactly in this setting.)

- the results differ starting from the fifth-to-last layer (the ConvTranspose2d)

- the parameters of all operators are the same; I checked them using:

```python
import torch

out_x = list(gx.parameters())
out_n = list(gn.parameters())

for i in range(len(out_x)):
    # exact element-wise equality, then equality within allclose's default tolerances
    print(torch.all(out_x[i].eq(out_n[i])).item(), torch.allclose(out_x[i], out_n[i]))
```

They all print True (the parameters are identical).

- storing the intermediate results of each layer in out_x (for gx) and out_n (for gn) and comparing them, I get the following summed absolute differences per layer:

```
In [12]: [torch.sum(torch.abs(out_x[i] - out_n[i])).item() for i in range(len(out_x))]
Out[12]:
[0.0,
 0.0,
 0.0,
 0.0,
 0.0,
 0.0,
 0.0,
 0.0,
 0.0,
 0.0,
 0.00011764606460928917,
 0.00026547431480139494,
 0.00026547431480139494,
 0.0001205947482958436,
 0.00011362379882484674]
```
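The operator-by-operator comparison described above can also be done without modifying either model, by registering forward hooks on every leaf module and recording what each one returns. This is a minimal sketch, assuming every leaf module returns a single tensor and both models call their leaf operators in the same order; `capture_leaf_outputs`, `first_divergence`, the `atol` value and the toy layers are hypothetical, not the models from this post. It reports the maximum absolute difference per layer instead of the summed absolute difference used above.

```python
import copy

import torch
import torch.nn as nn


def capture_leaf_outputs(model: nn.Module, x: torch.Tensor) -> list:
    """Run `model` on `x` and return the output of every leaf module in call order.

    Assumes every leaf module returns a single tensor.
    """
    outputs = []
    handles = []
    for module in model.modules():
        if len(list(module.children())) == 0:  # leaf operators only (Conv, LeakyReLU, ...)
            handles.append(module.register_forward_hook(
                lambda mod, inputs, out: outputs.append(out.detach().clone())
            ))
    try:
        with torch.no_grad():
            model(x)
    finally:
        for handle in handles:  # always remove the hooks so the model is left unchanged
            handle.remove()
    return outputs


def first_divergence(outs_a: list, outs_b: list, atol: float = 1e-6):
    """Return (index, max_abs_diff) for the first differing output, or None if all match."""
    for i, (a, b) in enumerate(zip(outs_a, outs_b)):
        if a.shape != b.shape:
            return i, float("inf")
        diff = (a - b).abs().max().item()
        if diff > atol:
            return i, diff
    return None


# Hypothetical toy model; gx is an exact copy of gn.
torch.manual_seed(0)
gn = nn.Sequential(
    nn.Conv2d(1, 4, 3, padding=1),
    nn.LeakyReLU(0.2),
    nn.ConvTranspose2d(4, 1, 3, stride=2, padding=1, output_padding=1),
)
gx = copy.deepcopy(gn)
x = torch.randn(1, 1, 8, 8)
print(first_divergence(capture_leaf_outputs(gn, x), capture_leaf_outputs(gx, x)))
```

For exact copies this prints `None`; changing something that is not a parameter, for example `gx[1].negative_slope = 0.1`, makes it report index 1 as the first divergent layer.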

Does anyone have a clue what the reason could be? I don’t understand why the outputs suddenly start to differ near the end: if the parameters were the cause, wouldn’t the results differ earlier?
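One thing the parameter check in the list above cannot see: `parameters()` only yields learnable tensors. Buffers such as BatchNorm's `running_mean`/`running_var` are not included, and the train/eval mode is not a tensor at all, so identical parameters do not by themselves rule out a state difference if the model contains layers like BatchNorm or Dropout. A minimal sketch of a broader check, assuming both models register their submodules in the same order; `leaf_modules`, `compare_state` and the toy models are hypothetical helpers, not from this post.

```python
import copy

import torch
import torch.nn as nn


def leaf_modules(model: nn.Module) -> list:
    """Modules without children, in registration order."""
    return [m for m in model.modules() if len(list(m.children())) == 0]


def compare_state(model_a: nn.Module, model_b: nn.Module) -> list:
    """List mismatches in state_dict tensors (parameters AND buffers) and in train/eval mode."""
    problems = []
    sd_a, sd_b = model_a.state_dict(), model_b.state_dict()
    if len(sd_a) != len(sd_b):
        problems.append(("entry count differs", f"{len(sd_a)} vs {len(sd_b)}"))
    # Compare by position rather than by key, so an nn.Sequential ("0.weight") and a
    # custom class ("conv.weight") with the same layer order can still be compared.
    for (key_a, a), (key_b, b) in zip(sd_a.items(), sd_b.items()):
        if a.dtype != b.dtype or a.shape != b.shape or not torch.equal(a, b):
            problems.append(("tensor differs", f"{key_a} / {key_b}"))
    # BatchNorm and Dropout, for example, behave differently in train() and eval() mode.
    for i, (mod_a, mod_b) in enumerate(zip(leaf_modules(model_a), leaf_modules(model_b))):
        if mod_a.training != mod_b.training:
            problems.append(("train/eval mode differs", f"leaf {i}: {type(mod_a).__name__}"))
    return problems


# Hypothetical example: identical parameters, but gx ran one forward pass in train mode,
# which updated its BatchNorm running statistics (buffers), and gn is in eval mode.
torch.manual_seed(0)
gn = nn.Sequential(nn.Conv2d(1, 4, 3), nn.BatchNorm2d(4))
gx = copy.deepcopy(gn)
gx.train()(torch.randn(2, 1, 8, 8))
gn.eval()

problems = compare_state(gn, gx)
for kind, where in problems:
    print(kind, where)
```

Here a `parameters()` comparison would report everything as equal, while `compare_state` flags the running statistics and the differing modes.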

The other model (similar size and operators) also has slightly different hyperparameters, e.g. the alphas of LeakyReLU, but it still produces identical outputs…
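Hyperparameters such as LeakyReLU's alpha (`negative_slope`) or ConvTranspose2d's stride, padding and output_padding are not stored in `parameters()`, so a parameter check cannot reveal a mismatch in them. A rough way to compare them is to compare the `repr()` of each pair of leaf modules, which includes the configuration printed by each module's `extra_repr()`. This is a sketch assuming both models list their operators in the same order; `config_differences` and the toy layers are hypothetical.

```python
import torch.nn as nn


def leaf_modules(model: nn.Module) -> list:
    """Modules without children, in registration order."""
    return [m for m in model.modules() if len(list(m.children())) == 0]


def config_differences(model_a: nn.Module, model_b: nn.Module) -> list:
    """Return (index, repr_a, repr_b) for each pair of leaf modules whose repr differs."""
    diffs = []
    leaves_a, leaves_b = leaf_modules(model_a), leaf_modules(model_b)
    for i, (mod_a, mod_b) in enumerate(zip(leaves_a, leaves_b)):
        if repr(mod_a) != repr(mod_b):
            diffs.append((i, repr(mod_a), repr(mod_b)))
    if len(leaves_a) != len(leaves_b):
        n = min(len(leaves_a), len(leaves_b))
        diffs.append((n, "leaf count", f"{len(leaves_a)} vs {len(leaves_b)}"))
    return diffs


# Hypothetical models that differ only in the LeakyReLU slope.
gn = nn.Sequential(
    nn.Conv2d(1, 4, 3, padding=1),
    nn.LeakyReLU(0.2),
    nn.ConvTranspose2d(4, 1, 3, stride=2, padding=1, output_padding=1),
)
gx = nn.Sequential(
    nn.Conv2d(1, 4, 3, padding=1),
    nn.LeakyReLU(0.1),
    nn.ConvTranspose2d(4, 1, 3, stride=2, padding=1, output_padding=1),
)
for index, a, b in config_differences(gn, gx):
    print(index, a, "!=", b)
```

`repr()` only shows what each module's `extra_repr()` reports; it says nothing about code inside a custom class's `forward()`, such as operations applied functionally between layers.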

Any ideas are highly appreciated!