docs: torch.nn.KLDivLoss

which I think should be:

Hi Kabu!

No, the documentation for KLDivLoss is correct.

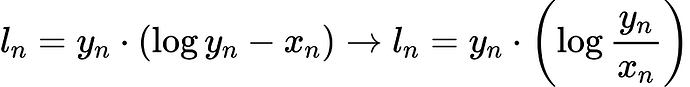

The equation you posted an image of contains the term:

y_n (log y_n - x_n)
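To check this reading of the formula, here is a minimal sketch. It assumes a recent PyTorch, and the tensor shapes and random data are arbitrary. It compares the pointwise output of `KLDivLoss` (with `reduction="none"`) against the term computed by hand, where `x` is used as-is with no log applied to it:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# x_n: the input, supplied as log-probabilities (here via log_softmax)
x = torch.log_softmax(torch.randn(4, 5, dtype=torch.float64), dim=1)
# y_n: the target, supplied as plain probabilities (the default, log_target=False)
y = torch.softmax(torch.randn(4, 5, dtype=torch.float64), dim=1)

# reduction="none" returns the pointwise loss l_n, one entry per element
pointwise = nn.KLDivLoss(reduction="none")(x, y)

# The documented term y_n (log y_n - x_n): no log is taken of x_n here
manual = y * (torch.log(y) - x)

print(torch.allclose(pointwise, manual))  # True
```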

You are expecting log x_n rather than just x_n. But KLDivLoss expects x_n to be already a log-probability. Quoting from the documentation you linked to:

> As with NLLLoss, the input given is expected to contain log-probabilities and is not restricted to a 2D Tensor. The targets are interpreted as probabilities by default, but could be considered as log-probabilities with `log_target` set to `True`.

Best.

K. Frank
