I have an FCN8s model that does binary segmentation (person) on a dataset of random objects. On that dataset I get about 59% Intersection over Union (IoU) accuracy.

After this I take the weights and try to segment brain tumors.

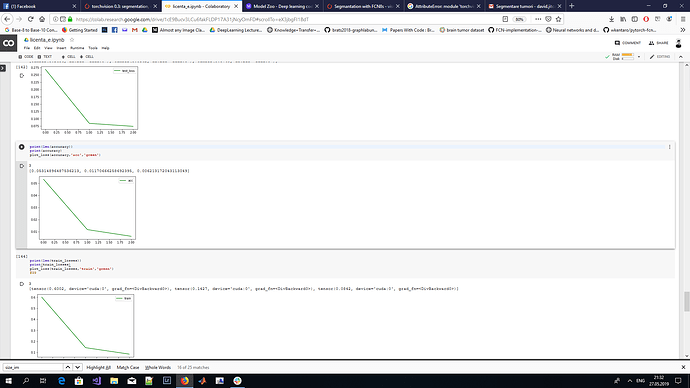

The train loss is decreasing, the test loss is decreasing, and the accuracy (intersection over union) is decreasing too (so low it barely exists, 0.03%). Does anyone have an idea?

Accuracy shouldn’t decrease. Do you mind posting your loss function?

I am using CrossEntropy loss.

The first plot is the test loss, then accuracy, and the last plot is the train loss, after three epochs.

Change your metrics and loss.

Metric - Dice or IOU

loss - Dice

Give it a try.
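For reference, a minimal sketch of how IoU and Dice can be computed for a pair of binary masks. The function name `binary_iou_and_dice` and the toy 4x4 masks are made up for illustration and are not taken from this thread.

```python
import torch


def binary_iou_and_dice(pred_mask, true_mask, eps=1e-7):
    """Return (IoU, Dice) for two binary masks of the same shape."""
    pred = pred_mask.bool()
    true = true_mask.bool()
    intersection = (pred & true).sum().item()
    union = (pred | true).sum().item()
    total = pred.sum().item() + true.sum().item()
    iou = intersection / (union + eps)
    dice = 2 * intersection / (total + eps)
    return iou, dice


# Toy example: 4 true foreground pixels in a 2x2 square.
true_mask = torch.zeros(4, 4, dtype=torch.long)
true_mask[1:3, 1:3] = 1

# Prediction hits 2 of them and adds 1 false positive.
pred_mask = torch.zeros(4, 4, dtype=torch.long)
pred_mask[1, 1:4] = 1

iou, dice = binary_iou_and_dice(pred_mask, true_mask)

# An all-background prediction has an empty intersection, so both scores are 0.
empty_iou, empty_dice = binary_iou_and_dice(torch.zeros_like(true_mask), true_mask)
```

Here the intersection is 2 pixels and the union is 5, so IoU is 2/5 and Dice is 4/7. Dice is always at least as large as IoU for the same masks, so the two numbers are not directly comparable.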

As I said, I'm already using IoU as the accuracy metric. On the other dataset I managed to get 59%, so I don't understand why it doesn't work on this one.

Different problems require different metrics.

Hi, I found this loss:

    import torch
    import torch.nn.functional as F


    def dice_loss(logits, true, eps=1e-7):
        """Computes the Sørensen–Dice loss.

        Note that PyTorch optimizers minimize a loss. In this
        case, we would like to maximize the Dice coefficient, so we
        return 1 minus the Dice coefficient.

        Args:
            true: a tensor of integer class labels of shape [B, 1, H, W].
            logits: a tensor of shape [B, C, H, W]. Corresponds to
                the raw output or logits of the model.
            eps: added to the denominator for numerical stability.

        Returns:
            dice_loss: the Sørensen–Dice loss.
        """
        num_classes = logits.shape[1]
        if num_classes == 1:
            # Binary case: build a 2-channel one-hot target (foreground first)
            # and matching 2-channel probabilities from the sigmoid.
            true_1_hot = torch.eye(num_classes + 1, device=true.device)[true.squeeze(1)]
            true_1_hot = true_1_hot.permute(0, 3, 1, 2).float()
            true_1_hot_f = true_1_hot[:, 0:1, :, :]
            true_1_hot_s = true_1_hot[:, 1:2, :, :]
            true_1_hot = torch.cat([true_1_hot_s, true_1_hot_f], dim=1)
            pos_prob = torch.sigmoid(logits)
            neg_prob = 1 - pos_prob
            probas = torch.cat([pos_prob, neg_prob], dim=1)
        else:
            # Multi-class case: one-hot encode the labels and use softmax.
            true_1_hot = torch.eye(num_classes, device=true.device)[true.squeeze(1)]
            true_1_hot = true_1_hot.permute(0, 3, 1, 2).float()
            probas = F.softmax(logits, dim=1)
        true_1_hot = true_1_hot.type(logits.type())
        # Sum over the batch and spatial dimensions, keeping one value per class.
        dims = (0,) + tuple(range(2, true.ndimension()))
        intersection = torch.sum(probas * true_1_hot, dims)
        cardinality = torch.sum(probas + true_1_hot, dims)
        dice = (2. * intersection / (cardinality + eps)).mean()
        return 1 - dice

And my network still doesn't improve :(( The loss drops from 0.64 to 0.50 after the first batch and then decreases very slowly (only from the third decimal place on, 0.50x), so the mean loss stays at about 0.5.

How long have you let your network train?

I have trained for six epochs, but after the first iteration all predicted pixels are 0 and the accuracy is 0 (this also happened with cross-entropy). I have tried Adam with learning rates from 0.001 down to 0.000001. With SGD, after 6 epochs I got an accuracy of 0.07, which is very small, and it wasn't improving; the loss was decreasing very slowly. I raised the lr to 0.1 and then the accuracy became 0.
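One possible explanation for "loss goes down, IoU is 0" is class imbalance: if tumor pixels are rare, predicting background everywhere already gives a low cross-entropy. The arithmetic below is only a sketch of that effect. The 1% foreground fraction and the 0.02 output probability are hypothetical numbers, not measurements from this thread.

```python
import math

# Hypothetical: 1% of all pixels belong to the tumor class.
foreground_fraction = 0.01


def mean_cross_entropy(p_fg_on_fg, p_fg_on_bg, fg_fraction):
    """Mean binary cross-entropy when the model outputs a constant
    foreground probability on foreground and on background pixels."""
    loss_fg = -math.log(p_fg_on_fg)        # loss on true foreground pixels
    loss_bg = -math.log(1.0 - p_fg_on_bg)  # loss on true background pixels
    return fg_fraction * loss_fg + (1.0 - fg_fraction) * loss_bg


# Untrained model: outputs 0.5 everywhere.
loss_start = mean_cross_entropy(0.5, 0.5, foreground_fraction)

# Collapsed model: outputs a foreground probability of 0.02 everywhere.
collapsed_prob = 0.02
loss_collapsed = mean_cross_entropy(collapsed_prob, collapsed_prob, foreground_fraction)

# After thresholding at 0.5 the collapsed model predicts no foreground at all,
# so the intersection with the true mask is empty and IoU is 0.
predicts_any_foreground = collapsed_prob >= 0.5

print(f"loss at start:       {loss_start:.3f}")
print(f"loss when collapsed: {loss_collapsed:.3f}")
print(f"predicts foreground: {predicts_any_foreground}")
```

With these made-up numbers the loss falls from about 0.69 to about 0.06 while not a single tumor pixel is predicted. If this is what is happening, checking the fraction of foreground pixels in the masks is a cheap first debugging step.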

Try it for a longer period of time. Because maybe your network is just learning that slow.

Try Adam with lr 0.005 and learning rate decay

Try SGD with lr 0.05 and learning rate decay

for, let's say, 30 epochs. If it doesn’t work, then maybe we gotta debug the problem more.

Also, my images are 512x512 and I use a batch of 10 images (that fills the whole Colab GPU), and it takes about 7 minutes per epoch.

So I reduce the images to 256x256 or 128x128, but not with the PyTorch resize, which uses interpolation. Instead I take every 4th pixel along the columns and rows (for 128x128) or every 2nd pixel (for 256x256), so I can train with a batch of 30. I don't think this is a big problem; it was recommended by a teacher.
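The subsampling described above can be sketched with plain tensor slicing. The random tensors below are stand-ins for the real images and masks, and the interpolation calls are only shown for comparison. One thing worth checking is how much of the foreground survives, since very small tumors can fall between the sampled pixels.

```python
import torch
import torch.nn.functional as F

# Hypothetical batch: 2 grayscale 512x512 images with sparse binary masks.
images = torch.rand(2, 1, 512, 512)
masks = (torch.rand(2, 1, 512, 512) > 0.99).long()

# Strided subsampling: keep every 4th pixel along height and width.
# The same stride must be applied to the image and to its mask.
images_128 = images[:, :, ::4, ::4]
masks_128 = masks[:, :, ::4, ::4]

# For comparison: interpolation-based resizing. Bilinear is fine for images,
# but masks need nearest-neighbour so the labels stay in {0, 1}.
images_interp = F.interpolate(images, size=(128, 128), mode="bilinear",
                              align_corners=False)
masks_nearest = F.interpolate(masks.float(), size=(128, 128),
                              mode="nearest").long()

# Fraction of foreground pixels before and after subsampling.
fg_before = masks.float().mean().item()
fg_after = masks_128.float().mean().item()
print(f"foreground fraction: {fg_before:.4f} -> {fg_after:.4f}")
```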

I also used a scheduler for the Adam lr, but if I step the scheduler after every epoch it doesn't change the lr, and if I step it after every iteration it changes too much, e.g. lr = 0.0000005.
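The thread doesn't say which scheduler was used, so the sketch below assumes `StepLR` with made-up settings. It illustrates that such a scheduler only counts calls to `step()`: a `step_size` chosen in epochs does nothing over a short run when stepped per epoch, and shrinks the lr to almost zero when stepped per batch.

```python
import torch
from torch.optim.lr_scheduler import StepLR


def lr_after(num_step_calls, step_size=10, gamma=0.5, base_lr=1e-3):
    """Return the lr after calling scheduler.step() num_step_calls times."""
    param = torch.nn.Parameter(torch.zeros(1))
    optimizer = torch.optim.SGD([param], lr=base_lr)
    scheduler = StepLR(optimizer, step_size=step_size, gamma=gamma)
    for _ in range(num_step_calls):
        optimizer.step()   # in real training this follows loss.backward()
        scheduler.step()
    return optimizer.param_groups[0]["lr"]


# Hypothetical training length.
epochs, batches_per_epoch = 6, 100

lr_per_epoch = lr_after(epochs)                      # stepped once per epoch
lr_per_batch = lr_after(epochs * batches_per_epoch)  # stepped once per batch

print(f"stepped per epoch: {lr_per_epoch:.2e}")
print(f"stepped per batch: {lr_per_batch:.2e}")
```

With 6 epochs and `step_size=10`, the per-epoch variant never reaches a decay step, while the per-batch variant halves the lr 60 times. Picking `step_size` in the same unit as the place where `step()` is called avoids both extremes.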

Use a scheduler with a validation metric:

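    from torch.optim.lr_scheduler import ReduceLROnPlateau

    # Setting up an optimizer lr reducer.
    # mode='min' because the value passed to step() below is a loss;
    # use mode='max' when stepping on a metric such as IoU.
    scheduler = ReduceLROnPlateau(optimizer, mode='min', factor=0.5,
                                  patience=int(params['patience']),
                                  verbose=True)

    # Making sure that the learning rate scheduler keeps track
    def testfunc():
        """
        .................
        """
        scheduler.step(test_average_loss)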

Try this for lr decay.

Hmm, I’m using PyTorch. How do I use a scheduler with a validation metric?

This is in PyTorch

    scheduler.step(validation_metric_in_here_as_a_number_probably)

Just type your validation metric in here.
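To make that concrete, here is a small self-contained sketch of `ReduceLROnPlateau` stepped once per epoch on a validation IoU. The one-layer model, the `patience=2` setting, and the list of IoU values are placeholders invented for the example. In real code the value would come from the validation loop.

```python
import torch
from torch.optim.lr_scheduler import ReduceLROnPlateau

# Stand-in for the real segmentation network.
model = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1)
optimizer = torch.optim.Adam(model.parameters(), lr=0.005)

# mode="max" because a higher IoU is better; use mode="min" when stepping on a loss.
scheduler = ReduceLROnPlateau(optimizer, mode="max", factor=0.5, patience=2)

# Made-up validation IoU per epoch: it stops improving after the second epoch.
fake_val_iou = [0.10, 0.20, 0.20, 0.20, 0.20, 0.20]

lr_history = []
for val_iou in fake_val_iou:
    # ... train for one epoch, then evaluate on the validation set ...
    scheduler.step(val_iou)  # once per epoch, with the metric as a plain float
    lr_history.append(optimizer.param_groups[0]["lr"])

print(lr_history)
```

With these values the lr stays at 0.005 until the metric has failed to improve for more than `patience` epochs, and is then halved once.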