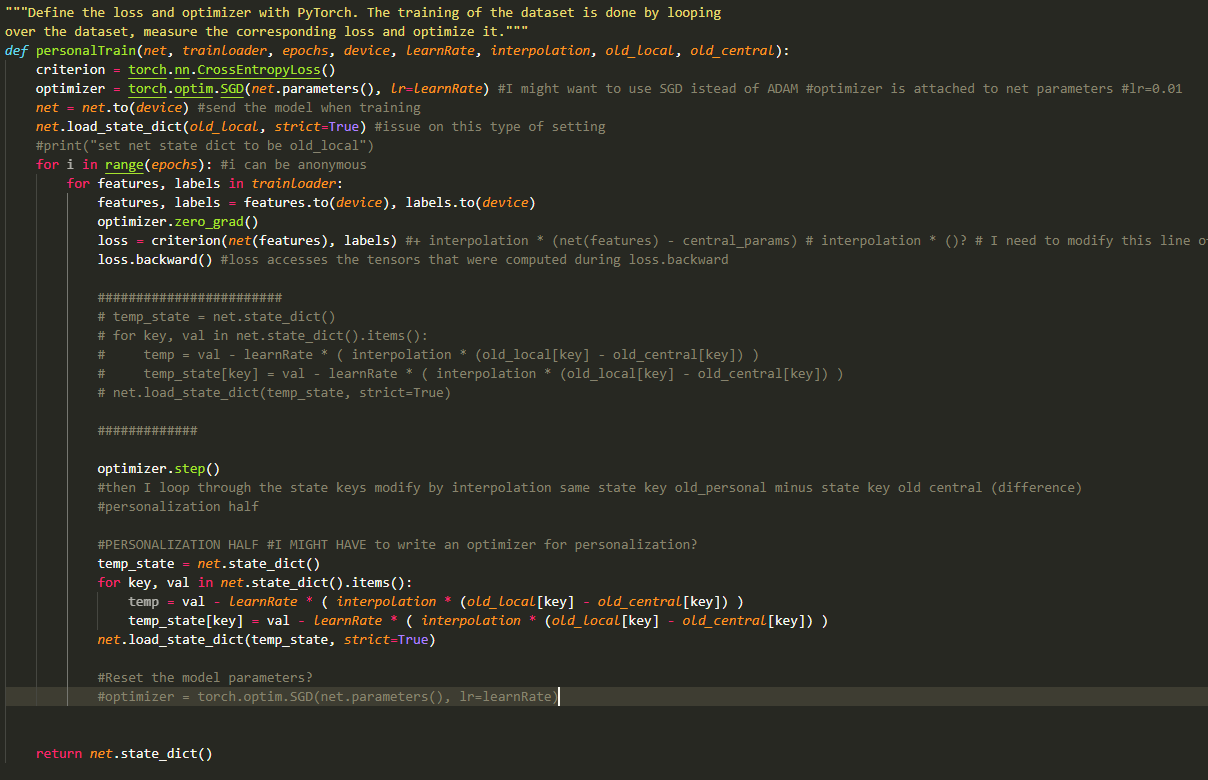

I am currently implementing the Ditto personalized federated learning algorithm on human activity recognition (HAR) data, but I am struggling to update the local model parameters/weights. Without the personalization step in my code, the local models reach ~55% accuracy; after personalization, accuracy becomes very low (~7%), almost random.

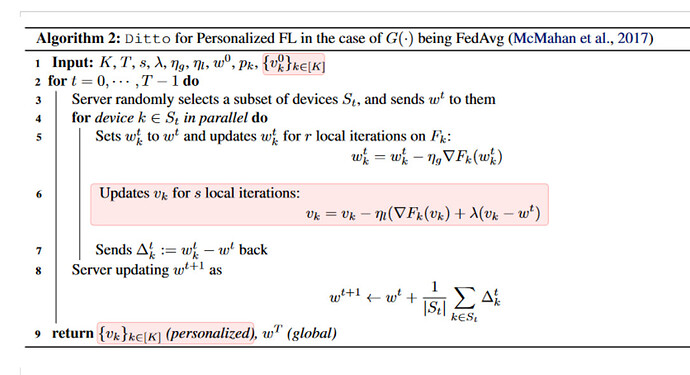

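Local updates are computed as `v_k = v_k - lr * (grad(v_k) + interpolation * (v_k - global_weights))`, where `grad(v_k)` is the stochastic (SGD) gradient of the local loss at `v_k`, `lr` is the learning rate, and `interpolation` is the regularization strength pulling the local model toward the global model.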
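A minimal NumPy sketch of that update rule, assuming a hypothetical toy local loss `0.5 * ||v - target||^2` (so its gradient is `v - target`). The names `ditto_local_step`, `grad_fn`, `target`, and `lam` (standing in for `interpolation`) are illustrative, not from any library:

```python
import numpy as np

def ditto_local_step(v, w_global, grad_fn, lr, lam):
    """One personalization step:
    v <- v - lr * (grad_fn(v) + lam * (v - w_global))."""
    g = grad_fn(v)  # (stochastic) gradient of the local loss at v
    return v - lr * (g + lam * (v - w_global))

# Hypothetical toy local loss 0.5 * ||v - target||^2, gradient v - target
target = np.array([2.0, -1.0])
grad_fn = lambda v: v - target

w_global = np.array([0.0, 0.0])  # hypothetical global model weights
v = w_global.copy()              # start personalized weights at the global ones (assumption)

for _ in range(200):
    v = ditto_local_step(v, w_global, grad_fn, lr=0.1, lam=1.0)

print(v)
```

With these toy values the personalized weights settle near `(target + lam * w_global) / (1 + lam)`, i.e. between the local optimum and the global model; setting `lam = 0` turns each step into a plain SGD step toward `target`.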

If `interpolation` is 0, local training reduces to plain SGD.

I'm currently stuck on line six of the algorithm. I want to compute the new parameter values of my local model from both the SGD gradient and the difference between the local model weights and the global model weights.

Currently I run one step of SGD and then modify the model parameters by `lr * interpolation * (local_weights - global_weights)`. I think this might be interfering with my optimizer. Is writing a custom optimizer the only way to implement line six of the algorithm?
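One way to see whether a custom optimizer is needed: the extra term `interpolation * (v_k - global_weights)` is exactly the gradient of the penalty `(interpolation / 2) * ||v_k - global_weights||^2`. The NumPy sketch below, using the same hypothetical toy loss and illustrative names (`local_grad`, `augmented_grad`, `sgd_step`), compares (a) one ordinary SGD step on the penalty-augmented loss with (b) an SGD step followed by a manual nudge computed from the pre-step weights and (c) a nudge computed from the post-step weights:

```python
import numpy as np

def local_grad(v, target):
    # Gradient of a hypothetical toy local loss 0.5 * ||v - target||^2
    return v - target

def augmented_grad(v, w_global, target, lam):
    # Gradient of F_k(v) + (lam / 2) * ||v - w_global||^2;
    # the penalty contributes lam * (v - w_global), the extra Ditto term.
    return local_grad(v, target) + lam * (v - w_global)

def sgd_step(v, grad, lr):
    # Plain SGD: no momentum, no weight decay, no adaptive scaling
    return v - lr * grad

target = np.array([2.0, -1.0])  # hypothetical local optimum
w_global = np.zeros(2)          # hypothetical global weights
v0 = np.array([0.5, 0.5])       # current personalized weights
lr, lam = 0.1, 1.0

# (a) One ordinary SGD step on the penalty-augmented loss
v_aug = sgd_step(v0, augmented_grad(v0, w_global, target, lam), lr)

# (b) SGD step on the local loss, then a nudge using the PRE-step weights
v_pre = sgd_step(v0, local_grad(v0, target), lr) - lr * lam * (v0 - w_global)

# (c) SGD step on the local loss, then a nudge using the POST-step weights
v_sgd = sgd_step(v0, local_grad(v0, target), lr)
v_post = v_sgd - lr * lam * (v_sgd - w_global)

print(np.allclose(v_aug, v_pre))   # True
print(np.allclose(v_aug, v_post))  # False
```

For plain SGD, (a) and (b) are identical, while (c) differs by a term of order `lr**2`. With momentum or Adam, a manual nudge applied outside the optimizer is never seen by the optimizer's internal state, so it no longer matches (a). In an autograd framework, formulation (a) corresponds to adding the penalty to the local loss while treating the global weights as constants, which lets the built-in optimizer be used unchanged.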