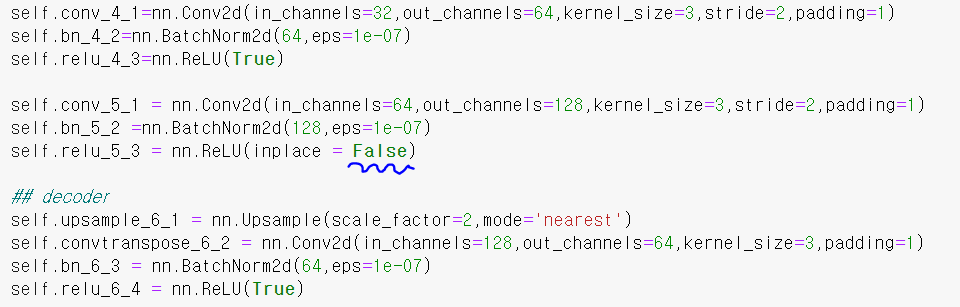

While training my network, I want to set a specific part of the latent space to zero based on the label.

For example, the output of a convolution layer is a 4-D tensor of shape (B, C, H, W).

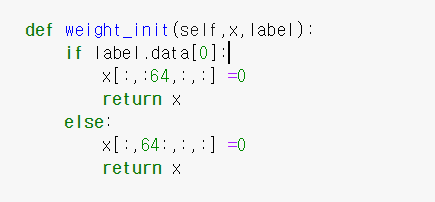

I want to set half of the channels to zero, depending on the label.
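One way to express "zero half of the channels per sample" without writing into the activation is to build a 0/1 mask and multiply. This is a minimal sketch under an assumed rule: the labels are binary, label 0 keeps the first half of the channels, and label 1 keeps the second half. The function name `mask_half_channels` is hypothetical.

```python
import torch


def mask_half_channels(x: torch.Tensor, labels: torch.Tensor) -> torch.Tensor:
    """Zero half of the channels of x (B, C, H, W), chosen per sample by a binary label.

    Assumed (hypothetical) rule: label 0 keeps channels [0, C//2) and zeros the
    rest; label 1 keeps channels [C//2, C) and zeros the rest.
    """
    B, C, H, W = x.shape
    half = C // 2
    # Channel indices as a (1, C) row: [[0, 1, ..., C-1]]
    ch = torch.arange(C, device=x.device).unsqueeze(0)
    keep_first = ch < half  # (1, C) bool: True for the first half
    # Pick keep_first or its complement per sample, broadcasting to (B, C)
    keep = torch.where(labels.view(B, 1) == 0, keep_first, ~keep_first)
    # Reshape to (B, C, 1, 1) so it broadcasts over H and W
    mask = keep.to(x.dtype).view(B, C, 1, 1)
    # Out-of-place multiply: x itself is never modified, so autograd stays valid
    return x * mask
```

Because `x * mask` creates a new tensor, the values saved by earlier layers (such as a ReLU output) are left untouched, and the gradient for the zeroed channels is simply zero.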

I tried doing it as shown above, but I got the following error:

RuntimeError: one of the variables needed for gradient computation has been modified by an inplace operation: [torch.cuda.FloatTensor [1, 128, 15, 15]], which is output 0 of ReluBackward1, is at version 2; expected version 1 instead. Hint: the backtrace further above shows the operation that failed to compute its gradient. The variable in question was changed in there or anywhere later. Good luck!
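The message points at the output of a ReLU: ReLU's backward pass reuses its own output, and assigning into a slice of that output in place bumps the tensor's version counter, so autograd refuses to use it. The sketch below reproduces that pattern and shows one common workaround, cloning before the in-place write. Both function names are hypothetical and the "zero the first half of the channels" rule is only for illustration.

```python
import torch


def zero_first_half_inplace(x: torch.Tensor) -> torch.Tensor:
    """Reproduces the error: writes into ReLU's saved output in place."""
    y = torch.relu(x)
    y[:, : y.shape[1] // 2] = 0  # in-place slice assignment on ReLU's output
    return y


def zero_first_half_clone(x: torch.Tensor) -> torch.Tensor:
    """Workaround: clone first, so the in-place write hits a separate tensor."""
    y = torch.relu(x).clone()  # ReLU's saved output is no longer the tensor we edit
    y[:, : y.shape[1] // 2] = 0
    return y
```

Calling `.backward()` on a result of `zero_first_half_inplace` should raise the same kind of `RuntimeError`, while `zero_first_half_clone` should backpropagate normally, with zero gradient for the zeroed channels. Cloning costs one extra copy of the activation; an out-of-place mask multiply avoids the in-place write altogether.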

How can I set a specific part of a tensor to zero without triggering this error?
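For an arbitrary region (not only a channel range), a general pattern is to mark the region in a separate boolean mask and use the out-of-place `Tensor.masked_fill`. This is a minimal sketch, not the only approach; the helper name `zero_region` and the tuple-of-slices `region` argument are assumptions for illustration.

```python
import torch


def zero_region(x: torch.Tensor, region) -> torch.Tensor:
    """Return a copy of x with x[region] set to zero; x is not modified.

    `region` is any index usable as mask[region], e.g. a tuple of slices
    such as (slice(None), slice(0, 64)) for "all samples, channels 0..63".
    """
    # Fresh boolean mask; writing into it in place is harmless for autograd
    mask = torch.zeros_like(x, dtype=torch.bool)
    mask[region] = True
    # masked_fill (no trailing underscore) returns a new tensor
    return x.masked_fill(mask, 0.0)
```

For example, `zero_region(h, (slice(None), slice(0, h.shape[1] // 2)))` zeros the first half of the channels for every sample, and `zero_region(h, (0, slice(64, 128)))` would zero channels 64 to 127 of sample 0 only.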