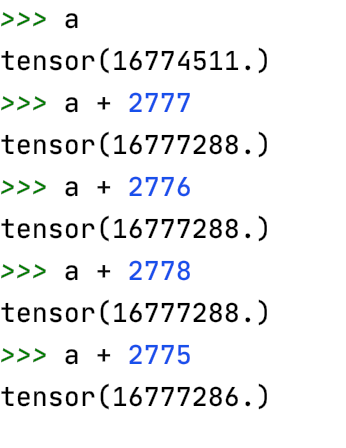

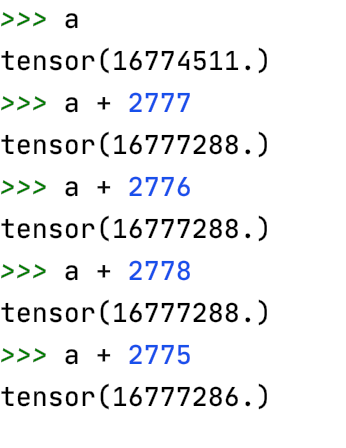

Why does this happen, and how can I avoid it?


Hi,

Actually, this is not a bug that slipped through. NumPy behaves the same way: the result comes from the limited precision of floating-point numbers.
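The original problem is only shown in the screenshots, so here is a hypothetical illustration instead. It performs the same single-precision addition in NumPy and PyTorch and shows that both round identically, while float64 gives a different (and still inexact) answer:

```python
import numpy as np
import torch

# The same float32 addition done in NumPy and in PyTorch
np_sum = np.float32(0.1) + np.float32(0.2)
torch_sum = torch.tensor(0.1, dtype=torch.float32) + torch.tensor(0.2, dtype=torch.float32)

# Both follow IEEE 754 single precision, so the rounded results match exactly
print(float(np_sum), torch_sum.item())

# In float64 the result differs from the float32 one, and is still not exactly 0.3
f64_sum = torch.tensor(0.1, dtype=torch.float64) + torch.tensor(0.2, dtype=torch.float64)
print(f64_sum.item())  # 0.30000000000000004, same as Python's 0.1 + 0.2
```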

You can look up the precision limits of each dtype, which is what matters for this post, with `torch.finfo`:

```python
import torch

i = torch.finfo(torch.float32)
print(i.eps)  # machine epsilon for float32: 1.1920928955078125e-07
```
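`eps` is the gap between 1.0 and the next representable value of a dtype. This small sketch, which uses nothing beyond `torch.finfo` and basic tensor arithmetic, checks that property for float32 and float64: adding `eps` to 1.0 is visible, while adding half of it rounds away completely.

```python
import torch

results = {}
for dtype in (torch.float32, torch.float64):
    eps = torch.finfo(dtype).eps
    one = torch.tensor(1.0, dtype=dtype)
    # 1 + eps is the next value above 1.0 that this dtype can store
    above = bool(one + eps > one)
    # Half of eps is too small: 1 + eps/2 rounds back to exactly 1.0
    lost = bool(one + eps / 2 == one)
    results[dtype] = (eps, above, lost)
    print(dtype, eps, above, lost)
```

float64 has a far smaller `eps` (about 2.2e-16 versus 1.2e-7), which is why switching dtype makes small differences survive.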

Best,

Yeah, it seems that setting `a` to `torch.double` solves the problem. Thanks.
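A minimal sketch of that fix, assuming a stand-in tensor `a` (hypothetical; the poster's actual tensor is only in the screenshots). At 2**24, float32 can no longer represent every integer, so adding 1 is lost to rounding; after casting to double it is kept:

```python
import torch

# Hypothetical stand-in for the poster's tensor `a`
a = torch.tensor(16777216.0)      # 2**24, default dtype is float32
print((a + 1).item())             # 16777216.0: the +1 is rounded away

a = a.to(torch.double)            # equivalent to a.double()
print((a + 1).item())             # 16777217.0: float64 keeps the +1
```

The trade-off is that float64 tensors use twice the memory and are usually slower, especially on many GPUs. If you want new floating-point tensors to default to double, `torch.set_default_dtype(torch.float64)` changes that globally.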