EDITED: You have to use a batch size of more than 1 for the other GPU to kick in; otherwise it will idle. Gosh, I feel stupid…
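Here is a minimal sketch of why this happens. It assumes that `nn.DataParallel` splits each input along dim 0 roughly the way `torch.chunk` does. That is an approximation of its internal scatter step, not its actual code path. `simulated_scatter` is a hypothetical helper. With a batch of 1 there is only one chunk, so only one replica (the one on `cuda:0`) gets any work.

```python
import torch


def simulated_scatter(batch: torch.Tensor, num_gpus: int) -> list:
    # Hypothetical helper: approximates how nn.DataParallel splits a batch
    # along dim 0 before sending one chunk to each GPU.
    return list(torch.chunk(batch, num_gpus, dim=0))


for batch_size in (1, 2, 4):
    x = torch.zeros(batch_size, 3, 8, 8)  # dummy NCHW image batch
    chunks = simulated_scatter(x, num_gpus=2)
    # batch_size=1 yields a single chunk, so the second GPU receives nothing
    print(batch_size, [c.shape[0] for c in chunks])
```

In practice this means setting the DataLoader's `batch_size` to at least the number of GPUs, ideally a multiple of it so the chunks come out even.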

I have downloaded the tutorial Jupyter notebook on data parallelism, and I know it works because it gave me the correct outputs for a 2-GPU setup. I followed the same idea in my custom code, which builds on top of CycleGAN. The network itself has 2 generators and 2 discriminators, so I did:

```python
import torch
import torch.nn as nn

# Generator, Discriminator, weights_init_normal and opt come from my CycleGAN code
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

netG_A2B = Generator(opt.input_nc, opt.output_nc, opt.ngf, opt.residual_num)
netG_B2A = Generator(opt.output_nc, opt.input_nc, opt.ngf, opt.residual_num)
netD_A = Discriminator(opt.input_nc, opt.ndf)
netD_B = Discriminator(opt.output_nc, opt.ndf)

netG_A2B.apply(weights_init_normal)
netG_B2A.apply(weights_init_normal)
netD_A.apply(weights_init_normal)
netD_B.apply(weights_init_normal)

# Wrap each network so every forward pass is split across all visible GPUs
netG_A2B = nn.DataParallel(netG_A2B)
netG_B2A = nn.DataParallel(netG_B2A)
netD_A = nn.DataParallel(netD_A)
netD_B = nn.DataParallel(netD_B)

# DataParallel expects the parameters on device_ids[0] (cuda:0 here)
netG_A2B.to(device)
netG_B2A.to(device)
netD_A.to(device)
netD_B.to(device)
```
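Here is a self-contained sketch of the same setup with less repetition. `TinyNet`, `weights_init_normal` and `wrap` below are hypothetical stand-ins for the CycleGAN `Generator`/`Discriminator` and the real init function, included only so the block runs on its own. On a machine without CUDA, `nn.DataParallel` simply calls the wrapped module.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    # Hypothetical stand-in for Generator / Discriminator
    def __init__(self, in_ch: int, out_ch: int):
        super().__init__()
        self.conv = nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1)

    def forward(self, x):
        return self.conv(x)


def weights_init_normal(m: nn.Module) -> None:
    # Hypothetical init: N(0, 0.02) for conv weights
    if isinstance(m, nn.Conv2d):
        nn.init.normal_(m.weight, 0.0, 0.02)


def wrap(model: nn.Module, device: torch.device) -> nn.Module:
    # Initialise, wrap for multi-GPU, then move parameters to device_ids[0]
    model.apply(weights_init_normal)
    return nn.DataParallel(model).to(device)


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
netG_A2B = wrap(TinyNet(3, 1), device)
netG_B2A = wrap(TinyNet(1, 3), device)
netD_A = wrap(TinyNet(3, 1), device)
netD_B = wrap(TinyNet(1, 1), device)

print("visible GPUs:", torch.cuda.device_count())
fake_B = netG_A2B(torch.rand(2, 3, 16, 16, device=device))
print(fake_B.shape)
```

When saving checkpoints, `netG_A2B.module.state_dict()` gives keys without the `module.` prefix that `DataParallel` adds.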

I have also moved my inputs to the device with `.to(device)`, as shown in the tutorial notebook; these inputs are later fed into the corresponding netGs:

```python
for idx, batch in enumerate(dataloader):
    realA = batch['portrait'].to(device)
    realB = batch['lineart'].to(device)
```
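Since `DataParallel` splits each batch across GPUs, the DataLoader is where the batch size has to be large enough. Here is a sketch, assuming a hypothetical `PairedImages` dataset that returns the same `'portrait'`/`'lineart'` dict keys as above. The batch size is tied to the GPU count so every GPU gets at least one sample.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class PairedImages(Dataset):
    # Hypothetical stand-in for the portrait/lineart dataset
    def __init__(self, n: int = 16, size: int = 32):
        self.n, self.size = n, size

    def __len__(self) -> int:
        return self.n

    def __getitem__(self, i: int) -> dict:
        return {"portrait": torch.rand(3, self.size, self.size),
                "lineart": torch.rand(1, self.size, self.size)}


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
num_gpus = max(1, torch.cuda.device_count())
batch_size = 2 * num_gpus  # at least one sample per GPU, evenly divisible
# drop_last avoids a final short batch that could be smaller than the GPU count
dataloader = DataLoader(PairedImages(n=4 * batch_size), batch_size=batch_size,
                        shuffle=True, drop_last=True)

for idx, batch in enumerate(dataloader):
    realA = batch["portrait"].to(device)  # shape: (batch_size, 3, 32, 32)
    realB = batch["lineart"].to(device)   # shape: (batch_size, 1, 32, 32)
```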

I first get a warning saying:

```
Anaconda3\lib\site-packages\torch\cuda\nccl.py:24: UserWarning: PyTorch is not compiled with NCCL support
  warnings.warn('PyTorch is not compiled with NCCL support')
```

Quick googling suggests it's a warning related to multi-GPU support?
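Here is a quick diagnostic sketch to see what this PyTorch build supports. NCCL is NVIDIA's multi-GPU communication library and only supports Linux, so the warning is expected on a Windows install like this one (judging by the `Anaconda3\lib` path). As far as I understand, `nn.DataParallel` falls back to plain device-to-device copies when NCCL is missing, so the warning alone shouldn't keep the second GPU idle. `report_multi_gpu_support` is a hypothetical helper name.

```python
import torch
import torch.distributed as dist


def report_multi_gpu_support() -> dict:
    # torch.distributed can be missing entirely from some builds,
    # so check is_available() before asking about the NCCL backend.
    nccl = dist.is_available() and dist.is_nccl_available()
    gpus = [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
    return {"cuda": torch.cuda.is_available(), "nccl": nccl, "gpus": gpus}


print(report_multi_gpu_support())
```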

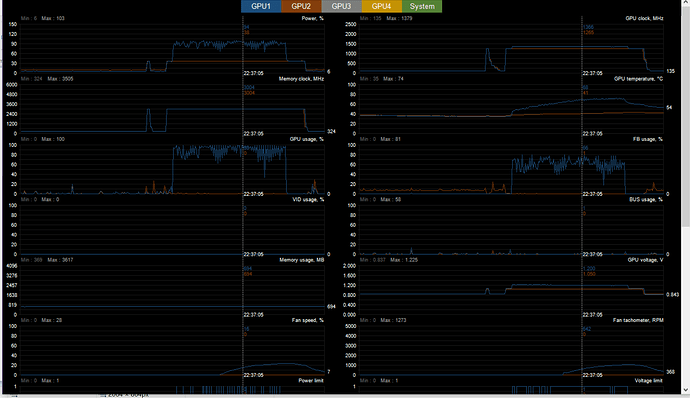

If I check my graphics card monitoring app I see

You can see that both GPU clocks are maxed out in the first panel on the right side. However, the second panel on the left side shows only one GPU at 99% usage while the other idles at 0%.
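Here is one way to check from inside the script, rather than the monitoring app, that each GPU gets a slice of the batch. It follows the spirit of the tutorial's "In Model" print. A hypothetical `DeviceProbe` module records the device and batch size of every input it sees. With two GPUs and a batch of 4, I would expect one entry per GPU. With a batch of 1, I would expect only `cuda:0`.

```python
import threading

import torch
import torch.nn as nn

seen = []  # (device, batch size) pairs recorded by every replica
_lock = threading.Lock()  # DataParallel runs replicas in parallel threads


class DeviceProbe(nn.Module):
    # Hypothetical wrapper that logs where each chunk of the batch lands
    def __init__(self, inner: nn.Module):
        super().__init__()
        self.inner = inner

    def forward(self, x):
        with _lock:
            seen.append((str(x.device), x.shape[0]))
        return self.inner(x)


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = nn.DataParallel(DeviceProbe(nn.Conv2d(3, 3, 3, padding=1))).to(device)

for batch_size in (1, 4):
    seen.clear()
    model(torch.rand(batch_size, 3, 16, 16, device=device))
    print(f"batch_size={batch_size}:", sorted(seen))
```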

I am currently trying to test the speed on a single card, but I am running into an out-of-memory error…
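Here is a rough sketch for the single-card baseline. It times a forward+backward step on one device without `DataParallel` and halves the batch size whenever CUDA runs out of memory. The small `nn.Sequential` model is a hypothetical stand-in for the CycleGAN networks. `torch.cuda.synchronize` is needed because CUDA kernels run asynchronously, so without it the timer could stop before the GPU has finished. One likely reason for the OOM is that with `DataParallel` each GPU only holds its share of the batch, so the same batch size on one card needs roughly twice the activation memory.

```python
import time

import torch
import torch.nn as nn


def sync(device: torch.device) -> None:
    # CUDA kernels run asynchronously; wait for them before reading the clock
    if device.type == "cuda":
        torch.cuda.synchronize(device)


def time_step(model: nn.Module, batch_size: int, device: torch.device,
              size: int = 64, steps: int = 5):
    """Mean seconds per forward+backward step, or None on out-of-memory."""
    x = torch.rand(batch_size, 3, size, size, device=device)
    try:
        model(x).mean().backward()  # warm-up step, excluded from the timing
        sync(device)
        start = time.perf_counter()
        for _ in range(steps):
            model(x).mean().backward()
        sync(device)
        return (time.perf_counter() - start) / steps
    except RuntimeError as err:  # CUDA OOM is a RuntimeError (or a subclass of it)
        if "out of memory" not in str(err):
            raise
        return None


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = nn.Sequential(nn.Conv2d(3, 16, 3, padding=1), nn.ReLU(),
                      nn.Conv2d(16, 3, 3, padding=1)).to(device)

batch_size = 8
seconds = None
while batch_size >= 1:
    seconds = time_step(model, batch_size, device)
    if seconds is not None:
        print(f"batch_size={batch_size}: {seconds * 1000:.1f} ms/step")
        break
    if device.type == "cuda":
        torch.cuda.empty_cache()  # release cached blocks before retrying
    batch_size //= 2  # halve and retry
```

Launching with the environment variable `CUDA_VISIBLE_DEVICES=0` also hides the second GPU from the `DataParallel` version, which gives a like-for-like comparison with the same code.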