I trained a model in PyTorch and saved the weights from the epoch with the minimum validation loss. I also saved the optimizer's state at that point. I then trained the model a second time, starting from the weights saved in the first run. Now I want to test the model and generate its outputs.
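For reference, the checkpointing pattern described above (keep the weights and optimizer state from the epoch with the lowest validation loss) might look roughly like the minimal sketch below. It assumes a toy `nn.Linear` model, made-up validation losses, and a hypothetical file name `best.pt`; the helpers `save_checkpoint` and `train_with_best_checkpoint` are illustrative and not part of the original code. Saving `model.module` when the model is wrapped in `nn.DataParallel` keeps the saved keys free of the `module.` prefix.

```python
import os
import tempfile

import torch
import torch.nn as nn


def save_checkpoint(path, model, optimizer, epoch, val_loss):
    """Save model and optimizer state together in one file."""
    # If the model is wrapped in nn.DataParallel, save the inner module so
    # the keys do not carry the "module." prefix.
    net = model.module if isinstance(model, nn.DataParallel) else model
    torch.save(
        {
            "epoch": epoch,
            "val_loss": val_loss,
            "model_state": net.state_dict(),
            "optimizer_state": optimizer.state_dict(),
        },
        path,
    )


def train_with_best_checkpoint(model, optimizer, val_losses, path):
    """Toy loop: `val_losses` stands in for real per-epoch validation losses."""
    best = float("inf")
    best_epoch = None
    for epoch, val_loss in enumerate(val_losses):
        # (the real training step for this epoch would go here)
        if val_loss < best:  # only overwrite the file when validation improves
            best = val_loss
            best_epoch = epoch
            save_checkpoint(path, model, optimizer, epoch, val_loss)
    return best_epoch


model = nn.Linear(4, 2)  # hypothetical stand-in for the real network
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=2e-7)
path = os.path.join(tempfile.mkdtemp(), "best.pt")  # hypothetical file name
best_epoch = train_with_best_checkpoint(model, optimizer, [0.9, 0.5, 0.7], path)
ckpt = torch.load(path, map_location="cpu")
```

Keeping the model and optimizer state in one file means they always come from the same epoch, which is harder to guarantee with two separate files.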

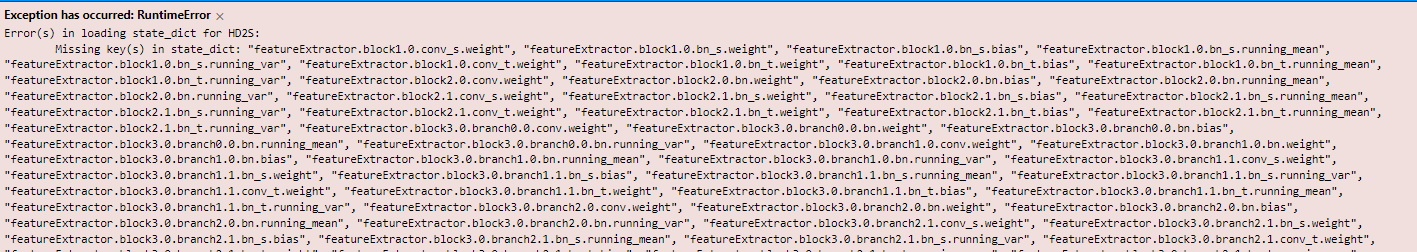

But, unfortunately, I faced with such an error:

To load the weights for the second training run, I used the following code:

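```python
import torch
import torch.nn as nn

# model, dev, lr, model_weights_path and optimizer_weights_path are defined elsewhere
# (the two paths are placeholders for the files saved in the first run)
# output_device expects a single device, not a list
model = nn.DataParallel(model, device_ids=[0, 1], output_device=0)
model = model.to(dev)
model.load_state_dict(torch.load(model_weights_path, map_location=dev))

optimizer = torch.optim.Adam(model.parameters(), lr=lr, weight_decay=2e-7)

# loading optimizer
optimizer.load_state_dict(torch.load(optimizer_weights_path))
```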
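One detail worth checking in this resume step: `Optimizer.load_state_dict` restores the saved hyperparameters, including `lr`, so the learning rate passed to the `torch.optim.Adam` constructor is overwritten by the value from the first run. The minimal sketch below, assuming a toy `nn.Linear` model and a hypothetical temporary file `optimizer.pt`, shows this and then re-applies the new learning rate explicitly.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Hypothetical stand-ins for the first run's model and saved optimizer file.
model = nn.Linear(4, 2)
old_opt = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=2e-7)
opt_path = os.path.join(tempfile.mkdtemp(), "optimizer.pt")
torch.save(old_opt.state_dict(), opt_path)

# Second run: build the optimizer with the new learning rate...
new_lr = 1e-4
optimizer = torch.optim.Adam(model.parameters(), lr=new_lr, weight_decay=2e-7)
optimizer.load_state_dict(torch.load(opt_path, map_location="cpu"))
# ...but load_state_dict restored the saved hyperparameters, including lr.
lr_after_load = optimizer.param_groups[0]["lr"]

# Re-apply the new learning rate after loading so it actually takes effect.
for group in optimizer.param_groups:
    group["lr"] = new_lr
```

Setting `lr` on each entry of `optimizer.param_groups` after loading keeps the restored Adam moment estimates while using the new learning rate.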

The only thing I changed for the second training run was the learning rate. When I load the new weights to test the model and generate its outputs, I get the error above. How can I solve this problem?
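One common cause of load errors in this setup (an assumption here, since the exact message is only in the screenshot) is that a `state_dict` saved from an `nn.DataParallel`-wrapped model has every key prefixed with `module.`, so it fails to load into an unwrapped model under the default `strict=True`. A minimal sketch of stripping that prefix, assuming a toy `nn.Linear` model, a hypothetical file `weights.pt`, and a hypothetical helper `strip_module_prefix`:

```python
import os
import tempfile
from collections import OrderedDict

import torch
import torch.nn as nn


def strip_module_prefix(state_dict, prefix="module."):
    """Return a copy of state_dict with a leading DataParallel prefix removed."""
    return OrderedDict(
        (k[len(prefix):] if k.startswith(prefix) else k, v)
        for k, v in state_dict.items()
    )


# Hypothetical: weights saved from a DataParallel-wrapped model.
wrapped = nn.DataParallel(nn.Linear(4, 2))
path = os.path.join(tempfile.mkdtemp(), "weights.pt")
torch.save(wrapped.state_dict(), path)  # keys: "module.weight", "module.bias"

# At test time the model is often built without the wrapper.
test_model = nn.Linear(4, 2)
saved = torch.load(path, map_location="cpu")
try:
    test_model.load_state_dict(saved)  # strict=True: key names do not match
    plain_load_failed = False
except RuntimeError:
    plain_load_failed = True

# Renaming the keys makes them match the unwrapped model.
test_model.load_state_dict(strip_module_prefix(saved))
test_model.eval()  # switch to inference mode before generating outputs
```

Alternatively, wrap the test model in `nn.DataParallel` the same way as during training before calling `load_state_dict`. Recent PyTorch versions also provide `torch.nn.modules.utils.consume_prefix_in_state_dict_if_present`, which does the same renaming in place.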