I am writing a relativistic GAN and have the following generator update function:

    def optimize_generator(self, real_batch, fake_batch):
        """
        relativistic generator update step
        """
        valid = torch.ones(real_batch.size(0), device=self.device)
        fake = torch.zeros(fake_batch.size(0), device=self.device)

        y_pred = self.netD(real_batch)
        y_pred_fake = self.netD(fake_batch.detach())

        # debug output: compare the loss input sizes against the target sizes
        print("(y_pred- torch.mean(y_pred_fake)).squeeze().size() : ", (y_pred - torch.mean(y_pred_fake)).squeeze().size())
        print("(y_pred_fake - torch.mean(y_pred)).squeeze().size() : ", (y_pred_fake - torch.mean(y_pred)).squeeze().size())
        print("fake.size() : ", fake.size())
        print("valid.size() : ", valid.size())
        print((y_pred - torch.mean(y_pred_fake)).squeeze().size() == fake.size())
        print((y_pred_fake - torch.mean(y_pred)).squeeze().size() == valid.size())

        real_v_fake = self.loss((y_pred - torch.mean(y_pred_fake)).squeeze(), fake)
        fake_v_real = self.loss((y_pred_fake - torch.mean(y_pred)).squeeze(), valid)
        g_loss = (real_v_fake + fake_v_real) / 2

        # gradient update pass
        self.gen_optim.zero_grad()
        g_loss.backward()
        self.gen_optim.step()

        return g_loss

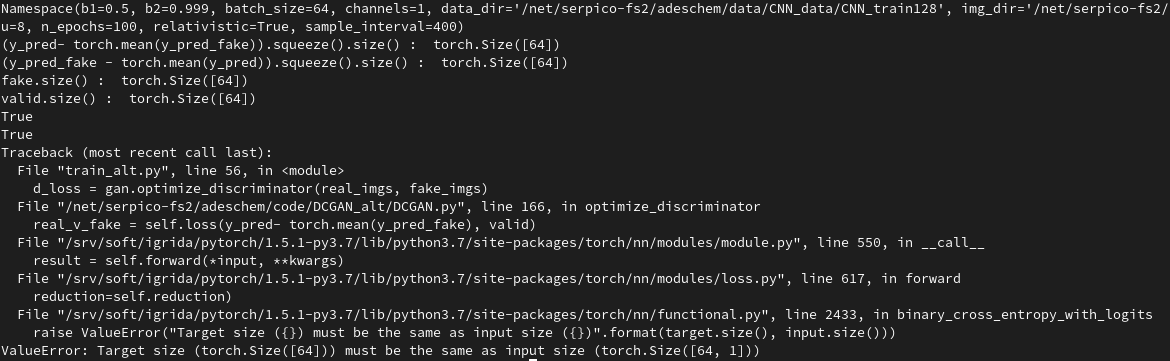

I have a very strange issue with the dimensions of the input and the target, linked in the following console output :

As you can see, the input and the target have the same size, torch.Size([64]), and a boolean equality check even succeeds, but BCEWithLogitsLoss keeps throwing this error. I had a look at the torch/nn/functional.py code, and I seem to be failing this exact check (line 2433):

    if not (target.size() == input.size()):
        raise ValueError("Target size ({}) must be the same as input size ({})".format(target.size(), input.size()))
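To make that comparison concrete, here is a minimal pure-Python sketch of how I understand the check to behave. Plain tuples stand in for `torch.Size` (which is a tuple subclass), and `squeezed` and `check_same_size` are hypothetical helpers I wrote for illustration, not PyTorch functions. The `(N, 1)` discriminator output shape is an assumption on my part. The sketch only shows one way the two sizes could differ on some call (a batch of one, where `.squeeze()` removes every dimension); I have not confirmed that this is what happens in my training loop.

```python
# Plain tuples stand in for torch.Size here (torch.Size is a tuple subclass),
# so this sketch runs without PyTorch installed.

def squeezed(shape):
    """Hypothetical helper: the shape tensor.squeeze() would produce,
    i.e. the input shape with every size-1 dimension removed."""
    return tuple(dim for dim in shape if dim != 1)


def check_same_size(input_shape, target_shape):
    """Hypothetical helper mirroring the comparison quoted above."""
    if not (target_shape == input_shape):
        raise ValueError(
            "Target size ({}) must be the same as input size ({})".format(
                target_shape, input_shape
            )
        )


# Assumed full batch: a (64, 1) output squeezes to (64,), matching the target.
check_same_size(squeezed((64, 1)), (64,))

# Assumed batch of one: (1, 1) squeezes to (), which no longer matches (1,).
try:
    check_same_size(squeezed((1, 1)), (1,))
    mismatch = None
except ValueError as err:
    mismatch = str(err)

print(mismatch)
```

If something like this applies, the sizes printed for one batch would match while a later batch with a different shape would still trip the check.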

I am at a bit of a loss, because I don’t see how that’s different from the boolean equality I tested before the call to BCEWithLogitsLoss. Any help would be much appreciated!