Hi guys,

I am training a multi-class image classification model.

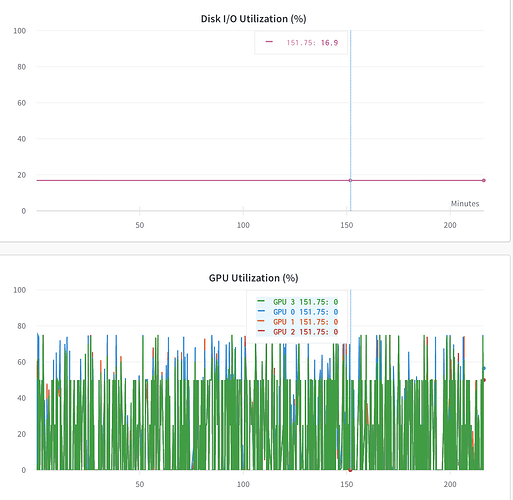

The screenshot above shows my disk I/O and GPU utilisation.

Any suggestions on how to improve them? I am using `torch.nn.DataParallel` to parallelize training.

`nn.DistributedDataParallel` with a single process per GPU is the recommended approach, as it'll be faster than `nn.DataParallel`.
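A minimal sketch of the one-process-per-GPU pattern follows. The toy dataset, linear model, port number and hyperparameters are hypothetical stand-ins for the real image pipeline. It uses the NCCL backend when CUDA is available and falls back to Gloo on CPU, so the same function can be smoke-tested on a single machine with `world_size=1`.

```python
import os

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler


def train(rank: int, world_size: int, epochs: int = 2, num_workers: int = 0) -> float:
    """Body of ONE DDP process; `rank` selects the GPU this process owns."""
    # Rendezvous info for the default env:// init method (single machine assumed; port is arbitrary).
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29500")
    use_cuda = torch.cuda.is_available()
    # NCCL for GPUs; Gloo lets the same sketch run on a CPU-only machine.
    dist.init_process_group("nccl" if use_cuda else "gloo", rank=rank, world_size=world_size)
    device = torch.device(f"cuda:{rank}" if use_cuda else "cpu")
    if use_cuda:
        torch.cuda.set_device(device)

    # Hypothetical stand-in for the image dataset: 256 random 3x32x32 images, 10 classes.
    g = torch.Generator().manual_seed(0)
    images = torch.randn(256, 3, 32, 32, generator=g)
    labels = torch.randint(0, 10, (256,), generator=g)
    dataset = TensorDataset(images, labels)
    # DistributedSampler hands each process a disjoint shard of the dataset.
    sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank)
    # num_workers: tune per system, as discussed in this thread.
    loader = DataLoader(dataset, batch_size=32, sampler=sampler, num_workers=num_workers)

    # Hypothetical tiny model; replace with the real classifier.
    model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)
    # DDP all-reduces gradients across processes during backward().
    ddp_model = DDP(model, device_ids=[rank] if use_cuda else None)
    optimizer = torch.optim.SGD(ddp_model.parameters(), lr=0.01)
    loss_fn = nn.CrossEntropyLoss()

    loss = torch.tensor(0.0)
    for epoch in range(epochs):
        sampler.set_epoch(epoch)  # reshuffle the shards differently every epoch
        for x, y in loader:
            x, y = x.to(device, non_blocking=True), y.to(device, non_blocking=True)
            optimizer.zero_grad()
            loss = loss_fn(ddp_model(x), y)
            loss.backward()
            optimizer.step()

    dist.destroy_process_group()
    return loss.item()  # last-batch loss of this process
```

On a machine with N GPUs you would start one process per GPU. One option is to call `torch.multiprocessing.spawn(train, args=(n_gpus,), nprocs=n_gpus)` from inside an `if __name__ == "__main__":` guard (`spawn` passes the process index as the first argument, i.e. the rank). Another is to launch the script with `torchrun`, which provides `RANK`, `LOCAL_RANK` and `WORLD_SIZE` as environment variables that you would read instead of passing them in.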

To increase the data loading performance, experiment with the `num_workers` argument of the `DataLoader` and pick the "sweet spot" for your system. Also make sure the data is stored locally (ideally on an SSD) rather than loaded over the network or from an HDD.
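One way to find that sweet spot is to time a full pass over the `DataLoader` on its own (no model) for several `num_workers` values. The sketch below assumes a hypothetical `SlowDataset` whose `time.sleep` stands in for reading and decoding an image file. With real data, pass your own `Dataset` and widen the candidate list, for example up to `os.cpu_count()`.

```python
import time

import torch
from torch.utils.data import DataLoader, Dataset


class SlowDataset(Dataset):
    """Hypothetical dataset; __getitem__ sleeps to mimic disk I/O plus image decoding."""

    def __init__(self, n: int = 64, delay_s: float = 0.005):
        self.n = n
        self.delay_s = delay_s

    def __len__(self) -> int:
        return self.n

    def __getitem__(self, idx: int):
        time.sleep(self.delay_s)  # stand-in for reading/decoding one image
        return torch.randn(3, 32, 32), idx % 10


def time_one_epoch(dataset: Dataset, num_workers: int, batch_size: int = 16) -> float:
    """Seconds needed to iterate once over `dataset` with the given number of workers."""
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True, num_workers=num_workers)
    start = time.perf_counter()
    for _images, _labels in loader:
        pass  # loading only, no model: this isolates the input pipeline
    return time.perf_counter() - start


def sweep_num_workers(dataset: Dataset, candidates=(0, 1, 2, 4)) -> dict:
    """Map each candidate num_workers value to its epoch time; the minimum is the sweet spot."""
    return {w: time_one_epoch(dataset, w) for w in candidates}
```

The timings include worker start-up, which is paid once per epoch unless the loader keeps its workers alive between epochs. Take the fastest value on your own hardware rather than assuming that more workers is always better.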

Will using `nn.DistributedDataParallel` increase the GPU utilisation or the I/O utilisation?

I varied the number of workers from 0 to 32, and surprisingly the disk I/O utilisation stayed the same throughout. Also, an epoch runs fastest when the number of workers is 0.

`DistributedDataParallel` should be more efficient than `DataParallel` and might therefore increase the GPU utilization, but you would have to verify this for your use case.

Using multiple workers should also help, so it's unexpected that loading the data in the main process (`num_workers=0`) is faster than loading it in background worker processes.

I would recommend profiling the data loading separately and comparing the loading times with `num_workers=0` and with multiple workers to see where the slowdown comes from.
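A simple way to do this is to split each epoch's wall time into the time spent waiting on the `DataLoader` and the time spent in the training step, then compare those numbers across worker counts. This is a minimal sketch. The linear model, SGD optimizer and toy `TensorDataset` are hypothetical placeholders for the real training code. `torch.cuda.synchronize()` is called because CUDA kernels run asynchronously, and without it GPU work would be misattributed to the next data wait.

```python
import time

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, Dataset


def profile_epoch(loader, model, optimizer, loss_fn, device) -> dict:
    """Split one epoch into 'blocked on the next batch' vs 'training step' seconds."""
    data_time = 0.0
    compute_time = 0.0
    model.train()
    end = time.perf_counter()
    for x, y in loader:
        data_time += time.perf_counter() - end  # waiting on the DataLoader (incl. worker start-up)
        start = time.perf_counter()
        x, y = x.to(device), y.to(device)
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        if device.type == "cuda":
            torch.cuda.synchronize()  # wait for queued GPU work so the split is honest
        compute_time += time.perf_counter() - start
        end = time.perf_counter()
    return {"data_s": data_time, "compute_s": compute_time}


def compare_workers(dataset: Dataset, worker_counts=(0, 4), batch_size: int = 32) -> dict:
    """Run profile_epoch once per num_workers value with a fresh (hypothetical) model."""
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    results = {}
    for workers in worker_counts:
        torch.manual_seed(0)
        model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)
        optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
        loader = DataLoader(dataset, batch_size=batch_size, shuffle=True, num_workers=workers)
        results[workers] = profile_epoch(loader, model, optimizer, nn.CrossEntropyLoss(), device)
    return results
```

If `data_s` dominates with `num_workers=0` but grows rather than shrinks with more workers, the bottleneck is likely in the dataset's `__getitem__` or in worker start-up, not in the GPU step.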