Hello guys,

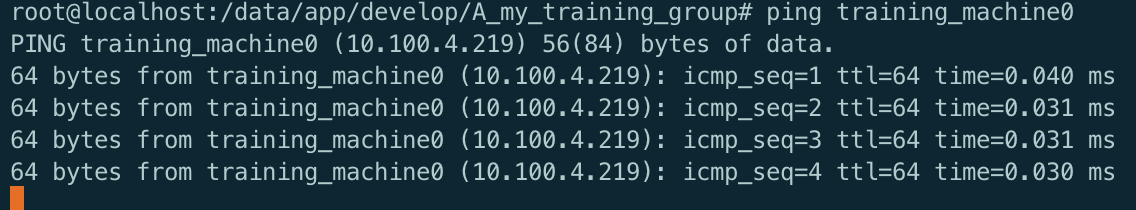

When I try to initialize DDP using the IP (10.100.4.219) or the hostname (training_machine0), the connection times out. If I change the IP to 127.0.0.1 (localhost), initialization works. However, I can ping both 10.100.4.219 and training_machine0 successfully.
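A successful ping only shows that ICMP gets through. It says nothing about whether TCP port 29400, which the rendezvous endpoint uses, is reachable. The sketch below probes that port directly. The function name `tcp_port_open` is a hypothetical helper, not part of PyTorch, and the 3 s timeout is an arbitrary choice.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Unlike ping (ICMP), this exercises the actual TCP port the rendezvous uses.
    """
    try:
        # create_connection resolves the name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout subclasses OSError)
        # and name-resolution failures (socket.gaierror subclasses OSError).
        return False


if __name__ == "__main__":
    # Run on the second node while the launcher on training_machine0 is waiting.
    for host in ("training_machine0", "10.100.4.219"):
        print(host, tcp_port_open(host, 29400))
```

If this prints False while the launcher is running on the first node, the problem is at the TCP level (nobody listening, or a firewall), not in the training script.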

- Python: 3.8.12
- GPU: 8 × RTX 3090 per node, 2 nodes
- torch: 1.9.0+cu111
- Running in Docker with `--network host`

Launch command: `python -m torch.distributed.run --nnodes=2 --rdzv_id=6666 --rdzv_backend=c10d --nproc_per_node=8 --rdzv_endpoint=training_machine0:29400 -m develop.A_my_training_group.cluster_train`

Is something wrong with my settings, and how can I fix it?

More details: if I set `rdzv_endpoint` to 127.0.0.1 or localhost, everything works, but if I set it to training_machine0 or 10.100.4.219 (the local node's IP), the connection times out.
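One hypothesis worth ruling out (an assumption; the logs do not confirm it): with the c10d backend, one node has to host the store on the endpoint, and as far as I can tell from the 1.9 source each agent decides whether it is that node by comparing the endpoint host with its own hostname and addresses. If neither node concludes it is the host, nobody listens on port 29400 and every agent's `connect()` times out, whereas loopback addresses are always treated as local. For example, if `/etc/hosts` maps the machine's hostname to 127.0.1.1 (a common Debian/Ubuntu default), 10.100.4.219 would not match. The helper below is a hypothetical approximation of that kind of check, not PyTorch's actual code.

```python
import ipaddress
import socket


def endpoint_matches_this_machine(host: str) -> bool:
    """Hypothetical helper: does `host` refer to this machine?

    Checks loopback names/addresses, the local hostname, and the addresses the
    local hostname resolves to. Addresses on other NICs are NOT considered.
    """
    if host == "localhost":
        return True
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        addr = None  # `host` is a name, not an IP literal
    if addr is not None and addr.is_loopback:
        return True

    this_host = socket.gethostname()
    if host == this_host:
        return True
    try:
        infos = socket.getaddrinfo(this_host, None, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        return False  # this machine's own hostname does not resolve
    # Compare an IP-literal endpoint against what our hostname resolves to.
    return addr is not None and any(info[4][0] == str(addr) for info in infos)


if __name__ == "__main__":
    print("gethostname():", socket.gethostname())
    for host in ("training_machine0", "10.100.4.219", "127.0.0.1"):
        print(host, endpoint_matches_this_machine(host))
```

Run it inside the container on training_machine0; if both `training_machine0` and `10.100.4.219` print False there, the hostname/address mapping is worth investigating.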

ping training_machine0:

Logs:

    [INFO] 2022-01-10 14:53:21,159 run: Running torch.distributed.run with args: ['/opt/conda/lib/python3.8/site-packages/torch/distributed/run.py', '--nnodes=2', '--rdzv_id=6666', '--rdzv_backend=c10d', '--nproc_per_node=8', '--rdzv_endpoint=training_machine0:29400', '-m', 'develop.A_my_training_group.cluster_train']
    [INFO] 2022-01-10 14:53:21,161 run: Using nproc_per_node=8.
    *****************************************
    Setting OMP_NUM_THREADS environment variable for each process to be 1 in default, to avoid your system being overloaded, please further tune the variable for optimal performance in your application as needed.
    *****************************************
    [INFO] 2022-01-10 14:53:21,161 api: Starting elastic_operator with launch configs:
      entrypoint       : develop.A_my_training_group.cluster_train
      min_nodes        : 2
      max_nodes        : 2
      nproc_per_node   : 8
      run_id           : 6666
      rdzv_backend     : c10d
      rdzv_endpoint    : training_machine0:29400
      rdzv_configs     : {'timeout': 900}
      max_restarts     : 3
      monitor_interval : 5
      log_dir          : None
      metrics_cfg      : {}

    [ERROR] 2022-01-10 14:54:21,182 error_handler: {
      "message": {
        "message": "RendezvousConnectionError: The connection to the C10d store has failed. See inner exception for details.",
        "extraInfo": {
          "py_callstack": "Traceback (most recent call last):\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/c10d_rendezvous_backend.py\", line 145, in _create_tcp_store\n store = TCPStore( # type: ignore[call-arg]\nRuntimeError: connect() timed out.\n\nThe above exception was the direct cause of the following exception:\n\nTraceback (most recent call last):\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py\", line 348, in wrapper\n return f(*args, **kwargs)\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/launcher/api.py\", line 214, in launch_agent\n rdzv_handler = rdzv_registry.get_rendezvous_handler(rdzv_parameters)\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/registry.py\", line 64, in get_rendezvous_handler\n return handler_registry.create_handler(params)\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/api.py\", line 253, in create_handler\n handler = creator(params)\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/registry.py\", line 35, in _create_c10d_handler\n backend, store = create_backend(params)\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/c10d_rendezvous_backend.py\", line 204, in create_backend\n store = _create_tcp_store(params)\n File \"/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/c10d_rendezvous_backend.py\", line 163, in _create_tcp_store\n raise RendezvousConnectionError(\ntorch.distributed.elastic.rendezvous.api.RendezvousConnectionError: The connection to the C10d store has failed. See inner exception for details.\n",
          "timestamp": "1641797661"
        }
      }
    }
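The log timestamps suggest that the 900 s `timeout` shown in `rdzv_configs` is not the one that expired: the error is logged about 60 s after the elastic agent starts. My assumption is that the initial store connection uses its own, shorter timeout (possibly a separate `read_timeout` setting with a 60 s default; treat that name as an assumption and check `c10d_rendezvous_backend.py` in your install). The arithmetic, using the two timestamps copied from the logs:

```python
from datetime import datetime

# Timestamp format used by the launcher's log lines.
FMT = "%Y-%m-%d %H:%M:%S,%f"

started = datetime.strptime("2022-01-10 14:53:21,161", FMT)  # "Starting elastic_operator"
failed = datetime.strptime("2022-01-10 14:54:21,182", FMT)   # "[ERROR] ... error_handler"

elapsed = (failed - started).total_seconds()
# Prints 60.021 s, far below the 900 s rendezvous timeout in rdzv_configs.
print(f"store connection gave up after {elapsed:.3f} s")
```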

    Traceback (most recent call last):
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/c10d_rendezvous_backend.py", line 145, in _create_tcp_store
        store = TCPStore( # type: ignore[call-arg]
    RuntimeError: connect() timed out.

    The above exception was the direct cause of the following exception:

    Traceback (most recent call last):
      File "/opt/conda/lib/python3.8/runpy.py", line 194, in _run_module_as_main
        return _run_code(code, main_globals, None,
      File "/opt/conda/lib/python3.8/runpy.py", line 87, in _run_code
        exec(code, run_globals)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/run.py", line 637, in <module>
        main()
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/run.py", line 629, in main
        run(args)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/run.py", line 621, in run
        elastic_launch(
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 116, in __call__
        return launch_agent(self._config, self._entrypoint, list(args))
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/multiprocessing/errors/__init__.py", line 348, in wrapper
        return f(*args, **kwargs)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/launcher/api.py", line 214, in launch_agent
        rdzv_handler = rdzv_registry.get_rendezvous_handler(rdzv_parameters)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/registry.py", line 64, in get_rendezvous_handler
        return handler_registry.create_handler(params)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/api.py", line 253, in create_handler
        handler = creator(params)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/registry.py", line 35, in _create_c10d_handler
        backend, store = create_backend(params)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/c10d_rendezvous_backend.py", line 204, in create_backend
        store = _create_tcp_store(params)
      File "/opt/conda/lib/python3.8/site-packages/torch/distributed/elastic/rendezvous/c10d_rendezvous_backend.py", line 163, in _create_tcp_store
        raise RendezvousConnectionError(
    torch.distributed.elastic.rendezvous.api.RendezvousConnectionError: The connection to the C10d store has failed. See inner exception for details.
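The inner `RuntimeError: connect() timed out.` does not by itself distinguish "port blocked" from "nobody is listening". A client that keeps retrying until a deadline reports both the same way. The sketch below illustrates that behaviour; it is my assumption about how a store client of this kind behaves, not PyTorch's `TCPStore` implementation, and `connect_with_deadline` is a hypothetical name.

```python
import socket
import time


def connect_with_deadline(host: str, port: int, deadline_s: float,
                          retry_s: float = 0.1) -> socket.socket:
    """Retry connecting until `deadline_s` elapses, then raise TimeoutError.

    A refused connection (nobody listening) is retried exactly like a dropped
    one, so both are eventually reported as a timeout.
    """
    end = time.monotonic() + deadline_s
    while True:
        remaining = end - time.monotonic()
        if remaining <= 0:
            raise TimeoutError(f"connect() to {host}:{port} timed out")
        try:
            return socket.create_connection((host, port), timeout=remaining)
        except OSError:
            # Refused, unreachable, or timed out: pause briefly, then retry.
            time.sleep(retry_s)
```

Pointing it at a closed local port raises `TimeoutError` only after the deadline, even though every individual attempt is refused immediately.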

Thank you!