I am using PyTorch on a Tegra X1 GPU and running NVIDIA's command-line profiler `nvprof` to profile the kernels of DCNN layers.

I am seeing, as reported by nvprof, that the half-precision (16-bit) workloads have just as many FLOPs as (actually slightly more than) the same layers run in full precision (32-bit). However, about half as much data is fetched from off-chip memory. The kernel durations also generally seem to be longer for 16-bit.

My conclusion is that some sort of bit packing is occurring with the `torch.half` data.

Could someone help explain what is going on here?

One advantage of FP16 operations is reduced memory bandwidth, since only half as much data needs to be transferred to the registers for the operation (and back to global device memory).
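A rough illustration of the bandwidth point, as a minimal sketch: the activation shape `(1, 64, 56, 56)` is a hypothetical example, and the byte counts cover only the tensor's own data, not caching effects or other traffic a real kernel generates.

```python
import torch

def tensor_bytes(t: torch.Tensor) -> int:
    # Bytes occupied by the tensor's elements (bytes per element x number of elements).
    return t.element_size() * t.numel()

# Hypothetical activation shape of a mid-network conv layer.
shape = (1, 64, 56, 56)
x32 = torch.empty(shape, dtype=torch.float32)
x16 = torch.empty(shape, dtype=torch.float16)

print("FP32 bytes:", tensor_bytes(x32))
print("FP16 bytes:", tensor_bytes(x16))
```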

Also, TensorCores can be used for suitable operations such as matrix multiplications and convolutions.

I’m not sure why the number of operations should decrease. Could you post a reference for this idea?

Thanks @ptrblck

It makes sense to me that there would be the same number of operations, but not that there would be more operations for 16-bit. Do I have a misconception that 16-bit tensors on the host are packed into 32-bit words before being sent to the GPU? Because of this, I had hypothesized that there might be additional unpacking operations on chip that would account for the discrepancy between 16-bit and 32-bit.

They are not packed to 32 bits, as that would completely eliminate the advantage of the reduced memory bandwidth.
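One way to check this on the host side, as a minimal sketch: in a contiguous FP16 tensor, adjacent elements sit 2 bytes apart (no padding to 32 bits), and each holds a 16-bit IEEE half-precision bit pattern (e.g. `1.0` is `0x3C00`). The example values are arbitrary.

```python
import torch

x = torch.tensor([1.0, 2.0, -1.0, 0.5], dtype=torch.float16)

# Each element occupies exactly 2 bytes.
print("element size:", x.element_size())

# Adjacent elements are 2 bytes apart in memory, i.e. not widened to 32-bit slots.
print("address step:", x[1].data_ptr() - x[0].data_ptr())

# Reinterpret the same storage as int16 to inspect the raw half-precision bit patterns.
bits = [b & 0xFFFF for b in x.view(torch.int16).tolist()]
print([hex(b) for b in bits])
```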

Depending on the operations you are running, you might see some additional operations, e.g. for permutations. Some FP16 convolutions use TensorCores in the channels-last memory format, so a permutation kernel will be called inside the cuDNN kernel. However, the number of additional operations should be low compared to the actual workload, and you should still see a speedup in FP16 if TensorCores are used.
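A minimal sketch of putting a model and its input into FP16 and the channels-last memory format, the layout in which some cuDNN FP16 convolutions can use TensorCores. Whether TensorCore kernels are actually selected depends on the GPU, the cuDNN version and the layer shapes; the helper name, conv layer and input shape here are hypothetical.

```python
import torch
import torch.nn as nn

def to_fp16_channels_last(model: nn.Module, x: torch.Tensor):
    # Cast parameters to FP16 and store 4-D weights in NHWC (channels-last) order.
    model = model.half().to(memory_format=torch.channels_last)
    # Cast the NCHW input to FP16 and make its memory layout channels-last.
    x = x.half().contiguous(memory_format=torch.channels_last)
    return model, x

if torch.cuda.is_available():
    conv = nn.Conv2d(64, 64, kernel_size=3, padding=1).cuda()
    inp = torch.randn(8, 64, 56, 56, device="cuda")
    conv, inp = to_fp16_channels_last(conv, inp)
    with torch.no_grad():
        out = conv(inp)
    print(out.dtype, out.is_contiguous(memory_format=torch.channels_last))
```

The logical shape stays NCHW; only the memory layout changes, so the rest of the model code does not need to be modified.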

Sorry @ptrblck, I had to gather some more data.

The data I am seeing shows that the workloads run at FP16 are slower than at FP32.

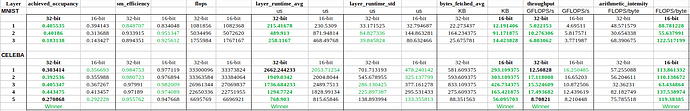

See the runtime column in the table. Additional information: my group is interested in the per-layer performance of these workloads, and based on the data I've collected I'm struggling to see the advantage of half precision. Even if the bandwidth is reduced, the FP16 layers generally seem to take longer to run, so the throughput isn't as good.

Could you shed some light on that? Thanks a lot!

(BTW, each row in the table is an average over ~30 trials.)
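To cross-check per-layer numbers from the framework side, here is a minimal sketch that averages wall-clock forward latency over ~30 trials, with warm-up runs and `torch.cuda.synchronize()` so asynchronous kernel launches are fully counted. Note that it measures the end-to-end latency of the call (including launch overhead), not the per-kernel durations nvprof reports; `time_layer`, the conv layer and the input shape are hypothetical.

```python
import time
import torch
import torch.nn as nn

def time_layer(layer: nn.Module, x: torch.Tensor, trials: int = 30, warmup: int = 5) -> float:
    """Mean forward latency of `layer` on `x`, in milliseconds."""
    layer.eval()
    with torch.no_grad():
        # Warm-up runs absorb one-time costs such as kernel selection and caching.
        for _ in range(warmup):
            layer(x)
        if x.is_cuda:
            torch.cuda.synchronize()  # wait for queued kernels before starting the clock
        start = time.perf_counter()
        for _ in range(trials):
            layer(x)
        if x.is_cuda:
            torch.cuda.synchronize()  # CUDA launches are asynchronous; wait for them to finish
        elapsed = time.perf_counter() - start
    return elapsed / trials * 1000.0

if torch.cuda.is_available():
    conv = nn.Conv2d(64, 64, kernel_size=3, padding=1).cuda()
    x = torch.randn(1, 64, 56, 56, device="cuda")
    fp32_ms = time_layer(conv, x)
    fp16_ms = time_layer(conv.half(), x.half())  # .half() casts the layer in place
    print(f"FP32: {fp32_ms:.3f} ms  FP16: {fp16_ms:.3f} ms")
```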

I also did a little more research: the device I'm using, the Tegra X1, apparently doesn't support TensorCore technology (although other Tegra versions do, according to the Tegra article on Wikipedia).

If there are no TensorCores, what benefits does FP16 have?
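A minimal sketch for checking this programmatically, assuming the usual rule of thumb that TensorCores are present from compute capability 7.0 (Volta) onwards; the Tegra X1 reports compute capability 5.3. `has_tensor_cores` is a hypothetical helper, not a PyTorch API.

```python
from typing import Tuple

import torch

def has_tensor_cores(capability: Tuple[int, int]) -> bool:
    # Assumption: TensorCores first appeared with compute capability 7.0 (Volta).
    return tuple(capability) >= (7, 0)

if torch.cuda.is_available():
    cap = torch.cuda.get_device_capability()
    print(torch.cuda.get_device_name(), cap, "TensorCores:", has_tensor_cores(cap))
```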

I’m not deeply familiar with the Tegra family. If you don’t have TensorCores, then the benefit would be the lower memory footprint, which might be used to increase the batch size and thus the throughput.

However, depending on your use case, this might not be interesting (e.g. for an inference use case with a single sample).
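A back-of-the-envelope sketch of how the smaller footprint can translate into a larger batch. It is deliberately simplified: it assumes a hypothetical 1 GiB budget and counts only one activation tensor of shape `(64, 56, 56)` per sample, ignoring weights, other activations and the cuDNN workspace.

```python
def max_batch_size(budget_bytes: int, elems_per_sample: int, bytes_per_elem: int) -> int:
    # Largest batch whose (simplified) activation memory fits in the budget.
    return budget_bytes // (elems_per_sample * bytes_per_elem)

BUDGET_BYTES = 1 * 1024**3       # hypothetical memory budget for activations
ELEMS_PER_SAMPLE = 64 * 56 * 56  # hypothetical activation elements per sample

fp32_batch = max_batch_size(BUDGET_BYTES, ELEMS_PER_SAMPLE, bytes_per_elem=4)
fp16_batch = max_batch_size(BUDGET_BYTES, ELEMS_PER_SAMPLE, bytes_per_elem=2)
print("FP32 batch:", fp32_batch, "FP16 batch:", fp16_batch)
```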

If you are using cuDNN, you might want to set `torch.backends.cudnn.benchmark = True` at the beginning of the script, which lets cuDNN benchmark the available algorithms for each input shape and select the fastest kernels.

Note that the first iteration for each new input shape would be slower due to the benchmarking.
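A minimal sketch of enabling the flag and paying the one-time benchmarking cost up front, by running one forward pass per input shape before any timing. `warm_up` is a hypothetical helper; the model and shapes in the usage are placeholders.

```python
import torch
import torch.nn as nn

# Let cuDNN try its available convolution algorithms and cache the fastest per input configuration.
torch.backends.cudnn.benchmark = True

def warm_up(model: nn.Module, input_shapes, device: torch.device, dtype: torch.dtype) -> None:
    # On CUDA, the first forward pass for each new input shape triggers cuDNN benchmarking;
    # later calls with the same shape reuse the cached algorithm choice.
    model.eval()
    with torch.no_grad():
        for shape in input_shapes:
            model(torch.randn(*shape, device=device, dtype=dtype))
    if device.type == "cuda":
        torch.cuda.synchronize()

if torch.cuda.is_available():
    dev = torch.device("cuda")
    model = nn.Conv2d(64, 64, kernel_size=3, padding=1).to(dev).half()
    warm_up(model, [(1, 64, 56, 56)], dev, torch.float16)
```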

Let me know if this reduces the FP16 latency.