Hello,

I’ve been using TensorBoard for a while to plot different scalars during training. Now I want to do hyperparameter tuning in an organised way, so I’m trying to use HParams correctly.

I plot scalars like this and it works well:

writer = SummaryWriter('runs/' + run_name)

...

...

# I do this every epoch

writer.add_scalar('Training/CE_Loss', loss_CE, epoch)

writer.add_scalar('Validation/Accuracy', validation_acc, epoch)

However, I’m seeing some weird behaviour after adding the hyperparameters. I have a dictionary with all the parameters of my experiment, plus an extra key containing the best validation accuracy. I use that dictionary to create the HParams table, and it looks fine in TensorBoard:

writer.add_hparams(experiment_data, {'Validation/Accuracy': experiment_data['VALIDATION_ACCURACY_BEST']})

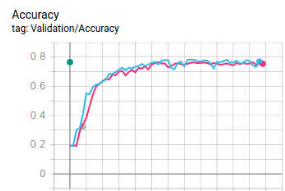
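To make the call above concrete, here is a minimal sketch of what it amounts to. Only `VALIDATION_ACCURACY_BEST` and the `Validation/Accuracy` tag come from my setup as described; the other keys and all the values are made up for illustration, and `split_hparams_and_metrics` and `log_hparams` are hypothetical helper names, not part of any library.

```python
def split_hparams_and_metrics(experiment_data,
                              best_key="VALIDATION_ACCURACY_BEST",
                              metric_tag="Validation/Accuracy"):
    """Build the two dictionaries that are passed to add_hparams.

    As in the call above, the whole experiment dictionary (including the
    best-accuracy key) is used as the hyperparameter dict, and the best
    validation accuracy is also reported as the metric.
    """
    hparams = dict(experiment_data)  # shallow copy, original is left untouched
    metrics = {metric_tag: experiment_data[best_key]}
    return hparams, metrics


def log_hparams(writer, experiment_data):
    """Call writer.add_hparams once, at the end of the experiment."""
    hparams, metrics = split_hparams_and_metrics(experiment_data)
    writer.add_hparams(hparams, metrics)
    return hparams, metrics


# Hypothetical experiment dictionary; keys and values are illustrative only.
example_experiment_data = {
    "LEARNING_RATE": 0.001,
    "BATCH_SIZE": 32,
    "VALIDATION_ACCURACY_BEST": 0.91,
}

# Intended usage (assumes the writer is the same SummaryWriter shown earlier):
#   log_hparams(writer, example_experiment_data)
```

The helpers only need an object with an `add_hparams(hparam_dict, metric_dict)` method, so the same `writer` that logs the per-epoch scalars is the one being passed in.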

The `add_hparams` call also adds a scalar (the green dot) to the existing plot:

The problem is that it also creates something that looks like a new run. This is very annoying because the colours start repeating very quickly, which makes it difficult to compare experiments:

I see a LOT of tutorials on TensorBoard + PyTorch, but none of them (not even the official one!) talks about HParams.

Am I doing something wrong? How could I avoid this?

Thanks