@Unity05

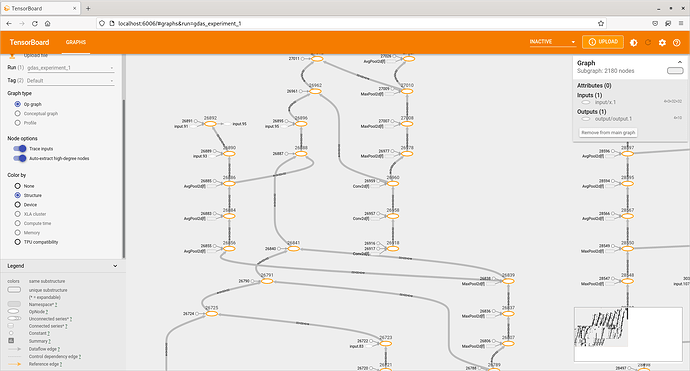

An error occurs and no graph is saved:

```
Traceback (most recent call last):
  File "/home/phung/PycharmProjects/beginner_tutorial/gdas.py", line 817, in <module>
    ltrain = train_NN(forward_pass_only=0)
  File "/home/phung/PycharmProjects/beginner_tutorial/gdas.py", line 574, in train_NN
    writer.add_graph(graph, NN_input)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/utils/tensorboard/writer.py", line 736, in add_graph
    self._get_file_writer().add_graph(graph(model, input_to_model, verbose, use_strict_trace))
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/utils/tensorboard/_pytorch_graph.py", line 295, in graph
    raise e
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/utils/tensorboard/_pytorch_graph.py", line 289, in graph
    trace = torch.jit.trace(model, args, strict=use_strict_trace)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/jit/_trace.py", line 741, in trace
    return trace_module(
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/jit/_trace.py", line 958, in trace_module
    module._c._create_method_from_trace(
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl
    return forward_call(*input, **kwargs)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1090, in _slow_forward
    result = self.forward(*input, **kwargs)
  File "/home/phung/PycharmProjects/beginner_tutorial/gdas.py", line 353, in forward
    y = self.cells[c].nodes[n].connections[cc].edges[e].forward_f(x)
  File "/home/phung/PycharmProjects/beginner_tutorial/gdas.py", line 112, in forward_f
    return self.f(x)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl
    return forward_call(*input, **kwargs)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/nn/modules/module.py", line 1090, in _slow_forward
    result = self.forward(*input, **kwargs)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/nn/modules/conv.py", line 446, in forward
    return self._conv_forward(input, self.weight, self.bias)
  File "/home/phung/PycharmProjects/venv/py39/lib/python3.9/site-packages/torch/nn/modules/conv.py", line 442, in _conv_forward
    return F.conv2d(input, weight, bias, self.stride,
RuntimeError: Cannot insert a Tensor that requires grad as a constant. Consider making it a parameter or input, or detaching the gradient
```
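The message suggests that the `Conv2d` weight reached through `self.cells[c].nodes[n].connections[cc].edges[e]` is not registered as a parameter of the model being traced, so `torch.jit.trace` tries to bake it in as a constant. One common cause, assumed here and not confirmed against gdas.py, is keeping submodules in plain Python lists: `nn.Module` only registers submodules assigned as attributes or held in containers such as `nn.ModuleList`. The minimal sketch below reproduces that assumption; `PlainListNet`, `ModuleListNet` and `try_trace` are hypothetical names for illustration only.

```python
import torch
import torch.nn as nn


class PlainListNet(nn.Module):
    """Edges kept in a plain Python list, so PyTorch does NOT register them."""

    def __init__(self):
        super().__init__()
        # Hypothetical stand-in for the edge operations in gdas.py
        self.edges = [nn.Conv2d(3, 3, kernel_size=3, padding=1)]

    def forward(self, x):
        for edge in self.edges:
            x = edge(x)
        return x


class ModuleListNet(nn.Module):
    """Same network, but the edges are registered through nn.ModuleList."""

    def __init__(self):
        super().__init__()
        self.edges = nn.ModuleList([nn.Conv2d(3, 3, kernel_size=3, padding=1)])

    def forward(self, x):
        for edge in self.edges:
            x = edge(x)
        return x


def try_trace(model, example):
    """Return None if torch.jit.trace succeeds, otherwise the error message."""
    try:
        torch.jit.trace(model, example)
        return None
    except RuntimeError as err:
        return str(err)


example = torch.randn(1, 3, 8, 8)

# Unregistered conv weights are invisible to .parameters() (and to the optimizer)
print(sum(p.numel() for p in PlainListNet().parameters()))   # 0
print(sum(p.numel() for p in ModuleListNet().parameters()))  # 84 (81 weights + 3 biases)

print(try_trace(PlainListNet(), example))
print(try_trace(ModuleListNet(), example))
```

If this assumption matches gdas.py, tracing the plain-list version should fail with the same `RuntimeError` as above, while the `nn.ModuleList` version should trace cleanly, so `writer.add_graph(graph, NN_input)` would likely work once the nested lists of cells, nodes, connections and edges are wrapped the same way. A side benefit is that those weights would then also appear in `model.parameters()` and get updated by the optimizer.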