I’m using a model with a CNN backbone and a Transformer as a long-term feature aggregator on a video dataset. The model performs well within the same domain, and I want to boost its performance with a self-supervised learning approach that makes use of a large dataset I have.
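For context, the general shape of such a pipeline (per-frame CNN features aggregated over time by a Transformer) can be sketched roughly as below. This is a toy NumPy illustration under stated assumptions, not the actual model: `frame_encoder` is a hypothetical linear stand-in for the CNN backbone, the aggregator is a single self-attention layer with a residual connection, clips are pooled by a temporal mean, and all sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)

def frame_encoder(frames, w):
    """Hypothetical stand-in for the CNN backbone: flattens each frame and
    applies a linear projection + ReLU, giving one feature vector per frame."""
    t = frames.shape[0]
    return np.maximum(frames.reshape(t, -1) @ w, 0.0)  # (T, D)

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over the time axis."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])          # (T, T) frame-to-frame scores
    scores -= scores.max(axis=-1, keepdims=True)     # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)         # softmax over keys
    return attn @ v                                  # (T, D)

def clip_embedding(frames, params):
    """Per-frame features -> temporal self-attention (residual) -> mean pooling."""
    feats = frame_encoder(frames, params["w_enc"])
    feats = feats + self_attention(feats, params["wq"], params["wk"], params["wv"])
    return feats.mean(axis=0)  # one (D,) vector per clip

# Arbitrary toy sizes: 16 frames of 8x8 RGB, 32-dim features (assumptions).
T, H, W, C, D = 16, 8, 8, 3, 32
params = {
    "w_enc": rng.normal(scale=0.1, size=(H * W * C, D)),
    "wq": rng.normal(scale=0.1, size=(D, D)),
    "wk": rng.normal(scale=0.1, size=(D, D)),
    "wv": rng.normal(scale=0.1, size=(D, D)),
}
clip = rng.normal(size=(T, H, W, C))
emb = clip_embedding(clip, params)
print(emb.shape)  # (32,)
```

In a real model the stand-ins would be a trained CNN and a multi-layer Transformer encoder, but the data flow (frames to per-frame features to a single clip-level vector) is the same.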

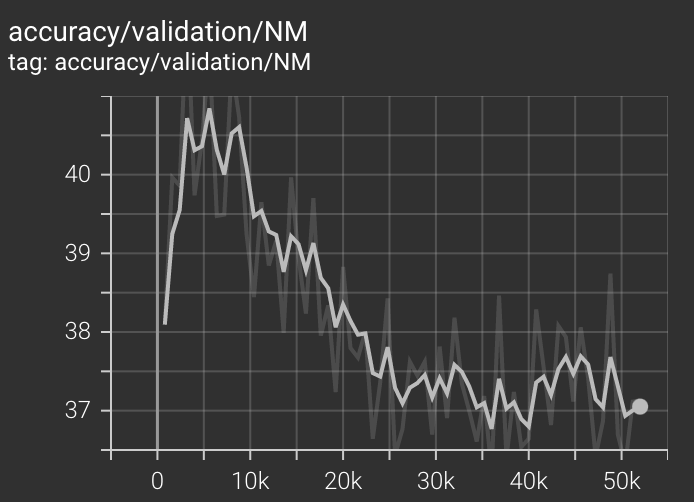

The accuracy on the smaller dataset is about 90%. When I pretrain this model on the larger dataset in a self-supervised fashion (using a contrastive loss), the accuracy when testing directly on the smaller dataset starts at around 40%, which is acceptable given the domain gap between the two datasets. However, instead of increasing, it decreases steadily over the epochs until it reaches about 35%. I would appreciate suggestions on how to address this problem and, ideally, get the usual increasing accuracy curve.
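The post does not say which contrastive objective is used. As one common example, a SimCLR-style InfoNCE (NT-Xent) loss over two augmented views of each clip could look like the following NumPy sketch. The function name `info_nce_loss`, the two-view pairing, and the temperature of 0.1 are assumptions for illustration.

```python
import numpy as np

def info_nce_loss(z1, z2, temperature=0.1):
    """Symmetric InfoNCE (NT-Xent-style) loss for a batch of paired views.

    z1, z2: (N, D) embeddings of two augmented views of the same N clips.
    Row i of z1 and row i of z2 form a positive pair; every other sample in
    the combined 2N batch is treated as a negative.
    """
    z = np.concatenate([z1, z2], axis=0)                # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)    # L2-normalise -> cosine similarity
    sim = z @ z.T / temperature                         # (2N, 2N) scaled similarities
    np.fill_diagonal(sim, -np.inf)                      # a sample is not its own candidate
    n = z1.shape[0]
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])  # index of each row's positive
    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    # Cross-entropy with the positive as the "correct class" for each row.
    return -log_prob[np.arange(2 * n), pos].mean()

rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))
aligned = info_nce_loss(z1, z1 + 0.01 * rng.normal(size=(8, 16)))  # views nearly identical
unrelated = info_nce_loss(z1, rng.normal(size=(8, 16)))            # views unrelated
print(aligned < unrelated)  # True
```

A lower value of this loss only means that the two views of each clip are closer to each other than to the other clips in the batch.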