I searched before asking. I found many related questions, but none of them was complete enough, so I want to give a comprehensive summary and an in-depth discussion here.

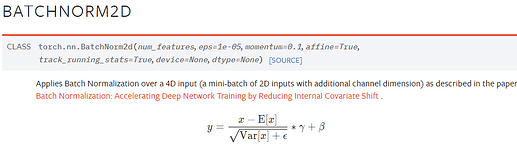

- First, we should clarify how the BN layer computes its output:

Reference: BatchNorm2d (PyTorch master documentation)
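Written out, the per-channel transform documented for BatchNorm2d is shown below. The mean and variance are computed per channel over the N, H and W dimensions, and ε is a small constant for numerical stability (1e-5 by default):

$$
y = \frac{x - \mathrm{E}[x]}{\sqrt{\mathrm{Var}[x] + \epsilon}} \cdot \gamma + \beta
$$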

The BN layer computes the mean and var of each batch of input data (per channel) and uses them to normalize the input. It then scales and shifts the result with γ and β, moving it to a new data distribution; γ and β are learnable parameters.

- According to my own experiments, putting the BN layer in eval mode does not prevent its learnable parameters (γ and β) from being updated. Only the behavior changes!
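A minimal sketch of such an experiment, assuming a standalone `nn.BatchNorm2d`, plain SGD and an arbitrary squared-output loss (all of these are illustrative choices, not anything specific from the threads below):

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

bn = nn.BatchNorm2d(num_features=3)
bn.eval()  # eval mode: normalize with running stats and do not update them

# Plain SGD over the learnable parameters gamma (bn.weight) and beta (bn.bias)
opt = torch.optim.SGD(bn.parameters(), lr=0.1)

x = torch.randn(8, 3, 4, 4)

# Snapshot parameters and running buffers before the update
weight_before = bn.weight.detach().clone()
bias_before = bn.bias.detach().clone()
running_mean_before = bn.running_mean.clone()
running_var_before = bn.running_var.clone()

loss = bn(x).pow(2).mean()  # arbitrary loss, only used to produce gradients
opt.zero_grad()
loss.backward()
opt.step()

weight_changed = not torch.equal(bn.weight.detach(), weight_before)
bias_changed = not torch.equal(bn.bias.detach(), bias_before)
running_mean_changed = not torch.equal(bn.running_mean, running_mean_before)
running_var_changed = not torch.equal(bn.running_var, running_var_before)

print("gamma changed:", weight_changed)
print("beta changed:", bias_changed)
print("running_mean changed:", running_mean_changed)
print("running_var changed:", running_var_changed)
```

In eval mode the `running_mean`/`running_var` buffers stay untouched, while γ and β still receive gradients and are stepped by the optimizer. To really freeze the layer you would also have to keep the optimizer away from them, for example with `bn.weight.requires_grad_(False)` and `bn.bias.requires_grad_(False)`.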

This is also confirmed in several replies, e.g.:

What is the meaning of the training attribute? model.train() and model.eval()? - #2 by ptrblck

Does gradients change in Model.eval()? - #2 by ptrblck

What does model.eval() do for batchnorm layer? - #2 by smth

- Problem:

In model.train(), the layer normalizes with the statistics (mean and var) of the current input batch, then scales and shifts the result with the learnable γ and β to move it to the new data distribution.

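In model.eval(), the layer normalizes with the statistics accumulated during training (the running mean and var) instead of the batch statistics, and then applies the trained γ and β. Right?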
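One way to check these two statements is to compare `nn.BatchNorm2d` against a hand-written version of the formula. This is a minimal sketch assuming the default settings (`affine=True`, `track_running_stats=True`, `momentum=0.1`, `eps=1e-5`); the helper `reference` is a hypothetical name used only for this illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

bn = nn.BatchNorm2d(num_features=3)  # defaults: affine=True, track_running_stats=True
with torch.no_grad():
    # Give gamma/beta non-trivial values so the affine step is visible
    bn.weight.uniform_(0.5, 1.5)
    bn.bias.uniform_(-0.5, 0.5)

x = torch.randn(8, 3, 4, 4) * 2.0 + 1.0
shape = (1, -1, 1, 1)  # broadcast per-channel vectors over (N, C, H, W)

def reference(x, mean, var, bn):
    # Hypothetical helper: y = (x - mean) / sqrt(var + eps) * gamma + beta
    x_hat = (x - mean.view(shape)) / torch.sqrt(var.view(shape) + bn.eps)
    return x_hat * bn.weight.view(shape) + bn.bias.view(shape)

# --- train mode: normalize with the statistics of the current batch ---
bn.train()
y_train = bn(x)
batch_mean = x.mean(dim=(0, 2, 3))
# Biased variance (divide by n) is what is used for normalization
batch_var = ((x - batch_mean.view(shape)) ** 2).mean(dim=(0, 2, 3))
train_matches = torch.allclose(y_train, reference(x, batch_mean, batch_var, bn), atol=1e-4)

# Side effect of the train-mode forward: running estimates move toward the batch
# stats with momentum 0.1 (initial running_mean = 0, running_var = 1).
# The running variance is updated with the unbiased batch variance (divide by n - 1).
n = x.numel() / x.size(1)  # elements per channel
expected_running_mean = 0.9 * torch.zeros(3) + 0.1 * batch_mean
expected_running_var = 0.9 * torch.ones(3) + 0.1 * batch_var * n / (n - 1)
running_matches = (torch.allclose(bn.running_mean, expected_running_mean, atol=1e-4)
                   and torch.allclose(bn.running_var, expected_running_var, atol=1e-4))

# --- eval mode: normalize with the running estimates, not the batch ---
bn.eval()
y_eval = bn(x)
eval_matches = torch.allclose(y_eval, reference(x, bn.running_mean, bn.running_var, bn), atol=1e-4)

print("train mode uses batch stats:", train_matches)
print("running stats updated in train mode:", running_matches)
print("eval mode uses running stats:", eval_matches)
```

In this sketch, train mode matches the batch statistics and updates the running estimates as a side effect, while eval mode matches the running estimates; in both modes the same γ and β are applied.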

I’m not sure whether my understanding is correct, so feel free to correct me!