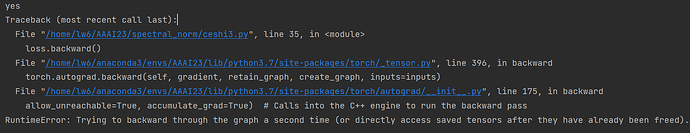

When I tried to use `torch.nn.utils.parametrizations.spectral_norm` on a GRU, it raised the error "Trying to backward through the graph a second time" in the second mini-batch iteration. The problem does not occur when I put the model on the CPU, or when I use the older `torch.nn.utils.spectral_norm` instead. It is really strange.

The code is as follows:

```python
import torch
import torch.nn as nn
from torch.nn.utils.parametrizations import spectral_norm

torch.manual_seed(111)
torch.cuda.manual_seed(111)


class KKK(nn.Module):
    def __init__(self):
        super(KKK, self).__init__()
        # Spectral norm on the input-to-hidden weight of the first GRU layer
        self.encoder = spectral_norm(nn.GRU(input_size=100,
                                            hidden_size=100,
                                            num_layers=1,
                                            batch_first=True,
                                            bidirectional=True), name='weight_ih_l0')
        self.dropout = nn.Dropout(0.2)
        self.fc = spectral_norm(nn.Linear(200, 2))

    def forward(self, inputs):
        outputs, _ = self.encoder(inputs)
        outputs = outputs[:, 0, :]  # GRU output at the first time step
        logits = self.fc(self.dropout(outputs))
        return logits


loss_func = nn.CrossEntropyLoss()
input = torch.randn(3, 10, 100, requires_grad=True).to('cuda:1')
target = torch.empty(3, dtype=torch.long).random_(2).to('cuda:1')
model = KKK().to("cuda:1")
opt = torch.optim.Adam(model.parameters(), lr=0.001)
model.train()

for i in range(10):
    opt.zero_grad()
    lg = model(input)
    loss = loss_func(lg, target)
    loss.backward()  # the error is raised here on the second iteration
    print('yes')
    opt.step()
```
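A possible workaround to try, sketched under an unconfirmed assumption about the cause: `nn.GRU` keeps the tensors it hands to the RNN kernel in a private list, `_flat_weights`, which is rebuilt when the module is moved with `.to("cuda:1")`. With the parametrization registered, that rebuild may evaluate the spectral-normalized weight once and keep the resulting tensor, so the second `backward()` would walk into a graph already freed by the first one. On the CPU no `.to()` call happens, so the cached entry may still be the raw parameter, which would avoid the error but also silently skip the normalization. The sketch below rebuilds the list at the start of every `forward`. `KKKWorkaround` is a hypothetical name, and `_init_flat_weights` is a private `RNNBase` method, not public API, so it may change between releases.

```python
import torch
import torch.nn as nn
from torch.nn.utils.parametrizations import spectral_norm

torch.manual_seed(111)


class KKKWorkaround(nn.Module):
    """Same layers as KKK; only forward() differs (hypothetical name)."""

    def __init__(self):
        super().__init__()
        self.encoder = spectral_norm(
            nn.GRU(input_size=100, hidden_size=100, num_layers=1,
                   batch_first=True, bidirectional=True),
            name="weight_ih_l0",
        )
        self.dropout = nn.Dropout(0.2)
        self.fc = spectral_norm(nn.Linear(200, 2))

    def forward(self, inputs):
        # Assumption: nn.GRU feeds the RNN kernel from the private list
        # `_flat_weights`. Rebuilding it here re-evaluates the spectral-norm
        # parametrization, so each mini-batch gets a fresh weight tensor with
        # its own autograd graph. `_init_flat_weights` is private API.
        self.encoder._init_flat_weights()
        outputs, _ = self.encoder(inputs)
        outputs = outputs[:, 0, :]  # GRU output at the first time step
        return self.fc(self.dropout(outputs))


# Falls back to the CPU so the sketch also runs without a GPU.
device = "cuda" if torch.cuda.is_available() else "cpu"
inputs = torch.randn(3, 10, 100, device=device)
target = torch.randint(0, 2, (3,), device=device)

model = KKKWorkaround().to(device)
loss_func = nn.CrossEntropyLoss()
opt = torch.optim.Adam(model.parameters(), lr=0.001)
model.train()

losses = []
for i in range(10):
    opt.zero_grad()
    loss = loss_func(model(inputs), target)
    loss.backward()
    opt.step()
    losses.append(loss.item())
```

If the assumed cause is right, the loop should complete all ten iterations on the GPU as well. Newer PyTorch releases may already refresh these cached weights inside `GRU.forward`, in which case the extra call is redundant and upgrading is the simpler fix.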