Problem

In my application I ran into a strange bug: my model works well on a single GPU but fails on multiple GPUs with:

RuntimeError: Gather got an input of invalid size: got [24, 10, 448,448], but expected [24, 11, 448,448] (gather at /pytorch/torch/csrc/cuda/comm.cpp:239

My input has size [48, 3, 448, 448] and I use two GPUs, so it makes sense that each GPU receives 24 images. But where do the 10 and 11 channels come from?

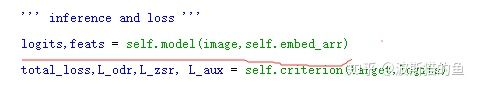

After debugging, I found the cause:

self.embed_arr is an input holding the semantic labels, with size [21, 300].

The number of image feature channels depends on the number of rows of self.embed_arr.
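The post does not show the model, so here is a purely hypothetical sketch of how a label-embedding input can set the channel count: image features are projected into the 300-dim embedding space and scored against each of the K label rows, giving one output channel per label. The class name `LabelAttentionHead`, the 1x1 convolution, and the einsum are assumptions for illustration, not the original architecture.

```python
import torch
import torch.nn as nn


class LabelAttentionHead(nn.Module):
    """Hypothetical head: one output channel per row of embed_arr."""

    def __init__(self, in_channels: int, embed_dim: int) -> None:
        super().__init__()
        # Project image features into the label-embedding space.
        self.proj = nn.Conv2d(in_channels, embed_dim, kernel_size=1)

    def forward(self, feats: torch.Tensor, embed_arr: torch.Tensor) -> torch.Tensor:
        # feats: [B, C, H, W], embed_arr: [K, D]
        x = self.proj(feats)  # [B, D, H, W]
        # Score every pixel against every label, giving [B, K, H, W].
        return torch.einsum("bdhw,kd->bkhw", x, embed_arr)


head = LabelAttentionHead(in_channels=3, embed_dim=300)
images = torch.randn(4, 3, 8, 8)   # small stand-in for [48, 3, 448, 448]
embed_arr = torch.randn(21, 300)   # 21 labels, 300-dim embeddings
out = head(images, embed_arr)
print(out.shape)  # torch.Size([4, 21, 8, 8])
```

Passing only 10 rows of `embed_arr` to the same head would yield 10 output channels, which is exactly the kind of per-replica mismatch described in the error.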

On a single GPU, every image sees the same full self.embed_arr, so all outputs have the same number of channels.

With multiple GPUs, however, self.embed_arr is split along its first dimension just like the images, e.g. into [10, 300] and [11, 300]. The replicas therefore produce different numbers of channels, and gathering the features fails.
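The split sizes can be reproduced on CPU. As far as I can tell, when no explicit chunk sizes are given, `nn.DataParallel` splits each tensor argument along dim 0 the way `torch.chunk` does, so this sketch uses `torch.chunk` as a stand-in for the scatter step and `torch.cat` along dim 0 as a stand-in for the gather. The spatial size is shrunk to keep it light, and the zero "outputs" are placeholders for whatever each replica computes.

```python
import torch

num_gpus = 2
images = torch.randn(48, 3, 4, 4)   # stand-in for [48, 3, 448, 448]
embed_arr = torch.randn(21, 300)

# Scatter stand-in: every tensor argument is chunked along dim 0.
image_chunks = torch.chunk(images, num_gpus, dim=0)
embed_chunks = torch.chunk(embed_arr, num_gpus, dim=0)
print([c.shape[0] for c in image_chunks])  # [24, 24]
print([c.shape[0] for c in embed_chunks])  # [11, 10]

# Placeholder outputs: one channel per embedding row each replica received.
outputs = [torch.zeros(img.shape[0], emb.shape[0], 4, 4)
           for img, emb in zip(image_chunks, embed_chunks)]

# Gather stand-in: concatenating along dim 0 requires all other dims to match.
try:
    torch.cat(outputs, dim=0)
except RuntimeError as err:
    print("gather fails:", type(err).__name__)  # gather fails: RuntimeError
```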

(If self.embed_arr had size [20, 300], no error would appear at all: each GPU would silently receive only 10 of the 20 labels, and I might have blamed the poor performance on my algorithm, which would be terrible!)
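The silent case can be sketched the same way, again using `torch.chunk` and `torch.cat` as stand-ins for DataParallel's scatter and gather (an assumption about its default behavior). With 20 rows the chunks are equal, so the gather succeeds, but the result has 10 channels instead of 20 and each replica only ever saw its own half of the labels.

```python
import torch

num_gpus = 2
# Row i is filled with the value i, so each label is easy to identify.
embed_arr = torch.arange(20.0).unsqueeze(1).repeat(1, 300)

embed_chunks = torch.chunk(embed_arr, num_gpus, dim=0)
print([c.shape[0] for c in embed_chunks])  # [10, 10]

# Equal chunk sizes mean the placeholder outputs gather without error...
outputs = [torch.zeros(24, c.shape[0], 4, 4) for c in embed_chunks]
gathered = torch.cat(outputs, dim=0)
print(gathered.shape)  # torch.Size([48, 10, 4, 4])

# ...but the second replica only saw labels 10..19, never labels 0..9.
second_labels = embed_chunks[1][:, 0].tolist()
```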

Solution

One workaround is to duplicate the input so that, after scattering, every GPU receives the same full copy:

self.embed_arr = self.embed_arr.repeat(torch.cuda.device_count(), 1)
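To see why the repeat works: repeating the [21, 300] tensor once per GPU gives [21 * n, 300], which `torch.chunk` (standing in for the scatter again) splits back into n identical [21, 300] copies. This sketch assumes DataParallel uses every visible GPU; if `device_ids` is restricted, the repeat count would need to be `len(device_ids)` instead.

```python
import torch

num_gpus = 2  # stands in for torch.cuda.device_count()
embed_arr = torch.randn(21, 300)

# Repeat once per GPU along dim 0. Do this once, not on every forward pass,
# otherwise reassigning the result keeps growing the tensor.
repeated = embed_arr.repeat(num_gpus, 1)
print(repeated.shape)  # torch.Size([42, 300])

# Scatter stand-in: each chunk is a full copy of the original 21 labels.
chunks = torch.chunk(repeated, num_gpus, dim=0)
print([tuple(c.shape) for c in chunks])  # [(21, 300), (21, 300)]
print(all(torch.equal(c, embed_arr) for c in chunks))  # True
```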

Suggestion

So I suggest that PyTorch add a way to control which inputs are scattered across GPUs. Or is there a better solution?
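One alternative worth trying, assuming self.embed_arr is a fixed tensor rather than something that changes per batch: store it on the model with `register_buffer` instead of passing it to `forward`. `nn.DataParallel` scatters only the forward arguments, while parameters and buffers are replicated to every GPU, so each replica sees all 21 rows. The `LabelModel` class and its einsum head are hypothetical stand-ins for the real model.

```python
import torch
import torch.nn as nn


class LabelModel(nn.Module):
    """Hypothetical model that keeps the label embeddings as a buffer."""

    def __init__(self, embed_arr: torch.Tensor, in_channels: int = 3) -> None:
        super().__init__()
        # Buffers are copied to every DataParallel replica, not split.
        self.register_buffer("embed_arr", embed_arr)
        self.proj = nn.Conv2d(in_channels, embed_arr.shape[1], kernel_size=1)

    def forward(self, images: torch.Tensor) -> torch.Tensor:
        x = self.proj(images)  # [B, D, H, W]
        # One output channel per label: [B, K, H, W].
        return torch.einsum("bdhw,kd->bkhw", x, self.embed_arr)


model = LabelModel(torch.randn(21, 300))
if torch.cuda.device_count() > 1:
    # Only the images are scattered; embed_arr travels with the replicas.
    model = nn.DataParallel(model.cuda())
device = next(model.parameters()).device
out = model(torch.randn(4, 3, 8, 8, device=device))
print(out.shape)  # torch.Size([4, 21, 8, 8])
```

On a single device the model runs unwrapped; with several GPUs the batch is split but every replica still outputs 21 channels, so the gather shapes agree.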