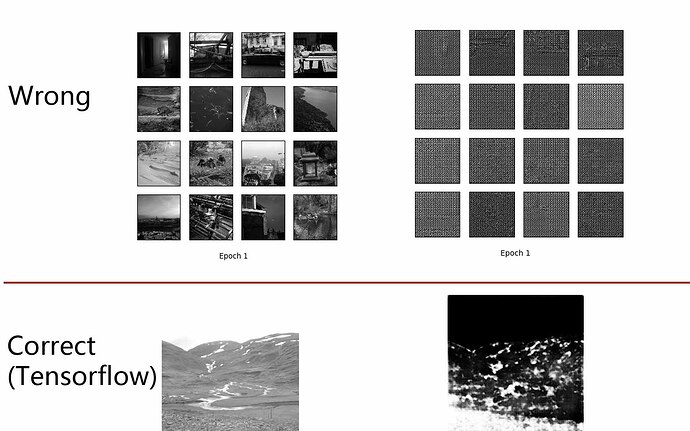

Just as the result shows, they consists of grain noises.

This is a task of generating “probability map” using a GAN with a U-Net style generator. In a sense, This task can be explained as using the U-Net structured generator of GAN to generate segmentation results.

Actually, the output is right when I run the tensorflow version that posted by the author of the paper: [An Embedding Cost Learning Framework Using GAN | IEEE Journals & Magazine | IEEE Xplore](An Embedding Cost Learning Framework Using GAN. And here is his tensorflow code on github:https://github.com/JianhuaYang001/spatial-image-steganography

The upper column are the inputs and my incorrect outputs; And the lower column are the inputs and its corresponding output(correct) by running the tensorflow code:

Here is the U-net based generator:

class Generator_Unet(nn.Module):

def __init__(self, input_nc=1, out_nc=1):

super(Generator_Unet, self).__init__()

self.input_nc = input_nc

self.out_nc = out_nc

self.inc = Down(self.input_nc, 16, inc=True)

self.down1 = Down(16, 32)

self.down2 = Down(32, 64)

self.down3 = Down(64, 128)

self.down4 = Down(128, 128)

self.down5 = Down(128, 128)

self.down6 = Down(128, 128)

self.down7 = Down(128, 128)

self.up1 = Up(128, 256, self.bil, dropout=True)

self.up2 = Up(256, 256, self.bil, dropout=True)

self.up3 = Up(256, 256, self.bil, dropout=True)

self.up4 = Up(256, 256, self.bil)

self.up5 = Up(256, 128, self.bil)

self.up6 = Up(128, 64, self.bil)

self.up7 = Up(64, 32, self.bil)

self.outc = OutConv(32, self.out_nc)

def forward(self, input):

x1 = self.inc(input)#(B, 16, 128, 128)

x2 = self.down1(x1)#(B, 32, 64, 64)

x3 = self.down2(x2)#(B, 64, 32, 32)

x4 = self.down3(x3)#(B, 128, 16, 16)

x5 = self.down4(x4)#(B, 128, 8, 8)

x6 = self.down5(x5)#(B, 128, 4, 4)

x7 = self.down6(x6)#(B, 128, 2, 2)

x8 = self.down7(x7)#(B, 128, 1, 1)

x = self.up1(x8, x7)#(B, 256, 2, 2)

x = self.up2(x, x6)#(B, 256, 4, 4)

x = self.up3(x, x5)#(B, 256, 8, 8)

x = self.up4(x, x4)#(B, 256, 16, 16)

x = self.up5(x, x3)#(B, 128, 32, 32)

x = self.up6(x, x2)#(B, 64, 64, 64)

x = self.up7(x, x1)#(B, 32, 128, 128)

prob = self.outc(x)#(B, 1, 256, 256)

return prob

Here is the modules of it:

class LRelu(nn.Module):

def __init__(self, alpha=0.2):

super(LRelu, self).__init__()

self.alpha=alpha

def forward(self, input):

output = F.relu_(input) - self.alpha*F.relu_(-input)

return output

class DownConv(nn.Module):

def __init__(self, in_channels, out_channels, mid_channels=None, kernel_size=3, relu=False, lrelu=False):

super(DownConv, self).__init__()

if not mid_channels:

mid_channels = out_channels

ker_size = kernel_size

if relu:

self.down_conv = nn.Sequential(

nn.ReLU(inplace=True),

nn.Conv2d(in_channels, mid_channels, ker_size, stride=2, padding=1),

nn.BatchNorm2d(mid_channels),

)

elif lrelu:

self.down_conv = nn.Sequential(

LRelu(),

nn.Conv2d(in_channels, mid_channels, ker_size, stride=2, padding=1),

nn.BatchNorm2d(mid_channels),

)

else:

self.down_conv = nn.Sequential(

nn.Conv2d(in_channels, mid_channels, ker_size, stride=2, padding=1),

nn.BatchNorm2d(mid_channels),

)

def forward(self, x):

return self.down_conv(x)

class Down(nn.Module):

def __init__(self, in_channels, out_channels, lrelu=True, inc=False):

super(Down, self).__init__()

if inc:

self.conv_block = nn.Sequential(

DownConv(in_channels, out_channels, lrelu=False, relu=False),

)

elif lrelu:

self.conv_block = nn.Sequential(

DownConv(in_channels, out_channels, lrelu=True),

)

else:

self.conv_block = nn.Sequential(

DownConv(in_channels, out_channels, relu=True),

)

def forward(self, x):

return self.conv_block(x)

class Up(nn.Module):

def __init__(self, in_channels, out_channels, bilinear=True, dropout=False):

super(Up, self).__init__()

self.up = nn.Sequential(

nn.ReLU(inplace=True),

nn.ConvTranspose2d(in_channels , out_channels//2, kernel_size=4, stride=2, padding=1, ),

)

self.dp_fg = dropout

self.bn = nn.BatchNorm2d(out_channels//2)

def forward(self, x1, x2):

x1 = self.up(x1)

x1 = self.bn(x1)

if self.dp_fg:

x1 = F.dropout(x1, p=0.5, training=True)

# input is CHW

diffY = x2.size()[2] - x1.size()[2]

diffX = x2.size()[3] - x1.size()[3]

x1 = F.pad(x1, [diffX // 2, diffX - diffX // 2,

diffY // 2, diffY - diffY // 2])

x = torch.cat([x1, x2], dim=1)

return x

Here is the main training file:

def weights_init(net):

for m in net.modules():

if isinstance(m, nn.Conv2d):

nn.init.normal_(m.weight.data, 0.0, 0.02)

elif isinstance(m, nn.ConvTranspose2d):

nn.init.normal_(m.weight.data, 0.0, 0.02)

elif isinstance(m, nn.BatchNorm2d):

nn.init.normal_(m.weight.data, 1.0, 0.02)

m.bias.data.zero_()

class ASGAN:

def __init__(self, device, img_nc=1, lr=0.0001, payld=0.4, bilinear=False):

self.device = device

self.img_nc = img_nc

self.lr = lr

self.payld = payld

self.bilinear = bilinear

self.netG = Generator_Unet(self.img_nc, bilinear=self.bilinear).to(device)

self.netDisc = Discriminator_steg(self.img_nc).to(device)

# initialize all weights

self.netG.apply(weights_init)

self.netDisc.apply(weights_init)

# initialize optimizers

self.optimizer_G = torch.optim.Adam(self.netG.parameters(), self.lr)

self.optimizer_D = torch.optim.Adam(self.netDisc.parameters(), self.lr)

def train_batch(self, cover, TANH_LAMBDA=60, D_LAMBDA=1, Payld_LAMBDA=1e-7):

# optimize D

for i in range(1):

prob_pred = self.netG(Variable(cover)).cuda()

data_noise = np.random.rand(prob_pred.shape[0], prob_pred.shape[1], prob_pred.shape[2], prob_pred.shape[3])

tensor_noise = torch.from_numpy(data_noise).float().to(self.device)

modi_map = 0.5*(torch.tanh((prob_pred+2.*tensor_noise-2)*TANH_LAMBDA) - torch.tanh((prob_pred-2.*tensor_noise)*TANH_LAMBDA))

stego = (cover*255 + modi_map)/255.

data = torch.stack((cover, stego)) ###detach

data_shape = list(data.size())

data = data.reshape(data_shape[0] * data_shape[1], *data_shape[2:])

data_group = data.cuda()

label_zeros = np.zeros(cover.shape[0])

label_ones = np.ones(cover.shape[0])

label = np.stack((label_zeros, label_ones))

label = torch.from_numpy(label).long()

label = Variable(label).cuda()

label_group = label.view(-1)

self.optimizer_D.zero_grad()

pred_D = self.netDisc(data_group.detach())

criterion = torch.nn.CrossEntropyLoss().cuda()

loss_D = criterion(pred_D, label_group)

loss_D.backward(retain_graph=True)

self.optimizer_D.step()

# optimize G

for i in range(1):

img_size = cover.shape[2]

batch_size = cover.shape[0]

self.optimizer_G.zero_grad()

prob_chanP = prob_pred / 2.0 + 1e-5

prob_chanM = prob_pred / 2.0 + 1e-5

prob_unchan = 1 - prob_pred + 1e-5

cap_entropy = torch.sum( (-prob_chanP * torch.log2(prob_chanP)

-prob_chanM * torch.log2(prob_chanM)

-prob_unchan * torch.log2(prob_unchan) ),

dim=(1,2,3)

)

payld_gen = torch.sum((cap_entropy), dim=0) / (img_size * img_size * batch_size)

cap = img_size * img_size * self.payld

loss_entropy = torch.mean(torch.pow(cap_entropy - cap, 2), dim=0)

loss_G = D_LAMBDA * (-loss_D) + Payld_LAMBDA * loss_entropy

loss_G.backward()

self.optimizer_G.step()

return loss_D.data[0], loss_G.data[0]

def train(self, train_dataloader, epochs):

data_iter = iter(train_dataloader)

sample_batch = data_iter.next()

data_fixed = sample_batch['img'][0:]

data_fixed = Variable(data_fixed.cuda())

noise_fixed = torch.randn((data_fixed.shape[0], data_fixed.shape[1], data_fixed.shape[2], data_fixed.shape[3]))

noise_fixed = Variable(noise_fixed.cuda())

for epoch in range(1, epochs+1):

loss_D_sum = 0

loss_G_sum = 0

for i, data in enumerate(train_dataloader, start=0):

images = data['img']

images = images.to(self.device)

loss_D_batch, loss_G_batch = self.train_batch(images)

loss_D_sum += loss_D_batch

loss_G_sum += loss_G_batch

# print statistics

num_batch = len(train_dataloader)

print("epoch %d:\nloss_D: %.6f, loss_G: %.6f" %

(epoch, loss_D_sum/num_batch, loss_G_sum/num_batch))

# save generator

if epoch%10==0:

netG_file_name = models_path + 'netG_epoch_' + str(epoch) + '.pth'

torch.save(self.netG.state_dict(), netG_file_name)

self.netG.eval()

modi_map_fixed = 0.5*(torch.tanh((self.netG(data_fixed)+2.*noise_fixed-2)*60) - torch.tanh((self.netG(data_fixed)-2.*noise_fixed)*60))

stego_fixed = (data_fixed*255 + modi_map_fixed)/255.

show_result(epoch, self.netG(data_fixed), save=True, path=img_prob_train_path+str(epoch)+'prob.png')

show_result(epoch, stego_fixed, save=True, path=img_prob_train_path+str(epoch)+'steg.png')

show_result(epoch, modi_map_fixed, save=True, path=img_prob_train_path+str(epoch)+'modi.png')

show_result(epoch, data_fixed, save=True, path=img_prob_train_path+str(epoch)+'cover.png')

self.netG.train()

I am so confusing since I debug for 2 days but still can not get the correct-style outputs.

Hope to get some selfless help, much appreciated!