I followed the tutorial and used the same code, but I ran into a problem:

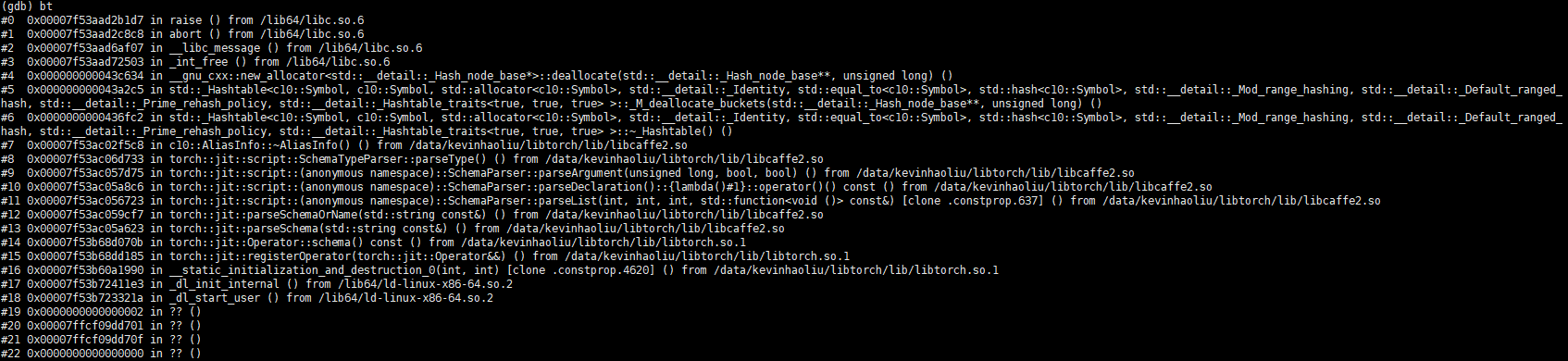

Could you post the complete stack trace, if you have it?

You are apparently trying to free something which isn’t pointing to a “freeable” memory address.

But I'm only running the same code as in the tutorial.

I updated g++ from 4.8.5 to 4.9.2 and it works.

I updated g++ too, but I still have the same problem.

What’s your g++ version?

gcc version 4.9.2 20150212 (Red Hat 4.9.2-6) (GCC)

Sorry, I recompiled the project and the free() problem is gone. Now I get a different error:

```
operation failed in interpreter:
op_version_set = 0
def forward(self,
    input: Tensor) -> Tensor:
  if bool(torch.gt(torch.sum(input), 0)):
    output = torch.mv(self.weight, input)
             ~~~~~~~~ <--- HERE
  else:
    output_2 = torch.add(self.weight, input, alpha=1)
    output = output_2
  return output

Aborted (core dumped)
```
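Why the torch.mv branch is reached at all: the scripted forward() only calls torch.mv when the sum of the input is positive, and the tutorial's C++ input, torch::ones({1, 3, 224, 224}), sums to its element count. A small pure-Python sketch of that arithmetic (no PyTorch involved; `ones_sum` and `branch_taken` are hypothetical helpers that just mirror the listing, and `math.prod` needs Python 3.8+):

```python
import math


def ones_sum(shape):
    """Sum of a tensor filled with ones: simply its element count."""
    return math.prod(shape)


def branch_taken(shape):
    """Mirror the scripted forward(): torch.mv when sum(input) > 0, else torch.add."""
    return "mv" if ones_sum(shape) > 0 else "add"


shape = (1, 3, 224, 224)  # the input built in the tutorial's C++ code
print(ones_sum(shape))      # 150528
print(branch_taken(shape))  # mv
```

So with the tutorial's input the mv branch always runs, and what matters is whether torch.mv can accept a tensor of that shape.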

Could you please show me your C++ code?

I'm using the tutorial code:

```cpp
#include <torch/script.h> // One-stop header.

#include <cassert>
#include <iostream>
#include <memory>
#include <vector>

int main(int argc, const char* argv[]) {
  if (argc != 2) {
    std::cerr << "usage: example-app <path-to-exported-script-module>\n";
    return -1;
  }

  // Deserialize the ScriptModule from a file using torch::jit::load().
  std::shared_ptr<torch::jit::script::Module> module = torch::jit::load(argv[1]);

  assert(module != nullptr);
  std::cout << "ok\n";

  // Create a vector of inputs.
  std::vector<torch::jit::IValue> inputs;
  inputs.push_back(torch::ones({1, 3, 224, 224}));

  // Execute the model and turn its output into a tensor.
  at::Tensor output = module->forward(inputs).toTensor();

  // Print the first five values along dimension 1.
  std::cout << output.slice(/*dim=*/1, /*start=*/0, /*end=*/5) << '\n';
}
```

And I used this code to create model.pt:

```python
import torch
import torchvision

# An instance of your model.
model = torchvision.models.resnet18()

# An example input you would normally provide to your model's forward() method.
example = torch.rand(1, 3, 224, 224)
print(example)

# Use torch.jit.trace to generate a torch.jit.ScriptModule via tracing.
traced_script_module = torch.jit.trace(model, example)
traced_script_module.save("model.pt")
```

Thank you. I'm on version 1.1.0, but I still have the same problem with the torch.mv function:

```
terminate called after throwing an instance of 'std::runtime_error'
  what():
mv: Expected 1-D argument vec, but got 4-D (check_1d at /pytorch/aten/src/ATen/native/LinearAlgebra.cpp:143)
frame #0: std::function<std::string ()>::operator()() const + 0x11 (0x7f30c5a46441 in /home/pixur/Documents/Zaynab/TestLibtorch/libtorch/lib/libc10.so)
```
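The message states the rule: torch.mv(mat, vec) multiplies a 2-D matrix by a 1-D vector, and the C++ example passes a 4-D tensor of shape {1, 3, 224, 224}. Below is a rough pure-Python imitation of that shape check. `check_mv_shapes` is hypothetical (it is not ATen's code), its messages only imitate the one above, and the (10, 20) weight shape is an assumption standing in for whatever weight the scripted module actually holds.

```python
def check_mv_shapes(mat_shape, vec_shape):
    """Hypothetical check imitating torch.mv's shape rules (not ATen's code).

    Returns the output shape (rows,) when the shapes are compatible,
    otherwise raises ValueError.
    """
    if len(mat_shape) != 2:
        raise ValueError(f"mv: Expected 2-D argument mat, but got {len(mat_shape)}-D")
    if len(vec_shape) != 1:
        raise ValueError(f"mv: Expected 1-D argument vec, but got {len(vec_shape)}-D")
    rows, cols = mat_shape
    if cols != vec_shape[0]:
        # Illustrative wording only.
        raise ValueError(f"mv: size mismatch, mat has {cols} columns but vec has {vec_shape[0]} elements")
    return (rows,)


weight_shape = (10, 20)  # assumed weight shape of the scripted module

try:
    check_mv_shapes(weight_shape, (1, 3, 224, 224))  # the C++ example's input
except ValueError as err:
    print(err)  # mv: Expected 1-D argument vec, but got 4-D

print(check_mv_shapes(weight_shape, (20,)))  # (10,)
```

The traced resnet18 from the script above takes a 4-D image batch directly, so a model.pt whose forward() calls torch.mv on its input is a sign that a different file is being loaded.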

I didn't make any change to the code; I just followed the tutorial.

I was using the wrong model.pt. It's working now. Thank you!
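Since the root cause was loading the wrong model.pt, it can help to check what a file contains before loading it in C++. As far as I know, TorchScript files written by save() are zip archives, so Python's standard zipfile module can open them without PyTorch. The exact entry names differ between PyTorch versions, so this sketch only assumes that serialized code sits under a code/ directory somewhere in the archive; `list_code_entries` and `read_entry_text` are hypothetical helpers.

```python
import zipfile


def list_code_entries(path):
    """Return archive entries under a 'code/' directory (assumed layout)."""
    with zipfile.ZipFile(path) as archive:
        return [name for name in archive.namelist() if "code/" in name]


def read_entry_text(path, name):
    """Read one entry as text; undecodable bytes are replaced instead of raising."""
    with zipfile.ZipFile(path) as archive:
        return archive.read(name).decode("utf-8", errors="replace")


# Usage (path is an example):
#   for name in list_code_entries("model.pt"):
#       print(name)
#       print(read_entry_text("model.pt", name))
```

If the printed code contains torch.mv(self.weight, input), the file holds a small scripted module rather than the traced resnet18.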