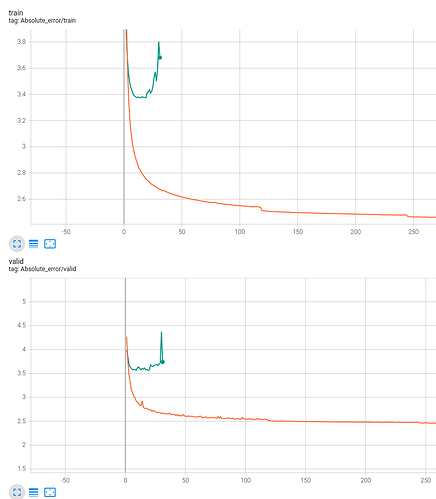

I trained a VAE on a dataset containing 1k images. The VAE is convolutional, downsamples 256x256 RGB images four times before reconstruction, and uses both ReLU and BatchNorm layers as well as ResNet-like skip connections before and after the bottleneck. Input is normalized to unit scale and no data augmentation takes place. The model has roughly 5e6 parameters in total, and on visual inspection the reconstructed images look decent enough. This run is represented by the orange curve in both the training and validation plots. No overfitting occurs as far as I can tell.

However, if I use the entire dataset, i.e. ~35k training images and 5k validation images, the same model performs as shown by the green curve. Taking into account only the lower (validation) plot, I would have said this is overfitting, although it would strike me as odd that the same model that performed decently on the 1k-image dataset now overfits on a much larger one. But the training loss also shows a clear inflection point around epoch 15.
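To put the architecture in numbers, here is a minimal sketch of the feature-map size at the bottleneck. It assumes each of the four downsampling stages halves the spatial resolution (a factor of 2 per stage is my assumption for illustration, and `downsampled_size` is just a hypothetical helper, not part of the model code).

```python
def downsampled_size(size: int, num_stages: int, factor: int = 2) -> int:
    """Spatial size after repeatedly dividing by `factor` (assumed 2 per stage)."""
    for _ in range(num_stages):
        size //= factor
    return size


INPUT_SIZE = 256      # 256x256 RGB input, as described above
NUM_DOWNSAMPLES = 4   # four downsampling stages before the bottleneck

bottleneck = downsampled_size(INPUT_SIZE, NUM_DOWNSAMPLES)
input_values = 3 * INPUT_SIZE * INPUT_SIZE  # channels * height * width

print(f"bottleneck feature map: {bottleneck}x{bottleneck}")
print(f"values per input image: {input_values}")
```

Under that assumption the bottleneck operates on a 16x16 feature map; the number of channels at that point is not specified here.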

Can somebody tell me a likely source of error here? Is the model's capacity not large enough to capture the complexity of the data? I don't think so, since I am using a state-of-the-art convolutional VAE used by OpenAI for a dataset comprising millions of images.

Any help would be appreciated. Thank you in advance.