Hello everyone,

The question is more about deep learning than about PyTorch specifically.

What is the best way to build a many-to-many time-series model for numerical sequences of constant length, e.g. vehicle trajectories?

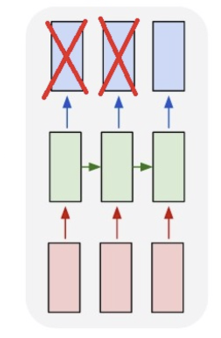

If I take the last timestep of the encoder, like this…

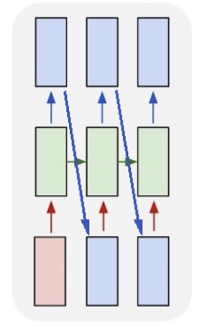
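For reference, a minimal PyTorch sketch of that encoder step, assuming an `nn.LSTM` encoder with `batch_first=True` and inputs shaped `(batch, past_len, n_features)`. The class name `Encoder` and all sizes are hypothetical, chosen only for illustration.

```python
import torch
import torch.nn as nn

class Encoder(nn.Module):
    """Hypothetical LSTM encoder that summarizes a past trajectory."""

    def __init__(self, n_features: int = 2, hidden_size: int = 64, num_layers: int = 1):
        super().__init__()
        self.lstm = nn.LSTM(n_features, hidden_size, num_layers, batch_first=True)

    def forward(self, x: torch.Tensor):
        # x: (batch, past_len, n_features)
        outputs, (h_n, c_n) = self.lstm(x)
        # outputs: (batch, past_len, hidden_size); keep only the last timestep
        last = outputs[:, -1, :]
        # For a unidirectional LSTM this equals the top layer's final hidden state h_n[-1]
        return last, (h_n, c_n)

enc = Encoder()
past = torch.randn(8, 20, 2)  # 8 trajectories, 20 past steps, (x, y) per step
last, (h_n, c_n) = enc(past)
print(last.shape)  # torch.Size([8, 64])
```

Since `outputs[:, -1, :]` and `h_n[-1]` hold the same values for a unidirectional LSTM, either can serve as the fixed-size summary passed to the decoder.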

…what would be the best way to generate a sequence of multiple timesteps, using the last hidden state as the new input? I’ve seen different versions, like this one…

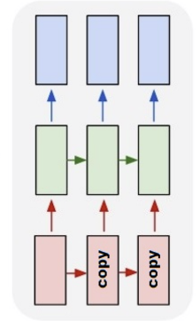
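One widely used way to turn that single vector into a sequence (not necessarily the exact layout of image 3, which isn't reproduced here) is to repeat it as the input at every future step, unroll a second LSTM over it, and apply a linear layer per step. A sketch under that assumption; `RepeatDecoder`, `horizon` and the sizes are hypothetical.

```python
import torch
import torch.nn as nn

class RepeatDecoder(nn.Module):
    """Hypothetical decoder: the encoder's last hidden state is the input at every future step."""

    def __init__(self, hidden_size: int = 64, n_out: int = 2, horizon: int = 30):
        super().__init__()
        self.horizon = horizon
        self.lstm = nn.LSTM(hidden_size, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, n_out)

    def forward(self, context: torch.Tensor) -> torch.Tensor:
        # context: (batch, hidden_size) -> (batch, horizon, hidden_size)
        repeated = context.unsqueeze(1).repeat(1, self.horizon, 1)
        out, _ = self.lstm(repeated)
        # nn.Linear acts on the last dimension, i.e. independently at every timestep
        return self.head(out)  # (batch, horizon, n_out)

context = torch.randn(8, 64)  # e.g. the last encoder timestep
dec = RepeatDecoder()
pred = dec(context)
print(pred.shape)  # torch.Size([8, 30, 2])
```

This variant never sees its own predictions, so it is simple to train, but every future step is driven by the same input vector.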

and this one…

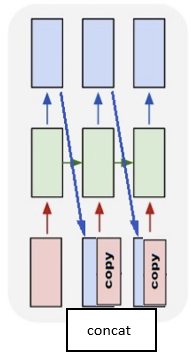
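Another common variant (again, not claimed to match image 4) is an autoregressive decoder: initialize it with the encoder's final `(h, c)`, start from the last observed position, and feed each prediction back as the next input. A sketch assuming `nn.LSTMCell` and matching hidden sizes in encoder and decoder; `AutoregressiveDecoder` and the sizes are hypothetical.

```python
import torch
import torch.nn as nn

class AutoregressiveDecoder(nn.Module):
    """Hypothetical decoder that feeds each prediction back as the next input."""

    def __init__(self, n_features: int = 2, hidden_size: int = 64, horizon: int = 30):
        super().__init__()
        self.horizon = horizon
        self.cell = nn.LSTMCell(n_features, hidden_size)
        self.head = nn.Linear(hidden_size, n_features)

    def forward(self, last_obs: torch.Tensor, h: torch.Tensor, c: torch.Tensor) -> torch.Tensor:
        # last_obs: (batch, n_features); h, c: (batch, hidden_size), taken from the encoder
        inp = last_obs
        preds = []
        for _ in range(self.horizon):
            h, c = self.cell(inp, (h, c))
            y = self.head(h)   # prediction for this future step
            preds.append(y)
            inp = y            # the prediction becomes the next input
        return torch.stack(preds, dim=1)  # (batch, horizon, n_features)

# Hypothetical encoder with a matching hidden size (64)
encoder = nn.LSTM(2, 64, batch_first=True)
past = torch.randn(8, 20, 2)
_, (h_n, c_n) = encoder(past)
dec = AutoregressiveDecoder()
pred = dec(past[:, -1, :], h_n[-1], c_n[-1])  # start from the last observed position
print(pred.shape)  # torch.Size([8, 30, 2])
```

During training, a common tweak is teacher forcing, i.e. feeding the ground-truth position instead of `y` for some steps, since pure feedback of early (poor) predictions can make training slow.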

Or a combination of the two, where you concatenate the last timestep of the encoder output with every timestep of the decoder output…
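The concatenation idea can be sketched as follows, assuming a repeat-context LSTM decoder whose per-step outputs are concatenated with the encoder's last timestep before the output layer. `ConcatDecoder` and the sizes are hypothetical.

```python
import torch
import torch.nn as nn

class ConcatDecoder(nn.Module):
    """Hypothetical decoder: each decoder output is concatenated with the encoder's last output."""

    def __init__(self, hidden_size: int = 64, n_out: int = 2, horizon: int = 30):
        super().__init__()
        self.horizon = horizon
        self.lstm = nn.LSTM(hidden_size, hidden_size, batch_first=True)
        # The output layer sees [decoder output, encoder context] at every step
        self.head = nn.Linear(2 * hidden_size, n_out)

    def forward(self, context: torch.Tensor) -> torch.Tensor:
        # context: (batch, hidden_size), the encoder's last timestep
        ctx_seq = context.unsqueeze(1).repeat(1, self.horizon, 1)  # (batch, horizon, hidden)
        dec_out, _ = self.lstm(ctx_seq)                            # (batch, horizon, hidden)
        combined = torch.cat([dec_out, ctx_seq], dim=-1)           # (batch, horizon, 2 * hidden)
        return self.head(combined)                                 # (batch, horizon, n_out)

context = torch.randn(8, 64)
dec = ConcatDecoder()
pred = dec(context)
print(pred.shape)  # torch.Size([8, 30, 2])
```

The concatenation behaves like a skip connection: the output layer keeps direct access to the encoder summary at every step, even if the decoder state drifts over a long horizon.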

Feel free to suggest a different / better way.

Also, which is the better choice for labels?

- The absolute future values

- The change per timestep of the future values → integrate (cumulatively sum) the model output to get the prediction
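To make the second option concrete, here is a minimal sketch of converting absolute future positions into per-step changes and integrating them back with a cumulative sum. It assumes the first change is measured from the last observed position; the helper names `to_deltas` and `from_deltas` are hypothetical.

```python
import torch

def to_deltas(last_obs: torch.Tensor, future: torch.Tensor) -> torch.Tensor:
    """Per-step changes; the first step is measured from the last observed position."""
    # last_obs: (batch, n_features); future: (batch, horizon, n_features)
    prev = torch.cat([last_obs.unsqueeze(1), future[:, :-1, :]], dim=1)
    return future - prev

def from_deltas(last_obs: torch.Tensor, deltas: torch.Tensor) -> torch.Tensor:
    """Integrate (cumulatively sum) per-step changes back to absolute positions."""
    return last_obs.unsqueeze(1) + torch.cumsum(deltas, dim=1)

last_obs = torch.tensor([[0.0, 0.0]])
future = torch.tensor([[[1.0, 0.5], [2.0, 1.0], [3.5, 1.0]]])
d = to_deltas(last_obs, future)   # values [[1.0, 0.5], [1.0, 0.5], [1.5, 0.0]]
rec = from_deltas(last_obs, d)
assert torch.allclose(rec, future)
```

One trade-off to keep in mind: with delta labels, an error at an early step is carried into every later position by the cumulative sum, whereas absolute labels penalize each position independently.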

I’m working on all of these variations right now. I was just curious whether there is already a “go-to” solution for my problem.

Thanks in advance,

Arthur