Creating a LongTensor with at most 74240 elements yields a tensor filled with random long int noise, while anything above 74240 elements yields a tensor filled with 0s. Any idea why this is?

```
Python 3.6.5 | packaged by conda-forge | (default, Apr 6 2018, 13:44:09)
Type 'copyright', 'credits' or 'license' for more information
IPython 6.4.0 -- An enhanced Interactive Python. Type '?' for help.
```

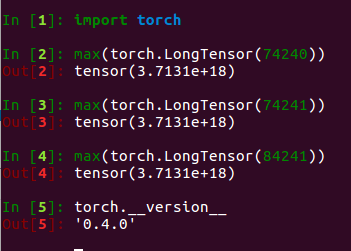

```
In [1]: import torch

In [2]: max(torch.LongTensor(74240))
tensor(9222708909033422584)

In [3]: max(torch.LongTensor(74241))
tensor(0)
```
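For comparison, here is a minimal sketch. It assumes the size-dependent contents come from the constructor not initializing its storage: the PyTorch docs describe `torch.empty` as returning uninitialized data, and `torch.LongTensor(n)` with a size argument allocates memory the same way. The helper names `max_of_uninitialized` and `max_of_zeros` are hypothetical. One plausible explanation, not confirmed here, is that larger allocations get fresh pages from the operating system, which arrive zeroed, while smaller ones may reuse previously freed memory. The values printed for the uninitialized case depend on the allocator and can change between runs.

```python
import torch


def max_of_uninitialized(n: int) -> int:
    # torch.empty allocates storage without filling it, so the contents
    # are whatever bytes the memory allocator happens to hand back.
    t = torch.empty(n, dtype=torch.long)
    return int(t.max())


def max_of_zeros(n: int) -> int:
    # torch.zeros explicitly writes 0 to every element, so the result
    # is deterministic regardless of the tensor size.
    t = torch.zeros(n, dtype=torch.long)
    return int(t.max())


for n in (74240, 74241):
    # The first value may be noise or 0 depending on the allocator;
    # the second value is always 0.
    print(n, max_of_uninitialized(n), max_of_zeros(n))
```

If the contents must be defined, explicitly initializing (e.g. with `torch.zeros`) avoids depending on allocator behavior. `t.max()` also avoids the per-element Python iteration done by the builtin `max()`.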