My code works when I disable torch.cuda.amp.autocast.

But when I enable torch.cuda.amp.autocast, I found that the normalization step overflows (float16):

x = x - x.mean(), where x.shape = [5665, 48] and x.mean() returns nan.

Is there any way to solve this problem?

I cannot reproduce the issue with a tensor in a similar range:

```python
import torch

x = torch.randn(5665, 48, device='cuda', dtype=torch.float16) * 100.
print(x)

with torch.cuda.amp.autocast():
    out = x - x.mean()

print(torch.isnan(out).any())
```

Output:

```
tensor([[  68.5000,   57.2188,  -15.8438,  ...,  -59.6562,  -81.4375,
           -58.6562],
        [  48.5938, -110.9375,  112.6250,  ..., -133.3750,   12.9766,
           -29.9062],
        [  -9.2812,  -36.3125,  -98.5000,  ...,  139.5000,  -30.3438,
            59.9062],
        ...,
        [ 114.8750,   89.8125,   23.7500,  ..., -150.6250,  -46.2500,
           -32.7500],
        [  42.2500,  -17.6406,  103.8750,  ...,  -55.9062,   38.0312,
           -62.6875],
        [  53.4688,  187.7500,   44.3125,  ...,  122.9375,   40.6875,
          -123.4375]], device='cuda:0', dtype=torch.float16)
tensor(False, device='cuda:0')
```

I use torch.save(tensor, 'x.pt') to save my tensor.

Could you help me figure out why it overflows? I'm not sure whether sharing download links is allowed here.

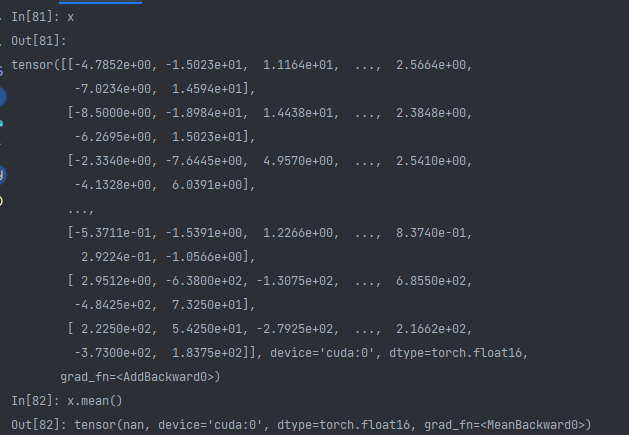

My dumped tensor x:

I think the tensor has dtype=torch.half.
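Rather than guessing the dtype, a dumped tensor can be inspected right after loading it. The sketch below is only an illustration: the data is synthetic (a float16 tensor of the same shape with one Inf injected by hand), and it writes `x.pt` into a temporary directory instead of reusing the real file. It reports the dtype, the shape, the number of Inf/NaN entries, and the range of the finite values.

```python
import os
import tempfile

import torch

# Hypothetical stand-in for the real activation: float16 values in a
# similar range, with one Inf injected so the report has something to find.
x = (torch.randn(5665, 48) * 100).half()
x[0, 0] = float("inf")

path = os.path.join(tempfile.mkdtemp(), "x.pt")
torch.save(x, path)

# Load onto the CPU so the inspection also works on a machine without a GPU.
loaded = torch.load(path, map_location="cpu")
# Keep only finite entries (upcast to float32) to report their range.
finite = loaded[torch.isfinite(loaded)].float()

print(loaded.dtype, tuple(loaded.shape))          # torch.float16 (5665, 48)
print("inf:", torch.isinf(loaded).sum().item())   # inf: 1
print("nan:", torch.isnan(loaded).sum().item())   # nan: 0
print("finite range:", finite.min().item(), finite.max().item())
```

For the real file, only the `torch.load(...)` line and the prints below it are needed.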

Unfortunately, I cannot download the file (the site claims it’s downloading but nothing happens).

I also cannot reproduce the issue using the maximum float16 value:

```python
import torch

x = torch.ones(5665, 48, device='cuda', dtype=torch.float16) * 65504
print(x)

with torch.cuda.amp.autocast():
    out = x - x.mean()

print(torch.isnan(out).any())
```

> tensor(False, device='cuda:0')

x.mean() outputs nan because the tensor contains both negative and positive values, which accumulate to Inf and -Inf; since Inf + (-Inf) is nan, the result is nan. If the values all had the same sign, the worst case would be Inf instead of nan.
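The IEEE-754 rule this explanation relies on can be checked in isolation: a reduction that sees both +Inf and -Inf yields NaN, while one that sees only +Inf yields Inf. The sketch uses float32 on the CPU so it runs anywhere. The same rules apply to float16. It says nothing about whether finite float16 values actually overflow inside x.mean() in the first place.

```python
import torch

# Float32 on the CPU; the Inf/NaN rules are identical for float16.
mixed = torch.tensor([1.0, float("inf"), -3.0, float("-inf")])
pos_only = torch.tensor([1.0, float("inf"), -3.0])

print(mixed.mean())     # tensor(nan): inf + (-inf) is undefined
print(pos_only.mean())  # tensor(inf)
```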

Could you try again with this link? The link above works for me; I can download the file without any problem.

https://cowtransfer.com/s/593ab3ca8d3946

No, that's not the case, as my example shows: I'm already using the maximum float16 value, and the result does not overflow to Inf, because the reduction accumulates in float32 internally.
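To make the float32-accumulation argument concrete, here is a CPU sketch. It performs the upcast explicitly with `.float()` rather than relying on the CUDA kernel's internal accumulation type, so it illustrates the idea rather than reproducing the exact GPU code path. The sum of 5665 × 48 copies of 65504 is far beyond the float16 range. In float32 it is still finite, and dividing by the element count before casting back to float16 gives a finite mean.

```python
import torch

fp16_max = torch.finfo(torch.float16).max  # 65504.0
x = torch.full((5665, 48), fp16_max, dtype=torch.float16)

# The raw sum (~1.78e10) is representable in float32 ...
total = x.float().sum()
# ... but overflows when cast back to float16.
print(total.half())  # tensor(inf, dtype=torch.float16)

# Accumulating in float32 and dividing before the cast stays finite.
mean = x.float().mean().half()
print(mean)          # approximately 65504
```
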

Thanks for the new link; I was able to download the data.

Your input data already contains Inf values, which will create the NaN output in x.mean():

```python
print(torch.isinf(x).any())
```

> tensor(True, device='cuda:0')
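Since the Inf values are already present in the input, the NaN is a symptom of an earlier step. One way to locate that step is to validate tensors before the normalization. The helper below is a hypothetical sketch (`check_finite` is not a PyTorch API). It raises as soon as a tensor contains Inf or NaN and reports the counts and the first offending rows. It assumes a 2-D input like the `[5665, 48]` tensor here.

```python
import torch


def check_finite(x: torch.Tensor, name: str = "x") -> None:
    """Hypothetical helper: raise if a 2-D tensor contains Inf or NaN."""
    bad = ~torch.isfinite(x)
    if bad.any():
        # Row indices that contain at least one non-finite value.
        rows = bad.any(dim=1).nonzero(as_tuple=True)[0]
        raise ValueError(
            f"{name}: {int(torch.isinf(x).sum())} inf, "
            f"{int(torch.isnan(x).sum())} nan, rows {rows.tolist()[:10]}"
        )


ok = torch.ones(4, 3, dtype=torch.float16)
check_finite(ok)  # passes silently

broken = ok.clone()
broken[2, 1] = float("inf")
try:
    check_finite(broken, "broken")
except ValueError as err:
    print(err)  # broken: 1 inf, 0 nan, rows [2]
```

Calling such a check on the outputs of the layers that run before the normalization would narrow down where the float16 values first overflow.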