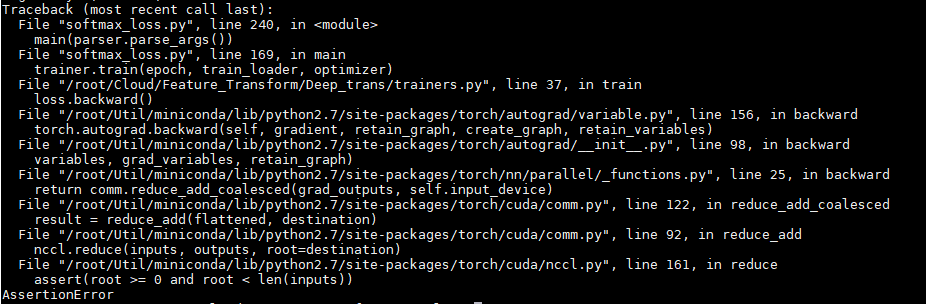

When I use `torch.nn.DataParallel` to train the network on multiple GPUs, an error occurs:

When I set `model = torch.nn.DataParallel(net, device_ids=[0, 1])`, there are no errors. But when I set `device_ids=[2, 3]`, or any other combination that doesn't include device `0`, an error is raised at `loss.backward()`. Has anyone run into this problem? How can I solve it?
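For reference, here is a minimal sketch of the setup described above, assuming the failure comes from device placement. The `torch.nn.DataParallel` documentation states that the wrapped module must have its parameters and buffers on `device_ids[0]` before it is run, and outputs are gathered on `output_device`, which defaults to `device_ids[0]`. So with `device_ids=[2, 3]` the network, and in this sketch also the inputs and targets, are placed on `cuda:2` rather than the default `cuda:0`. The toy `nn.Linear` model, the random batch, and the `train_step` helper are hypothetical stand-ins for the real training code, and the sketch falls back to fewer GPUs or the CPU so it still runs on smaller machines.

```python
import torch
import torch.nn as nn


def train_step(device_ids):
    # The first entry of device_ids is the "primary" device: DataParallel expects
    # the module's parameters/buffers there, and gathers outputs there by default.
    primary = torch.device(f"cuda:{device_ids[0]}") if device_ids else torch.device("cpu")

    net = nn.Linear(10, 2).to(primary)  # hypothetical toy model, moved to device_ids[0]
    # Wrap only when GPUs are in use; on CPU the plain module is used directly.
    model = nn.DataParallel(net, device_ids=device_ids) if device_ids else net
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

    # Hypothetical batch; labels live on the same device as the gathered output.
    inputs = torch.randn(8, 10, device=primary)
    targets = torch.randint(0, 2, (8,), device=primary)

    optimizer.zero_grad()
    loss = criterion(model(inputs), targets)
    loss.backward()
    optimizer.step()
    return loss.item()


# Use GPUs 2 and 3 only if the machine has at least four GPUs; otherwise fall
# back to GPU 0, or to the CPU, so the sketch remains runnable.
if torch.cuda.device_count() >= 4:
    ids = [2, 3]
elif torch.cuda.is_available():
    ids = [0]
else:
    ids = []

print(train_step(ids))
```

A commonly used alternative is to launch the script with `CUDA_VISIBLE_DEVICES=2,3`, so that those two GPUs appear as devices `0` and `1` inside the process and `device_ids=[0, 1]` works unchanged.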