Hello, I’m trying to run the code from this repo: https://github.com/peizhaoli05/EDA_GNN. However, I noticed something strange with torch.nn.Linear. If I use the torch version specified in the repo's requirements file (0.4.1), the code crashes because of a dimension mismatch between the tensor temp_crop (shape 1x1792) and the linear layer crop_fc1 (shape 128x64). When I use a newer torch version (1.10.0), the code runs fine and outputs a tensor with shape 1x64. Is there any justification or documentation for this behaviour?

I cannot reproduce the issue. I get the expected shape mismatch error on both CPU and GPU:

x = torch.randn(1, 1792)

lin = nn.Linear(128, 64)

out = lin(x)

> RuntimeError: mat1 and mat2 shapes cannot be multiplied (1x1792 and 128x64)

lin.cuda()

out = lin(x.cuda())

> RuntimeError: mat1 and mat2 shapes cannot be multiplied (1x1792 and 128x64)

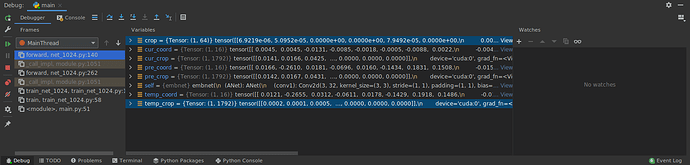

That’s weird. I would also expect that behaviour, but the code in that repo shows the issue when I run it on my machine and also on Colab. Maybe it’s something specific to that repo. Below is the output from my machine, including the tensor shapes printed before and after the nn.Linear.forward() call:

python version : 3.7.12 (default, Sep 10 2021, 00:21:48) [GCC 7.5.0]

torch version : 1.9.0+cu111

cudnn version : 8005

# ------------------------- Options -------------------------

mode : 0 # 0 for debug

description : net_1024

device : "3"

epochs : 40000

gammas : [0.1, 0.1, 0.1]

schedule : [10000, 20000, 30000]

learning_rate : 0.001

optimizer : Adam

entirety : True

model : net_1024

# ----------------------- God Save Me -----------------------

save_model : True

dampening : 0.9

lr_patience : 10

momentum : 0.9

decay : 0.0005

start_epoch : 0

print_freq : 10

checkpoint : 10

n_threads : 2

result : result

# --------------------------- End ---------------------------

-------------------------- initialization --------------------------

initializing sequence 02 ...

initialize 02 done

------------------------------ done --------------------------------

training final

/usr/local/lib/python3.7/dist-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at /pytorch/c10/core/TensorImpl.h:1156.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

before torch.Size([1, 1792])

after torch.Size([1, 64])

Here’s a screenshot of my PyCharm debugger showing the issue.

Your log shows that you are using 1.9.0, which was missing a shape check after some internal kernels were ported to the structured kernel implementation, so the mismatch was not detected. You would need to update PyTorch.

In your initial post you’ve mentioned PyTorch 1.10.0, which should not suffer from this issue anymore.
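If updating is not immediately possible, an explicit guard in front of the layer call is one way to catch the mismatch regardless of the installed release. The following is only a minimal sketch: `check_linear_input` and `demo` are hypothetical helper names, not part of PyTorch or of the repo, and the check relies only on the `in_features` attribute of `nn.Linear` and the `shape` of the input tensor.

```python
def check_linear_input(layer, x):
    """Raise ValueError if the last dimension of x does not match layer.in_features.

    Hypothetical helper (not a PyTorch API): it only reads `layer.in_features`
    and `x.shape`, so it does not depend on the library's internal shape checks.
    """
    expected = layer.in_features
    actual = x.shape[-1]
    if actual != expected:
        raise ValueError(
            f"input has {actual} features in its last dimension, "
            f"but the layer expects {expected}"
        )
    return x


def demo():
    # Imports are local so the helper above can be reused without them.
    import torch
    import torch.nn as nn

    lin = nn.Linear(128, 64)   # same sizes as crop_fc1 in the question
    x = torch.randn(1, 1792)   # same shape as temp_crop in the question
    check_linear_input(lin, x)  # raises ValueError before lin(x) is reached
    return lin(x)
```

In the repo's code, this would mean calling `check_linear_input(crop_fc1, temp_crop)` right before `crop_fc1(temp_crop)`, assuming those are the names used there as described in the question.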

Thank you for your response. I’ve tried using 1.10.0 and the problem is indeed resolved. Sorry for stating the wrong version number. I wish you all a good day.

Thanks for checking and good to hear it’s properly working in the current release!