Hi, I am trying to train a network on video data. I built a DataLoader that yields batches of shape [batch, frame, C, H, W].
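For reference, here is a minimal sketch of a dataset that produces batches of this shape. `RandomVideoDataset` is a hypothetical stand-in that returns random clips and one regression target per frame. The default `DataLoader` collate function stacks the per-clip `[frame, C, H, W]` tensors along a new leading batch dimension.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class RandomVideoDataset(Dataset):
    """Hypothetical stand-in for a real video dataset: returns random clips."""
    def __init__(self, num_clips=8, frames=16, channels=3, height=64, width=64):
        self.num_clips = num_clips
        self.shape = (frames, channels, height, width)

    def __len__(self):
        return self.num_clips

    def __getitem__(self, idx):
        clip = torch.randn(*self.shape)       # [frame, C, H, W]
        targets = torch.randn(self.shape[0])  # one regression target per frame
        return clip, targets

loader = DataLoader(RandomVideoDataset(), batch_size=4, shuffle=True)
clips, targets = next(iter(loader))
print(clips.shape)    # torch.Size([4, 16, 3, 64, 64])
print(targets.shape)  # torch.Size([4, 16])
```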

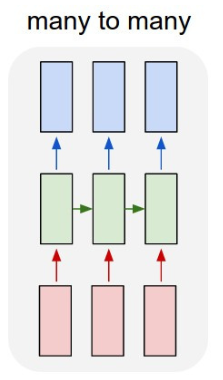

I am trying to build a CNN-LSTM network that regresses a certain value from each frame, so it's basically a many-to-many task, as in the image above.
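One common way to structure this is a single shared 2D CNN that encodes every frame into a feature vector, an LSTM over the resulting sequence, and a linear head that outputs one value per time step. The sketch below is a minimal example under those assumptions. The class name `CNNLSTMRegressor` and all layer sizes are illustrative choices, not taken from the post.

```python
import torch
import torch.nn as nn

class CNNLSTMRegressor(nn.Module):
    """Hypothetical CNN-LSTM: a shared 2D CNN encodes each frame, an LSTM
    models the frame sequence, and a linear head regresses one value per frame."""
    def __init__(self, in_channels=3, feat_dim=64, hidden_dim=128):
        super().__init__()
        self.cnn = nn.Sequential(
            nn.Conv2d(in_channels, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(32, feat_dim, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # -> [N, feat_dim, 1, 1] for any H, W
        )
        self.lstm = nn.LSTM(feat_dim, hidden_dim, batch_first=True)
        self.head = nn.Linear(hidden_dim, 1)

    def forward(self, x):
        b, t, c, h, w = x.shape
        # Fold frames into the batch dimension so Conv2d receives a 4D tensor.
        feats = self.cnn(x.reshape(b * t, c, h, w)).flatten(1)  # [b*t, feat_dim]
        feats = feats.reshape(b, t, -1)                          # [b, t, feat_dim]
        out, _ = self.lstm(feats)                                # [b, t, hidden_dim]
        return self.head(out).squeeze(-1)                        # [b, t]

model = CNNLSTMRegressor()
video = torch.randn(4, 16, 3, 64, 64)
pred = model(video)
print(pred.shape)  # torch.Size([4, 16])
```

Because the output is `[batch, frame]`, it can be compared directly against per-frame targets of the same shape, e.g. with `nn.MSELoss()(pred, targets)`.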

As you know, I can't apply a conventional 2D convolution directly to input of shape [batch, frame, C, H, W]. Do I have to run the model on each frame separately? I have no idea how to implement this. Any suggestions or well-organized links?
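A frequently used alternative to an explicit per-frame loop is to merge the batch and frame dimensions, run `nn.Conv2d` once on the `[batch*frame, C, H, W]` tensor, and then split the dimensions back apart. The minimal sketch below, with arbitrary illustrative sizes, checks that both approaches give the same result with the same shared weights.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
x = torch.randn(2, 5, 3, 32, 32)  # [batch, frame, C, H, W]
b, t, c, h, w = x.shape

# Option 1: loop over frames, applying the same conv to each [batch, C, H, W] slice.
looped = torch.stack([conv(x[:, i]) for i in range(t)], dim=1)  # [b, t, 8, 32, 32]

# Option 2: merge batch and frame dims, run the conv once, then split them back.
folded = conv(x.reshape(b * t, c, h, w)).reshape(b, t, -1, h, w)  # [b, t, 8, 32, 32]

print(folded.shape)                                # torch.Size([2, 5, 8, 32, 32])
print(torch.allclose(looped, folded, atol=1e-5))   # True
```

The folded version does the same work in a single batched call, which is usually simpler and faster than a Python loop over frames.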