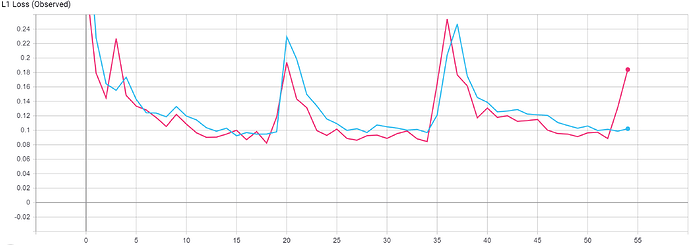

I am trying to optimize a model using the Adam optimizer. The initial learning rate is 0.01, with a weight decay of 0.00001. I reduce the learning rate by a factor of 0.1 after every 10th epoch. The model improves for a few epochs, then the curve suddenly jumps. This pattern repeats several times. What could be the reason for this?
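
To make the schedule concrete, here is a minimal sketch of the learning rate as a function of the epoch. It assumes that "after every 10th epoch" means a plain step decay with 0-indexed epochs, so epochs 0 to 9 use 0.01, epochs 10 to 19 use 0.001, and so on. The function name `step_decay_lr` and its parameter names are made up for illustration and are not taken from any framework.

```python
# Hypothetical helper: a framework-independent sketch of the step-decay schedule.
def step_decay_lr(epoch, initial_lr=0.01, decay_factor=0.1, step_size=10):
    """Return the learning rate in effect during a 0-indexed epoch.

    Assumption: the rate is multiplied by `decay_factor` once per
    completed block of `step_size` epochs.
    """
    return initial_lr * decay_factor ** (epoch // step_size)


# Weight decay from the setup above; it is constant and not part of the schedule.
WEIGHT_DECAY = 1e-5

if __name__ == "__main__":
    # Print the rate on both sides of each decay boundary.
    for epoch in (0, 9, 10, 19, 20, 29, 30):
        print(f"epoch {epoch:2d}: lr = {step_decay_lr(epoch):.0e}")
```

Under this assumption, the rate falls by an order of magnitude at each of epochs 10, 20, and 30, which can be lined up against where the jumps occur in the plot.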