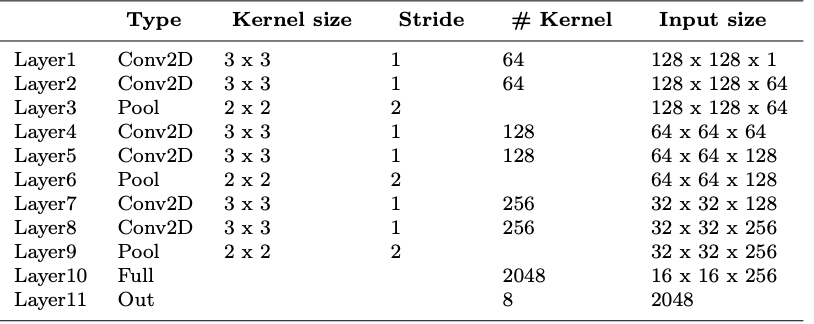

So I’m new to Deep Learning and I’m trying to create the following CNN architecture on PyTorch:

This is what I have for code so far:

```python
import torch.nn as nn


class CNet(nn.Module):
    def __init__(self, num):
        # Input x is (N, 1, 128, 128): a batch of 128x128 single-channel images.
        # num is the number of output classes.
        super(CNet, self).__init__()
        # Each conv's in_channels must equal the previous conv's out_channels.
        # padding=1 keeps H and W unchanged, so only the three pools shrink them:
        # 128 -> 64 -> 32 -> 16.
        self.features = nn.Sequential(
            nn.Conv2d(1, 64, kernel_size=3, stride=1, padding=1),  # use 3 for RGB input
            nn.ELU(inplace=True),
            nn.BatchNorm2d(64, eps=0.001),
            nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),
            nn.ELU(inplace=True),
            nn.BatchNorm2d(64, eps=0.001),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),
            nn.ELU(inplace=True),
            nn.BatchNorm2d(128, eps=0.001),
            nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),
            nn.ELU(inplace=True),
            nn.BatchNorm2d(128, eps=0.001),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),
            nn.ELU(inplace=True),
            nn.BatchNorm2d(256, eps=0.001),
            nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),
            nn.ELU(inplace=True),
            nn.BatchNorm2d(256, eps=0.001),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        self.classifier = nn.Sequential(
            nn.Dropout(0.5),
            nn.Linear(16 * 16 * 256, 2048),
            nn.ELU(inplace=True),
            nn.BatchNorm1d(2048, eps=0.001),  # 1d: the input here is (N, 2048), not a feature map
            nn.Linear(2048, num),
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), 16 * 16 * 256)  # flatten to (N, 16*16*256)
        x = self.classifier(x)
        return x
```
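Whether `16 * 16 * 256` is the right flatten size depends on the convolution padding. A minimal sketch in plain Python using the standard output-size formula for `Conv2d`/`MaxPool2d` (dilation 1, `ceil_mode=False`); the helper names `conv_out` and `feature_map_size` are hypothetical, and the trace assumes a 128x128 input going through the three conv-conv-pool blocks of this architecture:

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a Conv2d or MaxPool2d layer (dilation 1, floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def feature_map_size(size: int, padding: int) -> int:
    """Trace a square input through three (3x3 conv, 3x3 conv, 2x2 pool) blocks."""
    for _ in range(3):
        size = conv_out(size, kernel=3, padding=padding)  # first 3x3 conv
        size = conv_out(size, kernel=3, padding=padding)  # second 3x3 conv
        size = conv_out(size, kernel=2, stride=2)         # 2x2 max pool, stride 2
    return size


print(feature_map_size(128, padding=0))  # 12 -> flatten size would be 12 * 12 * 256
print(feature_map_size(128, padding=1))  # 16 -> flatten size 16 * 16 * 256
```

With the default `padding=0`, each 3x3 conv trims two pixels, so the last feature map is 12x12 and `x.view(x.size(0), 16 * 16 * 256)` would raise a shape error; `padding=1` keeps the 16x16 size that the flatten expects.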

Am I on the right track? I also found a Keras equivalent of the architecture, but I'm confused about how to translate the last part (the fully connected layers) into PyTorch. Here is how it looks in Keras:

```python
model.add(MaxPool2D(pool_size=(2, 2), strides=(2, 2)))
model.add(Flatten())
model.add(Dense(2048))
model.add(keras.layers.ELU())
model.add(BatchNormalization())
model.add(Dropout(0.5))
model.add(Dense(7, activation='softmax'))
model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
```
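One way to translate the layers after the last `MaxPool2D`, as a sketch assuming the convolutional part outputs `(N, 256, 16, 16)` and there are 7 classes as in `Dense(7)`. It keeps the Keras order (ELU, then BatchNorm, then Dropout), which differs from the PyTorch classifier in the question, where `Dropout` comes first. The softmax is left out on purpose: `nn.CrossEntropyLoss` applies log-softmax itself and expects raw logits plus integer class labels, which is the usual PyTorch counterpart of `softmax` + `categorical_crossentropy`. `make_head`, `NUM_CLASSES` and the random tensors are hypothetical placeholders for illustration.

```python
import torch
import torch.nn as nn

NUM_CLASSES = 7  # assumption: matches Dense(7) in the Keras snippet


def make_head(in_features: int, num_classes: int = NUM_CLASSES) -> nn.Sequential:
    """Hypothetical PyTorch counterpart of the Keras layers after the last MaxPool2D."""
    return nn.Sequential(
        nn.Flatten(),                  # Flatten(): (N, C, H, W) -> (N, C*H*W)
        nn.Linear(in_features, 2048),  # Dense(2048)
        nn.ELU(),                      # keras.layers.ELU()
        # BatchNormalization(): Keras defaults are epsilon=1e-3, momentum=0.99;
        # PyTorch defines momentum the other way round, so 0.99 becomes 0.01
        nn.BatchNorm1d(2048, eps=1e-3, momentum=0.01),
        nn.Dropout(0.5),               # Dropout(0.5)
        nn.Linear(2048, num_classes),  # Dense(7) without the softmax: outputs logits
    )


head = make_head(16 * 16 * 256)
criterion = nn.CrossEntropyLoss()                # log-softmax + negative log-likelihood
optimizer = torch.optim.Adam(head.parameters())  # optimizer='adam' (lr 1e-3 by default)

features = torch.randn(8, 256, 16, 16)           # placeholder for the conv output
targets = torch.randint(0, NUM_CLASSES, (8,))    # integer class labels, not one-hot

# One training step
head.train()
logits = head(features)
loss = criterion(logits, targets)
optimizer.zero_grad()
loss.backward()
optimizer.step()

accuracy = (logits.argmax(dim=1) == targets).float().mean()  # metrics=['accuracy']
probs = torch.softmax(logits, dim=1)  # apply softmax only when probabilities are needed
```

Keeping the softmax out of the model avoids applying it twice, since `nn.CrossEntropyLoss` already includes it; at inference time `logits.argmax(dim=1)` gives the same predicted class as the softmax output would.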

Any help or hints in the right direction would be appreciated. Thank you!