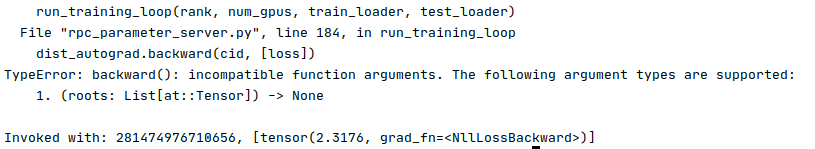

Hi, I am using the code from the "Implementing a Parameter Server Using Distributed RPC Framework" tutorial, which should be straightforward to implement. However, I get a TypeError (see the screenshot) when executing dist_autograd.backward(cid, [list]), and I cannot get rid of it despite many attempts. Is this because the tensors need to be scalars?

Your help will be much appreciated.

Hey @Khairul_Mottakin, it looks like you are using PyTorch v1.4? The cid arg was added in v1.5, IIRC. Could you please try upgrading to the latest release, v1.6?
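For anyone hitting the same TypeError, here is a minimal sketch of a version guard, assuming (as the reply above recalls) that the context id argument exists from v1.5 onward and that v1.4 takes only the list of roots. The helper names `supports_context_id` and `backward_compat` are made up for illustration and are not part of PyTorch; the `dist_autograd` module is passed in so the sketch can be read without a running RPC setup.

```python
import re


def supports_context_id(version: str) -> bool:
    """Return True if the version string looks like PyTorch 1.5 or newer.

    Assumption: dist_autograd.backward accepts a context id from v1.5 on.
    """
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    return (major, minor) >= (1, 5)


def backward_compat(dist_autograd, version, context_id, roots):
    """Call dist_autograd.backward with the argument list the version expects.

    Hypothetical helper: `dist_autograd` would be torch.distributed.autograd
    and `version` would be torch.__version__ in a real training script.
    """
    if supports_context_id(version):
        # v1.5+: the distributed autograd context id comes first.
        dist_autograd.backward(context_id, roots)
    else:
        # v1.4: only the list of root tensors is accepted.
        dist_autograd.backward(roots)
```

In a training loop this would be called as `backward_compat(dist_autograd, torch.__version__, cid, [loss])` inside the `with dist_autograd.context() as cid:` block. Upgrading, as suggested above, is the simpler fix.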

cc @rvarm1

Yes, it works locally after updating the PyTorch version. Many thanks, @mrshenli and @rvarm1, for your contributions and for helping us implement distributed systems in our own ways.

When I started training from a remote worker, it failed with “RuntimeError: […/third_party/gloo/gloo/transport/tcp/pair.cc:769] connect [127.0.1.1]:14769: Connection refused”. The same issue was raised by @Oleg_Ivanov here. I am not sure whether it has been solved. Should I use os.environ['GLOO_SOCKET_IFNAME'] = 'nonexist'?

Can you suggest any tutorial for building such a small cluster (2-3 remote workers with 1 master PS) to implement the Parameter Server using PyTorch RPC?

Thank you very much once again.
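One thing worth noting about the error above: 127.0.1.1 is a loopback address, so a remote worker connecting to it would reach itself rather than the other machine. The sketch below is one possible check to run on each machine, under the assumption that the address being advertised comes from resolving the local hostname; the helper names are made up for illustration.

```python
import ipaddress
import socket


def is_loopback(address: str) -> bool:
    """Return True if the IP address lies in a loopback range (e.g. 127.0.0.0/8)."""
    return ipaddress.ip_address(address).is_loopback


def resolved_hostname_address() -> str:
    """Return the IPv4 address that this machine's hostname resolves to."""
    return socket.gethostbyname(socket.gethostname())


if __name__ == "__main__":
    address = resolved_hostname_address()
    print(f"hostname resolves to {address}, loopback: {is_loopback(address)}")
```

If this reports a loopback address on a machine that other workers need to reach, that may explain the refused connection, though it is not the only possible cause.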

Hey @Khairul_Mottakin, can you try printing out GLOO_SOCKET_IFNAME, MASTER_ADDR, and MASTER_PORT immediately before init_rpc is called on all processes? And what args did you pass to init_rpc?
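A minimal sketch of that debugging step, assuming each process knows its own rank; the helper names `rpc_env_snapshot` and `format_snapshot` are hypothetical and only read the three environment variables named above.

```python
import os

# The three variables requested in the reply above.
RPC_ENV_VARS = ("GLOO_SOCKET_IFNAME", "MASTER_ADDR", "MASTER_PORT")


def rpc_env_snapshot(env=None):
    """Return the RPC-related environment variables; unset ones map to None."""
    env = os.environ if env is None else env
    return {name: env.get(name) for name in RPC_ENV_VARS}


def format_snapshot(snapshot, rank):
    """Render one line per process so output from several workers can be compared."""
    parts = ", ".join(f"{name}={value!r}" for name, value in snapshot.items())
    return f"[rank {rank}] {parts}"


if __name__ == "__main__":
    # Place the print immediately before the rpc.init_rpc(...) call in each process.
    # Rank 0 here is a placeholder for the process's actual rank.
    print(format_snapshot(rpc_env_snapshot(), rank=0))
```

Comparing the printed lines across the master and the workers should show whether any process sees a different MASTER_ADDR or MASTER_PORT, or an unexpected GLOO_SOCKET_IFNAME.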