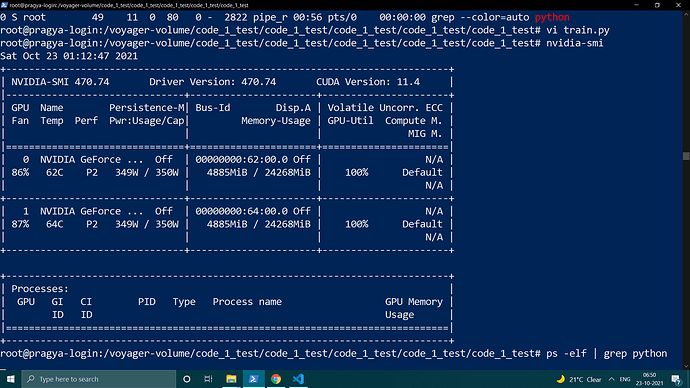

I restarted the training after killing all the PIDs that were occupying GPU memory, but it didn't help.
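
For reference, here is a minimal sketch of one way to check whether any process is still holding GPU memory before restarting. It assumes `nvidia-smi` is on the `PATH`; the function names `parse_compute_apps` and `gpu_processes` are hypothetical helpers, not part of the training code.

```python
import subprocess


def parse_compute_apps(csv_text):
    """Parse 'pid, process_name, used_memory' CSV rows into dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        parts = [p.strip() for p in line.split(",")]
        if len(parts) < 3:
            continue  # skip empty or malformed rows
        pid, used = parts[0], parts[-1]
        name = ",".join(parts[1:-1])
        rows.append({
            "pid": int(pid),
            "name": name,
            # The memory column can be non-numeric on some setups
            "used_mib": int(used) if used.isdigit() else None,
        })
    return rows


def gpu_processes():
    """Ask nvidia-smi which compute processes currently hold GPU memory."""
    out = subprocess.check_output(
        ["nvidia-smi",
         "--query-compute-apps=pid,process_name,used_memory",
         "--format=csv,noheader,nounits"],
        universal_newlines=True,
    )
    return parse_compute_apps(out)
```

Calling `gpu_processes()` on the training machine should return one entry per process that still holds GPU memory; an empty list would suggest nothing stale is left.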

Experiment dir : search-EXP-ab1–20211023-041336

10/23 04:13:36 AM gpu device = 0,1

10/23 04:13:36 AM args = Namespace(arch_learning_rate=0.0003, arch_weight_decay=0.001, batch_size=8, cutout=False, cutout_length=16, data='/voyager-volume/code_1_test/Original_images', drop_path_prob=0.3, epochs=50, gpu='0,1', grad_clip=5, init_channels=16, is_parallel=1, layers=8, learning_rate=0.025, learning_rate_feature_extractor=0.025, learning_rate_head_g=0.025, learning_rate_min=0.001, model_path='saved_models', momentum=0.9, num_classes=31, report_freq=50, save='search-EXP-ab1–20211023-041336', seed=2, source='amazon', target='dslr', train_portion=0.5, unrolled=False, weight_decay=0.0003, weight_decay_fe=0.0003, weight_decay_hg=0.0003)

10/23 04:55:44 AM param size = 0.297522MB

10/23 04:55:44 AM epoch 0 lr 2.500000e-02

10/23 04:55:44 AM genotype = Genotype(normal=[('max_pool_3x3', 1), ('skip_connect', 0), ('max_pool_3x3', 0), ('skip_connect', 2), ('dil_conv_5x5', 2), ('dil_conv_5x5', 0), ('sep_conv_3x3', 1), ('dil_conv_3x3', 4)], normal_concat=range(2, 6), reduce=[('avg_pool_3x3', 1), ('sep_conv_3x3', 0), ('dil_conv_3x3', 1), ('dil_conv_3x3', 0), ('skip_connect', 2), ('max_pool_3x3', 1), ('max_pool_3x3', 3), ('dil_conv_3x3', 2)], reduce_concat=range(2, 6))

tensor([[0.1250, 0.1250, 0.1247, 0.1251, 0.1250, 0.1251, 0.1250, 0.1251],

[0.1250, 0.1252, 0.1249, 0.1250, 0.1251, 0.1251, 0.1248, 0.1249],

[0.1249, 0.1253, 0.1250, 0.1249, 0.1249, 0.1250, 0.1250, 0.1249],

[0.1251, 0.1249, 0.1250, 0.1251, 0.1250, 0.1249, 0.1250, 0.1251],

[0.1249, 0.1247, 0.1250, 0.1252, 0.1249, 0.1251, 0.1251, 0.1250],

[0.1249, 0.1250, 0.1250, 0.1251, 0.1250, 0.1250, 0.1248, 0.1253],

[0.1251, 0.1250, 0.1250, 0.1251, 0.1248, 0.1251, 0.1250, 0.1249],

[0.1252, 0.1250, 0.1249, 0.1249, 0.1250, 0.1249, 0.1251, 0.1251],

[0.1250, 0.1250, 0.1251, 0.1251, 0.1251, 0.1250, 0.1249, 0.1248],

[0.1250, 0.1252, 0.1249, 0.1250, 0.1251, 0.1249, 0.1248, 0.1251],

[0.1249, 0.1249, 0.1250, 0.1250, 0.1252, 0.1250, 0.1250, 0.1250],

[0.1251, 0.1249, 0.1249, 0.1250, 0.1249, 0.1252, 0.1251, 0.1251],

[0.1250, 0.1251, 0.1251, 0.1250, 0.1250, 0.1251, 0.1249, 0.1249],

[0.1251, 0.1247, 0.1249, 0.1251, 0.1252, 0.1249, 0.1253, 0.1249]],

device='cuda:0', grad_fn=)

tensor([[0.1252, 0.1251, 0.1250, 0.1249, 0.1251, 0.1249, 0.1249, 0.1250],

[0.1249, 0.1248, 0.1251, 0.1250, 0.1250, 0.1251, 0.1251, 0.1250],

[0.1251, 0.1249, 0.1249, 0.1251, 0.1249, 0.1250, 0.1251, 0.1250],

[0.1251, 0.1249, 0.1249, 0.1250, 0.1250, 0.1249, 0.1251, 0.1251],

[0.1249, 0.1251, 0.1248, 0.1250, 0.1250, 0.1250, 0.1251, 0.1251],

[0.1251, 0.1248, 0.1251, 0.1251, 0.1250, 0.1249, 0.1250, 0.1250],

[0.1250, 0.1251, 0.1250, 0.1251, 0.1249, 0.1249, 0.1250, 0.1249],

[0.1249, 0.1251, 0.1251, 0.1252, 0.1249, 0.1251, 0.1248, 0.1248],

[0.1252, 0.1250, 0.1250, 0.1251, 0.1247, 0.1249, 0.1252, 0.1250],

[0.1251, 0.1247, 0.1250, 0.1251, 0.1249, 0.1251, 0.1250, 0.1252],

[0.1250, 0.1249, 0.1249, 0.1251, 0.1250, 0.1252, 0.1250, 0.1249],

[0.1249, 0.1251, 0.1249, 0.1250, 0.1251, 0.1250, 0.1251, 0.1249],

[0.1250, 0.1252, 0.1247, 0.1247, 0.1249, 0.1252, 0.1250, 0.1251],

[0.1250, 0.1252, 0.1250, 0.1251, 0.1250, 0.1249, 0.1248, 0.1250]],

device='cuda:0', grad_fn=)

/opt/conda/lib/python3.6/site-packages/torch/tensor.py:292: UserWarning: non-inplace resize_as is deprecated

warnings.warn("non-inplace resize_as is deprecated")

Traceback (most recent call last):

File "train.py", line 363, in <module>

main()

File "train.py", line 181, in main

train_acc, train_obj = train(source_train_loader,source_val_loader,target_train_loader,target_val_loader, criterion,optimizer,optimizer_fe, optimizer_hg,lr,feature_extractor,head_g,model,architect,args.batch_size)

File "train.py", line 219, in train

_,domain_logits=model(input_img_source)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "/voyager-volume/code_1_test/code_1_test/code_1_test/code_1_test/code_1_test/model_search.py", line 159, in forward

s0, s1 = s1, cell(s0, s1, weights,weights2)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "/voyager-volume/code_1_test/code_1_test/code_1_test/code_1_test/code_1_test/model_search.py", line 85, in forward

s = sum(weights2[offset+j]*self._ops[offset+j](h, weights[offset+j]) for j, h in enumerate(states))

File "/voyager-volume/code_1_test/code_1_test/code_1_test/code_1_test/code_1_test/model_search.py", line 85, in <genexpr>

s = sum(weights2[offset+j]*self._ops[offset+j](h, weights[offset+j]) for j, h in enumerate(states))

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "/voyager-volume/code_1_test/code_1_test/code_1_test/code_1_test/code_1_test/model_search.py", line 44, in forward

temp1 = sum(w * op(xtemp) for w, op in zip(weights, self._ops))

RuntimeError: CUDA out of memory. Tried to allocate 2.00 MiB (GPU 0; 23.70 GiB total capacity; 22.83 GiB already allocated; 2.56 MiB free; 523.00 KiB cached)
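
To read the numbers in that message more easily, here is a small sketch that pulls the sizes out of the error text and computes how much of GPU 0 is counted as "already allocated", which as far as I understand is memory held by the training process's own tensors. The helper name `parse_oom` is hypothetical, and the patterns are only assumed to match the message format shown above.

```python
import re

# Conversion factors to MiB
_UNITS = {"KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0}


def parse_oom(message):
    """Return the sizes found in a CUDA OOM message, in MiB."""
    fields = {
        "requested": r"Tried to allocate ([\d.]+) (KiB|MiB|GiB)",
        "total": r"([\d.]+) (KiB|MiB|GiB) total capacity",
        "allocated": r"([\d.]+) (KiB|MiB|GiB) already allocated",
        "free": r"([\d.]+) (KiB|MiB|GiB) free",
        "cached": r"([\d.]+) (KiB|MiB|GiB) cached",
    }
    sizes = {}
    for name, pattern in fields.items():
        match = re.search(pattern, message)
        if match:
            sizes[name] = float(match.group(1)) * _UNITS[match.group(2)]
    return sizes


msg = ("RuntimeError: CUDA out of memory. Tried to allocate 2.00 MiB "
       "(GPU 0; 23.70 GiB total capacity; 22.83 GiB already allocated; "
       "2.56 MiB free; 523.00 KiB cached)")
sizes = parse_oom(msg)
share = sizes["allocated"] / sizes["total"]
print("{:.1%} of GPU 0 is already allocated".format(share))
```

If that reading is right, almost the whole card is used by this run itself rather than by leftover processes, which would be consistent with killing the other PIDs not helping.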

I never had this kind of problem on my Ubuntu PC.