Hi, I’m trying to run a big model (resnext-wsl 32x48d) on 8 GPUs with 12 GB each, but I’m getting a memory error: “RuntimeError: CUDA out of memory. Tried to allocate 42.00 MiB (GPU 0; 11.75 GiB total capacity; 10.28 GiB already allocated; 2.94 MiB free; 334.31 MiB cached)”

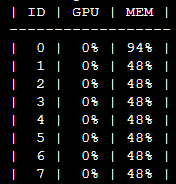

It seems that the data-parallel is splitting the memory in un even way, as can be shown in the memory layout printed a moment before crashing (before optimizer.step):

As can be seen, GPU0 is overloaded while the rest still have some space.
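
For anyone who wants to reproduce this kind of per-GPU readout, here is a minimal sketch of how it could be printed from inside the training loop. It is not necessarily the exact code behind the screenshot above; `format_memory_report` and `collect_cuda_stats` are hypothetical helper names, and the sketch only assumes the standard `torch.cuda.memory_allocated` and `torch.cuda.get_device_properties` calls.

```python
def format_memory_report(stats_mib):
    """Format per-GPU usage; stats_mib is a list of (allocated_mib, total_mib)."""
    lines = []
    for idx, (allocated, total) in enumerate(stats_mib):
        percent = 100.0 * allocated / total
        lines.append(
            f"GPU {idx}: {allocated:8.1f} MiB allocated / "
            f"{total:8.1f} MiB total ({percent:5.1f}%)"
        )
    return "\n".join(lines)


def collect_cuda_stats():
    """Return (allocated_mib, total_mib) for every visible CUDA device."""
    # Imported lazily so the formatting helper can be used without PyTorch.
    import torch

    mib = 1024 ** 2
    stats = []
    for idx in range(torch.cuda.device_count()):
        allocated = torch.cuda.memory_allocated(idx) / mib
        total = torch.cuda.get_device_properties(idx).total_memory / mib
        stats.append((allocated, total))
    return stats


# Hypothetical usage, e.g. right before optimizer.step():
#     print(format_memory_report(collect_cuda_stats()))
```

Note that `torch.cuda.memory_allocated` only counts tensors allocated by the current process, so the numbers can be lower than what `nvidia-smi` reports (which also includes cached blocks and the CUDA context).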

Is there any way to solve this problem? Is it possible that this model cannot be trained on GPUs with “only” 12 GB?

Thanks.