Hi,

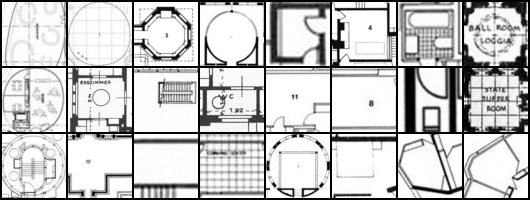

I’m trying to get close to an object localization like Efficient Object Localization Using Convolutional Networks but with an un-labeled dataset. The features are somewhat simple:

So I was thinking I could hand-engineer a few example kernels (or use a GAN to generate generalized versions of them) and use them as the basis for feature extraction, but I’m not sure how to get the location of the maximum response to each kernel. I have a few ideas:
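One way to get the locations of the maximum responses, sketched under the assumption that the hand-made kernels are stacked into a single `(K, C, kh, kw)` weight tensor: run them through `F.conv2d` as fixed filters, then keep only the pixels that equal the maximum over their neighbourhood (max-pool based non-maximum suppression). The helper `peak_locations` and its `window` and `threshold` parameters are hypothetical, and odd kernel sizes are assumed so the response map keeps the input's size.

```python
import torch
import torch.nn.functional as F

def peak_locations(image, kernels, window=5, threshold=0.0):
    """Return (kernel_idx, row, col) for every local maximum of each kernel's response.

    image:   (1, C, H, W) tensor
    kernels: (K, C, kh, kw) tensor of hand-made templates (odd kh, kw assumed)
    """
    kh, kw = kernels.shape[-2:]
    # Fixed-weight convolution; padding keeps the response at H x W
    response = F.conv2d(image, kernels, padding=(kh // 2, kw // 2))  # (1, K, H, W)
    # A pixel is a peak if it equals the max of its window x window neighbourhood
    pooled = F.max_pool2d(response, window, stride=1, padding=window // 2)
    is_peak = (response == pooled) & (response > threshold)
    # nonzero() gives (batch, kernel, row, col); drop the batch column
    return is_peak.nonzero()[:, 1:]

# Toy usage: a bright 3x3 blob centred at (4, 4) in a 9x9 image
image = torch.zeros(1, 1, 9, 9)
image[0, 0, 3:6, 3:6] = 1.0
kernels = torch.ones(1, 1, 3, 3)  # hypothetical "blob" template
peaks = peak_locations(image, kernels)
```

The `window` size controls how close two candidate points may be; flat plateaus (exact ties) would all be reported, so a small amount of noise or blur can help in practice.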

Is it possible to have `nn.Conv2d` return the convolution windows as a tensor? I was thinking I could take the maximally responding windows and place a candidate point at the location of the maximum value within each window.
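`nn.Conv2d` itself only returns the output map, but `torch.nn.functional.unfold` (im2col) returns every sliding window as a column, and the convolution is then a matrix product with the flattened kernel. A minimal sketch assuming a single-channel image, stride 1 and no padding; `windows_and_peaks` and `top_k` are hypothetical names:

```python
import torch
import torch.nn.functional as F

def windows_and_peaks(image, kernel, top_k=3):
    """image: (1, 1, H, W); kernel: (1, 1, kh, kw). Stride 1, no padding."""
    _, _, H, W = image.shape
    kh, kw = kernel.shape[-2:]
    # Each column is one flattened kh*kw window: shape (1, kh*kw, L)
    cols = F.unfold(image, kernel_size=(kh, kw))
    n_cols = W - kw + 1  # window positions per row
    # Kernel response per window, equal to the flattened conv2d output: shape (L,)
    response = (kernel.reshape(1, -1) @ cols[0]).squeeze(0)
    points = []
    for idx in response.topk(top_k).indices.tolist():
        top, left = divmod(idx, n_cols)         # window's top-left corner
        flat = cols[0, :, idx].argmax().item()  # brightest pixel inside the window
        dr, dc = divmod(flat, kw)
        points.append((top + dr, left + dc))    # absolute image coordinates
    return cols, response, points

image = torch.zeros(1, 1, 6, 6)
image[0, 0, 2, 3] = 5.0
kernel = torch.ones(1, 1, 3, 3)
cols, response, points = windows_and_peaks(image, kernel, top_k=1)
```

`topk` breaks ties between overlapping windows arbitrarily, so several windows may map to the same point. `Tensor.unfold` (e.g. `image.unfold(2, kh, 1).unfold(3, kw, 1)`) gives the same windows as a view instead of a copy.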

Is it possible to differentiate a whole image with autograd? My other thought was that the curvature map of the convolution output would be ~0 around the maximum responses, especially if the output is clipped to some range (so the peaks flatten into plateaus).
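Autograd computes derivatives of an output with respect to tensor elements (e.g. d loss / d pixel), not derivatives across pixel positions, so for a gradient or curvature map of the response the usual route is finite differences. A sketch assuming a 2-D `(H, W)` response map, using `torch.gradient` for the first derivatives and a fixed 3x3 Laplacian stencil through `F.conv2d` for curvature; `derivative_maps` is a hypothetical name:

```python
import torch
import torch.nn.functional as F

def derivative_maps(response):
    """response: (H, W) tensor. Returns d/drow, d/dcol and a discrete Laplacian."""
    # Central finite differences along rows (dim 0) and columns (dim 1)
    d_row, d_col = torch.gradient(response)
    # Discrete Laplacian (sum of second derivatives) as a fixed 3x3 stencil
    lap_kernel = torch.tensor([[0., 1., 0.],
                               [1., -4., 1.],
                               [0., 1., 0.]]).reshape(1, 1, 3, 3)
    laplacian = F.conv2d(response[None, None], lap_kernel, padding=1)[0, 0]
    return d_row, d_col, laplacian

# Toy smooth peak at (3, 3)
ii, jj = torch.meshgrid(torch.arange(7.), torch.arange(7.), indexing="ij")
response = -((ii - 3) ** 2 + (jj - 3) ** 2)
d_row, d_col, lap = derivative_maps(response)
```

On this toy peak both first derivatives vanish at (3, 3) while the interior Laplacian is -4, i.e. an unclipped smooth maximum has negative curvature; the curvature only approaches 0 where clipping flattens the peak into a plateau.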

Is there an efficient way to stack the sliding windows of an image along the batch dimension? I have a classifier trained on these features, so in theory I could just rearrange the image into windows and take the most confident classifier outputs as the candidate location points. I tried using `torch.chunk()` for this, but it is really annoying.
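`F.unfold` can also stand in for `torch.chunk()` here: it extracts all sliding windows in one call, and a transpose plus reshape turns them into an `(L, C, size, size)` batch that a patch classifier can score in one forward pass. A sketch assuming square windows, no padding, and a classifier mapping `(N, C, size, size)` to `(N, num_classes)` logits; `windows_as_batch`, `best_window_centres`, the `target` class index and `toy_classifier` are hypothetical, the last being only a stand-in for the trained classifier:

```python
import torch
import torch.nn.functional as F

def windows_as_batch(image, size, stride=1):
    """(1, C, H, W) -> (L, C, size, size), one sliding window per batch entry."""
    C = image.shape[1]
    cols = F.unfold(image, kernel_size=size, stride=stride)  # (1, C*size*size, L)
    return cols[0].transpose(0, 1).reshape(-1, C, size, size)

def best_window_centres(image, classifier, size, stride=1, target=1, top_k=1):
    """Score every window with `classifier`; return centres of the top_k windows."""
    W = image.shape[-1]
    n_cols = (W - size) // stride + 1  # window positions per row
    patches = windows_as_batch(image, size, stride)
    with torch.no_grad():
        conf = classifier(patches).softmax(dim=1)[:, target]  # (L,)
    centres = []
    for idx in conf.topk(top_k).indices.tolist():
        r, c = divmod(idx, n_cols)
        centres.append((r * stride + size // 2, c * stride + size // 2))
    return centres

def toy_classifier(x):
    # Stand-in: class-1 logit is the patch's total brightness
    return torch.stack([torch.zeros(x.shape[0]), x.sum(dim=(1, 2, 3))], dim=1)

image = torch.zeros(1, 1, 8, 8)
image[0, 0, 4:7, 1:4] = 1.0  # bright blob centred at (5, 2)
centres = best_window_centres(image, toy_classifier, size=3)
```

`F.unfold` copies every window, so for large images a larger `stride` or scoring the batch in pieces may be needed to keep memory in check.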

Thanks for any help.