Hi all,

I am new to PyTorch. When I select a GPU via `os.environ["CUDA_VISIBLE_DEVICES"]`, I run into a strange and very annoying problem when running this code:

```python
import os

# Must be set before torch is imported so that only GPU 1 is visible
os.environ["CUDA_VISIBLE_DEVICES"] = "1"

import torch

arr = [[2, 573, 2119, 1, 1, 0, 1441, 1, 2119, 2, 0, 0, 1, 0, 0]]
b = torch.tensor(arr, dtype=torch.int64, device='cuda')
print(b)
assert b.sum().item() > 0
```

I expect `b` to be `tensor([[ 2, 573, 2119, 1, 1, 0, 1441, 1, 2119, 2, 0, 0, 1, 0, 0]], device='cuda:0')`. But sometimes `b` comes out as `tensor([[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]], device='cuda:0')` instead, and the assertion fails.
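For anyone trying to reproduce this, a check along the following lines might help narrow it down. It is only a minimal sketch: the helper name `roundtrip_matches` is my own and not part of PyTorch, and the repeat count of 100 is arbitrary. It builds the same tensor on the CPU and on the target device, copies the device tensor back, and compares the two. It also prints the CUDA version PyTorch was built with, which can differ from the toolkit version installed on the system.

```python
import torch


def roundtrip_matches(values, device):
    """Return True if a tensor created on `device` equals the same tensor created on the CPU."""
    # Reference copy that never touches the GPU
    expected = torch.tensor(values, dtype=torch.int64)
    # Same data, created directly on the target device
    actual = torch.tensor(values, dtype=torch.int64, device=device)
    if actual.is_cuda:
        # Wait for pending GPU work before copying the result back
        torch.cuda.synchronize()
    return torch.equal(actual.cpu(), expected)


if __name__ == "__main__":
    arr = [[2, 573, 2119, 1, 1, 0, 1441, 1, 2119, 2, 0, 0, 1, 0, 0]]
    # Fall back to the CPU so the script still runs on a machine without CUDA
    device = "cuda" if torch.cuda.is_available() else "cpu"

    print("PyTorch:", torch.__version__, "| built with CUDA:", torch.version.cuda)
    if device == "cuda":
        print("Visible GPU 0:", torch.cuda.get_device_name(0))

    # The problem is intermittent, so repeat the check several times
    failures = sum(not roundtrip_matches(arr, device) for _ in range(100))
    print(f"{failures} mismatches out of 100 on {device}")
```

If the mismatch count is nonzero only on `cuda`, that would point at the GPU side (driver, build, or hardware) and not at the Python code itself.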

My CUDA version: Cuda compilation tools, release 8.0, V8.0.44

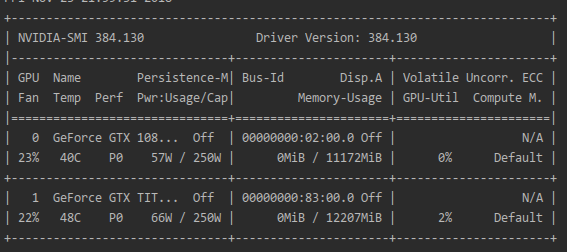

This is my GPU detail:

Thank you so much for your help!!