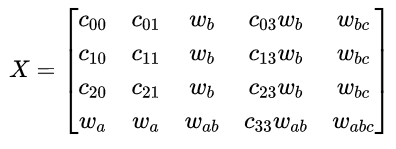

I’m trying to build a Hidden Markov Model in PyTorch where I specify a transition matrix as a set of weights I’d like to update. The tensor looks something like:

I’m trying to solve for the w’s in this tensor while holding the c’s fixed (that is, they must not be updated during optimization). The constraint I am solving under is that each row of the tensor sums to 1 (`X.sum(axis=1) = [1, 1, 1, 1]`).
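Written out row by row, the constraint shows why this looks like a system of linear equations. In the sketch below, $\mathcal{F}_i$ (the set of fixed entries in row $i$) and $n_{ik}$ (how many times weight $w_k$ appears in row $i$) are notation introduced here for illustration, not from the original post:

$$
\sum_{j} X_{ij} \;=\; \sum_{j \in \mathcal{F}_i} c_{ij} \;+\; \sum_{k} n_{ik}\, w_k \;=\; 1, \qquad i = 1, \ldots, 4,
$$

that is, $N\mathbf{w} = \mathbf{1} - \mathbf{c}$ with $N_{ik} = n_{ik}$ and $c_i = \sum_{j \in \mathcal{F}_i} c_{ij}$. A repeated weight such as $w_b$ simply contributes to several rows of $N$. Whether these equations pin the $w$'s down uniquely depends on the rank of $N$; if the rank is smaller than the number of distinct $w$'s, the row-sum constraint alone leaves some freedom.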

I guess two things that aren’t obvious to me are: (1) how to fix specific values of a tensor, so that they are not updated during optimization and don’t factor into the error function; and (2) how to represent certain weights symbolically (notice that w_b is repeated at multiple entries!). At first I confused myself into thinking this was a system of linear equations I could solve directly, but now I think I may need a deep learning framework to approximate these values. Does PyTorch have this flexibility?
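One way to get both behaviours in PyTorch is sketched below, under loudly stated assumptions: the 4x4 layout, the c values, and the names `C`, `IDX` and `TiedTransition` are all hypothetical (the real matrix is only in the image). The fixed c's live in a registered buffer, which is not a parameter, so the optimizer never touches them. The distinct w's live in one small `nn.Parameter`, and an index tensor maps each matrix entry to a weight, so every occurrence of w_b reads the same value and its gradients accumulate into that single entry. The squared row-sum penalty is only a stand-in objective; in an actual HMM you would more likely optimize a data likelihood and treat the row sums as a constraint.

```python
import torch
import torch.nn as nn

# Hypothetical layout for illustration only; the real matrix is in the image.
# C holds the fixed c's; entries that hold a free weight are 0 here and get replaced.
C = torch.tensor([
    [0.5, 0.0, 0.0, 0.1],
    [0.0, 0.4, 0.0, 0.1],
    [0.3, 0.0, 0.3, 0.0],
    [0.0, 0.2, 0.0, 0.3],
])
# IDX says which free weight fills each entry: -1 = fixed c, 0 = w_a, 1 = w_b, 2 = w_c.
# w_b (index 1) appears in every row, so all of those entries share one parameter.
IDX = torch.tensor([
    [-1,  0,  1, -1],
    [ 1, -1,  2, -1],
    [-1,  1, -1,  0],
    [ 2, -1,  1, -1],
])


class TiedTransition(nn.Module):
    def __init__(self, c, idx, n_weights):
        super().__init__()
        # Buffers follow .to(device) but are not parameters, so no optimizer updates them.
        self.register_buffer("c", c)
        self.register_buffer("idx", idx)
        # The only trainable tensor: one entry per distinct w.
        self.w = nn.Parameter(torch.full((n_weights,), 0.1))

    def forward(self):
        # Look up w_k for every entry (clamp -1 to 0 so the indexing is valid),
        # then keep the fixed c wherever idx == -1.
        tied = self.w[self.idx.clamp(min=0)]
        return torch.where(self.idx >= 0, tied, self.c)


model = TiedTransition(C, IDX, n_weights=3)
opt = torch.optim.SGD(model.parameters(), lr=0.1)

for step in range(500):
    opt.zero_grad()
    X = model()
    # Stand-in objective: penalize deviation of each row sum from 1.
    loss = ((X.sum(dim=1) - 1.0) ** 2).sum()
    loss.backward()  # gradients from every w_b entry accumulate into model.w[1]
    opt.step()

with torch.no_grad():
    print(model())
    print(model().sum(dim=1))
```

In this made-up layout the four row-sum equations reduce to only two independent ones (w_a + w_b = 0.4 and w_b + w_c = 0.5) for three unknowns, so many w's satisfy the constraint and gradient descent just lands on one of them. That matches the linear-system intuition: the row sums alone may not determine the w's, and something else (in an HMM, the observed data) has to pick among the solutions. Nothing here enforces w ≥ 0 either; that would need an extra penalty, a clamp after each step, or a reparameterization.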