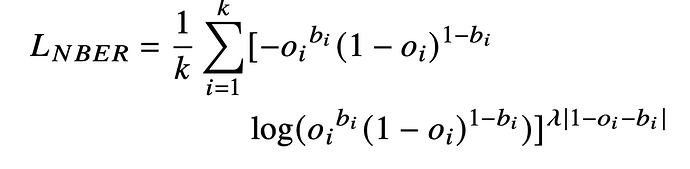

I have been trying to apply a new custom loss function (shown above) with an algorithm that already works with `BCEWithLogitsLoss`.

In the formula, $o_i$ represents the sigmoid of the decoded values and $b_i$ represents the correct labels.

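    import torch

    eps = 1e-10

    def NBER_Loss(decoded_value, correct_result, custom_lambda=1):
        # Map logits to probabilities, then clamp to [eps, 1 - eps].
        decoded_value = decoded_value.sigmoid().clamp(eps, 1 - eps)
        # o^b * (1 - o)^(1 - b), clamped to [eps, 1 - eps].
        loss1 = (torch.pow(decoded_value, correct_result)
                 * torch.pow(1 - decoded_value, 1 - correct_result)).clamp(eps, 1 - eps)
        # Entropy-like base raised to an exponent that grows with the prediction error.
        loss = torch.mean(torch.pow(-loss1 * torch.log2(loss1).clamp(-100.0, 0.0),
                                    custom_lambda * torch.abs(1 - decoded_value - correct_result) + eps))
        return loss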
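
Read off from the code above rather than from the image, the function appears to compute the following. The names $p_i$ (for `loss1`), $N$ (the number of elements averaged over), $\lambda$ (for `custom_lambda`) and $\epsilon$ (for `eps`) are labels assumed here for illustration, and the clamps are omitted:

$$
p_i = o_i^{\,b_i}\,(1 - o_i)^{\,1 - b_i},
\qquad
\mathcal{L} = \frac{1}{N} \sum_{i=1}^{N} \bigl(-p_i \log_2 p_i\bigr)^{\lambda\,\lvert 1 - o_i - b_i \rvert + \epsilon}
$$

Written this way, the base $-p_i \log_2 p_i$ goes to zero as $p_i \to 1$, while the exponent goes to $\epsilon$ when the labels are 0 or 1.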

The output is as follows:

    decoded pre sigmoid tensor([[ -55.0442, 55.0442, -55.0442, ..., -3.9323, -9.0391, 3.9323],
            [ 129.7956, 69.6005, -62.5831, ..., -0.9936, -0.9936, -6.7401],
            [-111.1677, 111.1677, 77.0329, ..., -2.4284, -9.3211, 2.8110],
            ...,
            [ 190.0725, 139.7126, 111.0150, ..., -29.0303, 28.2014, 27.1241],
            [ 128.6071, 90.6857, -82.5502, ..., -6.8028, -4.6547, -4.6547],
            [-150.4684, -150.4684, 150.4684, ..., -5.2145, -8.1823, 5.2145]], device='cuda:0', grad_fn=<CopySlices>)

    decoded post sigmoid tensor([[1.0000e-10, 1.0000e+00, 1.0000e-10, ..., 1.9222e-02, 1.1866e-04, 9.8078e-01],
            [1.0000e+00, 1.0000e+00, 1.0000e-10, ..., 2.7021e-01, 2.7021e-01, 1.1812e-03],
            [1.0000e-10, 1.0000e+00, 1.0000e+00, ..., 8.1032e-02, 8.9505e-05, 9.4327e-01],
            ...,
            [1.0000e+00, 1.0000e+00, 1.0000e+00, ..., 1.0000e-10, 1.0000e+00, 1.0000e+00],
            [1.0000e+00, 1.0000e+00, 1.0000e-10, ..., 1.1094e-03, 9.4274e-03, 9.4274e-03],
            [1.0000e-10, 1.0000e-10, 1.0000e+00, ..., 5.4077e-03, 2.7949e-04, 9.9459e-01]], device='cuda:0', grad_fn=<ClampBackward1>)

    Loss0 tensor([[1.0000e+00, 1.0000e-10, 1.0000e+00, ..., 9.8078e-01, 1.1866e-04, 1.9222e-02],
            [1.0000e+00, 1.0000e+00, 1.0000e+00, ..., 2.7021e-01, 2.7021e-01, 9.9882e-01],
            [1.0000e+00, 1.0000e+00, 1.0000e+00, ..., 8.1032e-02, 9.9991e-01, 5.6732e-02],
            ...,
            [1.0000e+00, 1.0000e+00, 1.0000e+00, ..., 1.0000e+00, 1.0000e+00, 1.0000e+00],
            [1.0000e-10, 1.0000e-10, 1.0000e-10, ..., 9.9889e-01, 9.9057e-01, 9.4274e-03],
            [1.0000e+00, 1.0000e+00, 1.0000e+00, ..., 9.9459e-01, 9.9972e-01, 9.9459e-01]], device='cuda:0', grad_fn=<ClampBackward1>)

    Loss tensor(0.2589, device='cuda:0', grad_fn=<MeanBackward0>)

    [W python_anomaly_mode.cpp:104] Warning: Error detected in MulBackward0. Traceback of forward call that caused the error:
      File "xxx.py", line 835, in <module>
        loss = NBER_Loss(decoded_bits, 0.5 *
      File "xxx.py", line 780, in NBER_Loss
        loss = torch.mean(torch.pow(-loss1*torch.log2(loss1).clamp(-100.0, 0.0),
     (function _print_stack)
    Traceback (most recent call last):
      File "xxx.py", line 837, in <module>
        loss.backward()
      File "/home/xxx/.local/lib/python3.8/site-packages/torch/_tensor.py", line 255, in backward
        torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)
      File "/home/xxx/.local/lib/python3.8/site-packages/torch/autograd/__init__.py", line 147, in backward
        Variable._execution_engine.run_backward(
    RuntimeError: Function 'MulBackward0' returned nan values in its 0th output.
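
A minimal sketch that may help narrow this down, assuming the tensors are float32. It checks two things that could be related to the failure: whether the upper clamp bound `1 - eps` is still distinguishable from 1.0, and what gradient comes back when the base of `torch.pow` is exactly zero. The single-element tensors `p` and `exponent` are hypothetical stand-ins for `loss1` and the exponent term, not values taken from the run above.

```python
import torch

eps = 1e-10

# In float32, 1 - 1e-10 is closer to 1.0 than to the next representable value below it,
# so the upper clamp bound may not keep values away from exactly 1.0.
upper = torch.tensor(1 - eps, dtype=torch.float32)
upper_is_one = bool(upper == 1.0)

# Hypothetical stand-in for loss1 at a saturated, correct prediction (value exactly 1.0).
p = torch.tensor([1.0], requires_grad=True)

# Same base expression as in NBER_Loss: log2(1) = 0, so the base is zero.
base = -p * torch.log2(p).clamp(-100.0, 0.0)

# Hypothetical exponent below 1, standing in for lambda * |1 - o - b| + eps.
exponent = torch.tensor([0.5])

out = torch.pow(base, exponent).mean()
out.backward()

# Check whether the gradient that reaches p is NaN.
grad_is_nan = bool(torch.isnan(p.grad).all())
print("upper bound equals 1.0:", upper_is_one)
print("gradient is NaN:", grad_is_nan)
```

If both checks print `True`, one thing to try (an assumption, not a verified fix) is a larger `eps` such as `1e-6`, or clamping the base of `torch.pow` away from zero before raising it to the power.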

Any idea where this error might be coming from?