Hello everyone,

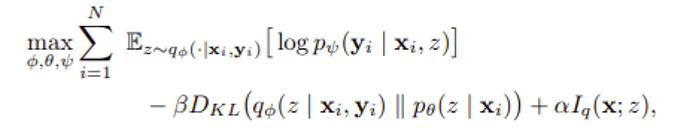

I’m trying to understand a CVAE implemented in a paper, and it just does not make sense to me that the prior for z in the ELBO depends on X. Isn’t the prior supposed to be an assumption made before you have seen the data?
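For reference, here is the standard conditional ELBO for a CVAE with a conditional prior. I'm assuming this is roughly what the figure shows; the parameter names θ (prior/decoder) and φ (recognition network q) are my own notation, not necessarily the paper's:

$$
\log p_\theta(y \mid x) \;\ge\; \mathbb{E}_{q_\phi(z \mid x, y)}\big[\log p_\theta(y \mid x, z)\big] \;-\; \mathrm{KL}\big(q_\phi(z \mid x, y)\,\|\,p_\theta(z \mid x)\big)
$$

Every term is conditioned on x, and only q also sees y.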

Unlike typical autoencoders, this one does not aim to reconstruct x as x_hat, but to produce a different output, y. So my hypothesis is that the idea is not to approximate p with q but actually the other way around, because the labels won’t be available during inference, so we will probably use the prior then. The KL term makes sure that p produces a similar z to q without knowing y, while q does know y. So the neural network of p captures the relationship between x and y, which makes y unnecessary as an input. If that makes any sense…
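To make my hypothesis concrete, here is a minimal NumPy sketch, assuming both p(z|x) and q(z|x,y) output diagonal Gaussians (mean and log-variance). `prior_net` and `posterior_net` are hypothetical stand-ins (fixed random linear maps, not trained networks); the point is only which network sees y and where z comes from during training vs. inference:

```python
import numpy as np


def kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p):
    """KL( N(mu_q, diag(exp(logvar_q))) || N(mu_p, diag(exp(logvar_p))) ), summed over latent dims."""
    var_q = np.exp(logvar_q)
    var_p = np.exp(logvar_p)
    kl = 0.5 * (logvar_p - logvar_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)
    return kl.sum(axis=-1)


def sample_z(mu, logvar, rng):
    """Reparameterised sample z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * logvar) * eps


rng = np.random.default_rng(0)
X_DIM, Y_DIM, Z_DIM = 4, 3, 2

# Hypothetical stand-ins for the two encoders: fixed random linear maps.
W_prior = rng.standard_normal((X_DIM, 2 * Z_DIM))
W_post = rng.standard_normal((X_DIM + Y_DIM, 2 * Z_DIM))


def prior_net(x):
    """p(z | x): sees only x, so it can be used at inference time."""
    out = x @ W_prior
    return out[..., :Z_DIM], out[..., Z_DIM:]


def posterior_net(x, y):
    """q(z | x, y): also sees the label y, so it is only usable during training."""
    out = np.concatenate([x, y], axis=-1) @ W_post
    return out[..., :Z_DIM], out[..., Z_DIM:]


x = rng.standard_normal((5, X_DIM))
y = rng.standard_normal((5, Y_DIM))

# Training: z is drawn from q(z|x,y); the KL term pulls q and p(z|x) together.
# In a real model, gradients of this term would update both networks.
mu_q, logvar_q = posterior_net(x, y)
mu_p, logvar_p = prior_net(x)
z_train = sample_z(mu_q, logvar_q, rng)
kl = kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p)  # one KL value per example

# Inference: y is unknown, so z is sampled from the conditional prior p(z|x) instead.
z_test = sample_z(mu_p, logvar_p, rng)
```

The decoder p(y|x,z) would then take `z_train` during training and `z_test` at inference; it is left out here because it doesn't change where z comes from.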

My assumption is